Question Engine 2:Overview

(New page: This page outlines how I think the question engine should work in Moodle 2.0 or 2.1. Previous section: Rationale {{Work in progress}} ===Clar...) |

No edit summary |

||

| Line 5: | Line 5: | ||

{{Work in progress}} | {{Work in progress}} | ||

== | ==Normalise the database structure== | ||

At the moment, the some parts of the question_sessions and question_states tables and their relations are not normalised. This is a technical term for describing database designs. Basically, if the tables are normalised, then each 'fact' is stored in exactly one place. A normalised database is much less likely to get into an inconsistent state. Changing to a normalised structure should therefore increase robustness. | |||

In addition, I wish to change the tables, so the the responses received from the student are stored in a much more 'raw' form. That will mean that the responses can be saved much earlier in the sequence of processing, which will again increase robustness. It will also allow the sequence of responses from the student to be replayed more easily, making it easier to continue after a server crash, to regrade, and to write automatic test scripts. | |||

(+++Robustness, +Correctness) | |||

==New concept: Question interaction models== | |||

At the moment, the various sequences of states that a question can move through in response to student input is hard-coded. It is controlled by a combination of the compare_responses and grade_responses methods of the question types, and tangled logic in the question_process_responses function. This makes it difficult to add new ways on interacting with questions, for example certainty based marking. Also, the current code is tricky to keep working. | |||

I would like to separate out the control of how a question moves through different states, into what I will call question interaction models. Currently, Moodle has three or four of these: | |||

===Deferred feedback=== | |||

This is how the quiz currently work when adaptive mode is off. The student enters a response to each question, then does Submit all and finish at the end of their attempt. Only then do they get feedback and/or grades on each question, depending on the review settings. | |||

===Adaptive=== | |||

In this mode there is a separate submit button beside each question, so the student can submit each question individually during the attempt, and if they are wrong, try to improve their answer, although for a reduced grade. | |||

Currently, the way adaptive mode works from the student point of view is not very good. I propose to replace it with a new Interactive mode. See below. | |||

===Manually graded=== | |||

Essay questions need to be manually graded by the teacher, so you cannot really use them in adaptive mode (although currently there is nothing in the Quiz to stop you, which leads to confusing results). And it is not quite the same as Deferred feedback mode, because the student must wait for the teacher to grade their response after clicking submit all and finish. | |||

====Each attempt builds on last==== | |||

This is very similary to the deferred feedback model, except that in subsequent attempts, each question does not start blank, but instead with the student's last response from the previous attempt. | |||

Currently in Moodle there is nothing to stop you trying to combine Each attempt builds on last with Adaptive mode, although that combination does not make any sense to me. I think it simplifies things to treat this as a separate mode, although, of course, it will share code with Deferred feedback mode. | |||

There are also some new modes that I propose to add, either immediately, or shortly after the main part of the work: | |||

====Interactive==== | |||

This will replace adaptive mode. This is the model that has been used successfully for several years in the OU's [http://www.open.ac.uk/openmarkexamples/ OpenMark] system. The OU has also modified Moodle to work like this, but because of the way the quiz core currently works, I was not happy just merging the OU changes into Moodle core. In a sense, this whole document grew out of my thoughts about how to implement the OU changes in Moodle core properly. | |||

In the existing adaptive mode, after the student has clicked submit, the question both shows the feedback for the last answer the student submitted, while also letting the student change their answer. This can lead to weird results. For example create a numerical question 2 + 2 = 4. Attempt it. Enter 4. Click Submit. Enter 5. Click Save. You will see a page that seems to say that your answer is 5 and it is correct! | |||

In Interactive mode, the question is either in a state for the student to enter their answer, with a Submit button; or it is showing feedback for the student's previous attempt, with a Try again button to get back to the first state. | |||

The other difference is that students are only allowed a limited number of tries at each question (typically three). When they submit their third try, or when they submit a correct answer, that question is finished and they must go onto the next one. | |||

(This is quite difficult to explain. Try [https://students.open.ac.uk/openmark/s205.ayrf/ this example] and you should see how it works.) | |||

====Immediate feedback==== | |||

This would be like cross between Deferred feedback an Interactive. Each question has a submit button beside it, like in interactive mode, so the student can get the feedback immediately while they still remember their thought processes while answering the question. However, unlike the interactive model, there is no try again button. You only get one chance at each question. | |||

This is a necessary prerequisite for implementing MDL-11047, which is fairly frequently asked for in the quiz forum. | |||

====Certainty based marking with deferred feedback==== | |||

( | This takes any question that can use the deferred feedback model, and adds three radio buttons to the UI (higher, medium, lower) for the student to use to indicate how certain they are that their answer is correct. If they are more certain, the get more marks if they are right, but lose marks if they are wrong. This encourages students to reflect about their level of knowledge. | ||

=== | ====Certainty based marking with immediate feedback==== | ||

This is like immediate feedback mode with the certainty based marking feature. | |||

====Delegate to remote system==== | |||

The Opaque question type from contrib, which is used to run both OpenMark and [http://stack.bham.ac.uk/ STACK] questions inside a Moodle quiz was very difficult to implement within the current code. Since the remote system controls the flow of the question, it makes sense to use a custom Interaction model for this question type, and that will be possible in the new code. | |||

So, what exactly does the interaction model control? Given the current state of the question, it limits the range of actions that are possible. For example, in adaptive mode, there is a submit button next to each question. In non-adaptive mode, the only option is to enter an answer and have it saved, until the submit all and finish button is pressed. That is, it | So, what exactly does the interaction model control? Given the current state of the question, it limits the range of actions that are possible. For example, in adaptive mode, there is a submit button next to each question. In non-adaptive mode, the only option is to enter an answer and have it saved, until the submit all and finish button is pressed. That is, there will be a class in the PHP code for each model, and it will have methods that replace the old question_extract_responses, question_process_responses, question_process_comment and save_question_state functions in the current code. | ||

(+++Richness, ++Correctness, +Robustness) | (+++Richness, ++Correctness, +Robustness) | ||

===Brief digression: who controls the model, the quiz or the question?=== | |||

There is one design decisions that it took a long time for me to resolve, and I want to mention it here. | |||

The question is: Is the interaction model a property of the question, or the quiz? | |||

At the moment, there is a setting in the quiz that lets you change all the questions in the quiz from adaptive mode to non-adptive mode at the flick of a switch. Or, at least, it allows you to re-use the same questions in both adaptive and non-adaptive mode. This suggest that the interaction model is a property of the quiz. | |||

On the other hand, suppose that you wanted to introduce the feature that, in adaptive mode, the student gets different amounts of feedback after each try at getting the question right. For example, the first time they get it wrong the only get a brief hint. If they are wrong the second time they get a more detailed comment, and so on. In order to do this, you need more data (the various hints) in order to define an adaptive question that is irrelevant to a non-adaptive question. Also, certain question types (e.g. Opaque, from contrib) have to, of necessity, ignore the adaptive, non-adaptive setting. And I suggested above that, manually graded question types like Essay should really be considered to have a separate interaction model. This suggests that the interaction model is a properly of the individual question. (Although usability considerations suggest that a single quiz should probably be constructed from questions with similar interaction models.) | |||

I eventually concluded that both answers are right. That is, it is a good idea for the quiz to have a setting like adaptive/non-adaptive that sets out the teacher's intention of how each question in this quiz should behave. However, the exact choice of interaction model to use is up to the different question types. When a quiz attempts is started, each question type is asked something like "A quiz attempt is starting using 'adaptive mode'. Exactly which interaction model should be used for questions of this type in this attempt?" This gives questions that can only work in a certain way (for example essay questions) a chance to override the quiz setting. | |||

==Clarifying question states== | |||

Althought there is a database table called question_states, the column there that stores the type of state is called event, and the values stored there are active verbs like open, save, grade. The question_process_responses function is written in terms of these actions too. One nasty part is that during the processing, the event type can be changed several times, before it is finally stored in the database as a state. | |||

I would like to clearly separate the concept of the current state that a question is in, and the various actions that lead to a change of state. The actions will be handled by the interaction models, and the state will be stored in the database as a state. | |||

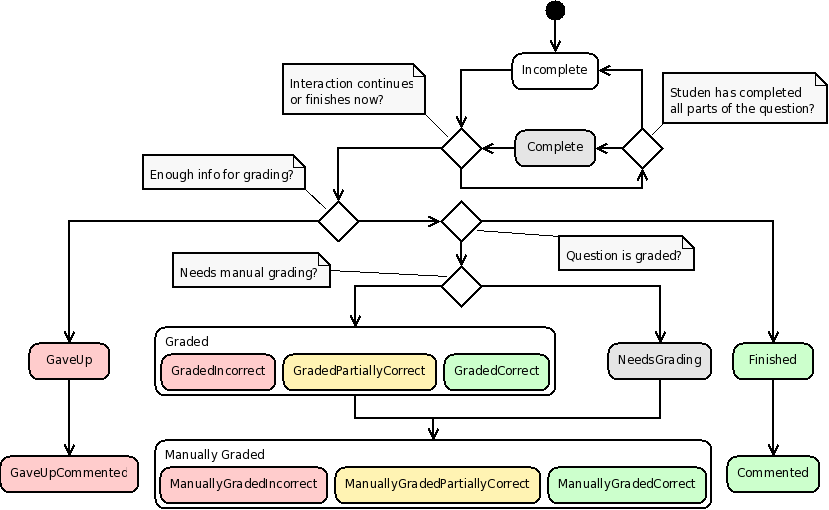

The list of available states will also be changed slightly to match the following diagram. This came from thinking about what information it was important to display in the quiz navigation block. | |||

[[Image:Question_state_diagram.png]] | |||

(+ | (+Correctness, +Richness). | ||

==Reorganise the code== | |||

Already, in the Moodle 2.0 developments, I have made several changes to the how the quiz and question bank code is organised, in an attempt to make it easier to maintain and understand. It also makes it easier to write automated tests. I hope that this work will have a further beneficial effect on the code quality. | Already, in the Moodle 2.0 developments, I have made several changes to the how the quiz and question bank code is organised, in an attempt to make it easier to maintain and understand. It also makes it easier to write automated tests. I hope that this work will have a further beneficial effect on the code quality. | ||

| Line 54: | Line 114: | ||

(+Robustness, +Efficiency) | (+Robustness, +Efficiency) | ||

==Simplified API for question types== | |||

The question is, is the | Although I said above "The student enters an answer to each question which is saved. Then when they submit the quiz attempt, all the questions are graded." In fact, that was a lie. Whenever a response is saved, the grade_responses method of the question type is called, even in the state is only being saved. This is confusing, to say the least, and very bad for performance in the case of a question type like JUnit (in contrib) which takes code submitted by the student, and compiles it in order to grade it. | ||

So some of the API will only change to the extent that certian funcitons will in future only be called when one would expect them to be. I think this can be done in a backwards-compatible way. | |||

Another change will be that, at the moment, question types have to implement tricky load/save_question_state methods that, basically, have to unserialise/serialise some of the state data in a custom way, so it can be stored in the answer column. This is silly design, and leads to extra, unnecessary database queries. By changing the database structure so that the students' responses are stored directly in a new table (after all, an HTTP POST request is really just an array of string key-value pairs, and there is a natural way to store that in a database) it should be posiblity to eliminate the need for these methods. | |||

(+Richness, +Correctness, +Efficiency) | |||

==See also== | ==See also== | ||

Revision as of 17:02, 1 October 2009

This page outlines how I think the question engine should work in Moodle 2.0 or 2.1.

Previous section: Rationale

Note: This page is a work-in-progress. Feedback and suggested improvements are welcome. Please join the discussion on moodle.org or use the page comments.

Normalise the database structure

At the moment, some parts of the question_sessions and question_states tables, and the relations between them, are not normalised. Normalisation is a technical term from database design. Basically, if the tables are normalised, then each 'fact' is stored in exactly one place. A normalised database is much less likely to get into an inconsistent state, so changing to a normalised structure should increase robustness.

In addition, I wish to change the tables so that the responses received from the student are stored in a much more 'raw' form. That will mean the responses can be saved much earlier in the sequence of processing, which will again increase robustness. It will also make it easier to replay the sequence of responses from the student, and hence to continue after a server crash, to regrade, and to write automatic test scripts.
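The following is a minimal sketch of the replay idea. It is written in Python for brevity, although Moodle itself is PHP. It assumes each stored step holds the action the student took plus the submitted fields exactly as received. `Step`, `replay` and the action names "save" and "finish" are hypothetical and are not Moodle's actual schema or API. The current response is derived from the steps rather than stored a second time, so each fact lives in one place. Replaying the same steps with a different grading function amounts to a regrade.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Step:
    """One stored step (hypothetical): the action taken and the raw submitted fields."""
    action: str                                    # e.g. "save" or "finish" (assumed names)
    data: dict[str, str] = field(default_factory=dict)


def replay(steps: list[Step],
           grade: Callable[[dict[str, str]], float]) -> tuple[dict[str, str], Optional[float]]:
    """Rebuild an attempt's outcome purely from its raw steps."""
    response: dict[str, str] = {}
    fraction: Optional[float] = None
    for step in steps:
        response.update(step.data)                 # later values overwrite earlier ones
        if step.action == "finish":
            fraction = grade(response)             # grading happens only at "finish"
    return response, fraction


steps = [Step("save", {"answer": "5"}), Step("save", {"answer": "4"}), Step("finish")]
print(replay(steps, lambda r: 1.0 if r.get("answer") == "4" else 0.0))  # ({'answer': '4'}, 1.0)
```

Passing a corrected grading function to `replay` regrades the attempt without touching the stored steps.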

(+++Robustness, +Correctness)

New concept: Question interaction models

At the moment, the various sequences of states that a question can move through in response to student input are hard-coded. They are controlled by a combination of the compare_responses and grade_responses methods of the question types, and tangled logic in the question_process_responses function. This makes it difficult to add new ways of interacting with questions, for example certainty based marking. The current code is also tricky to keep working correctly.

I would like to separate out the control of how a question moves through different states into what I will call question interaction models. Currently, Moodle has three or four of these:

Deferred feedback

This is how the quiz currently works when adaptive mode is off. The student enters a response to each question, then clicks Submit all and finish at the end of their attempt. Only then do they get feedback and/or grades on each question, depending on the review settings.

Adaptive

In this mode there is a separate Submit button beside each question. The student can submit each question individually during the attempt and, if they are wrong, try to improve their answer, although for a reduced grade.

Currently, adaptive mode does not work very well from the student's point of view. I propose to replace it with a new Interactive mode, described below.

Manually graded

Essay questions need to be manually graded by the teacher, so you cannot really use them in adaptive mode. Currently, however, nothing in the quiz stops you, which leads to confusing results. Nor is this quite the same as Deferred feedback mode, because after clicking Submit all and finish, the student must wait for the teacher to grade their response.

Each attempt builds on last

This is very similar to the deferred feedback model, except that in subsequent attempts each question does not start blank. Instead, it starts with the student's last response from the previous attempt.

Currently, nothing in Moodle stops you combining Each attempt builds on last with Adaptive mode, although that combination does not make any sense to me. I think it simplifies things to treat this as a separate mode, although it will, of course, share code with Deferred feedback mode.

There are also some new modes that I propose to add, either immediately, or shortly after the main part of the work:

Interactive

This will replace adaptive mode. It is the model that has been used successfully for several years in the OU's OpenMark system (http://www.open.ac.uk/openmarkexamples/). The OU has also modified Moodle to work like this. However, because of the way the quiz core currently works, I was not happy just merging the OU changes into Moodle core. In a sense, this whole document grew out of my thoughts about how to implement the OU changes in Moodle core properly.

In the existing adaptive mode, after the student has clicked Submit, the question shows the feedback for the last answer the student submitted while also letting the student change their answer. This can lead to weird results. For example, create a numerical question 2 + 2 = 4 and attempt it. Enter 4 and click Submit. Then enter 5 and click Save. You will see a page that seems to say that your answer is 5 and it is correct!

In Interactive mode, the question is either in a state for the student to enter their answer, with a Submit button; or it is showing feedback for the student's previous attempt, with a Try again button to get back to the first state.

The other difference is that students are only allowed a limited number of tries at each question (typically three). When they submit their third try, or when they submit a correct answer, that question is finished and they must go on to the next one.

(This is quite difficult to explain. Try this example, https://students.open.ac.uk/openmark/s205.ayrf/, and you should see how it works.)
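The two-state behaviour described above can be sketched as a small state machine. This is a hypothetical Python illustration, not Moodle code. The class name, the mode strings and the exact-match grading are assumptions. The point it shows is that the answer can only be changed while the question is in the answering state, so the "5 is correct" confusion of adaptive mode cannot arise.

```python
class InteractiveQuestion:
    """Hypothetical sketch of the Interactive model: the question is either
    accepting an answer (Submit) or showing feedback (Try again)."""

    def __init__(self, correct_answer: str, max_tries: int = 3):
        self.correct_answer = correct_answer   # exact-match grading is an assumption
        self.max_tries = max_tries
        self.tries_used = 0
        self.mode = "answering"                # "answering", "feedback" or "finished"
        self.last_answer = None

    def submit(self, answer: str) -> bool:
        if self.mode != "answering":
            raise ValueError("Submit is only available while answering")
        self.tries_used += 1
        self.last_answer = answer
        right = answer == self.correct_answer
        if right or self.tries_used >= self.max_tries:
            self.mode = "finished"             # move on to the next question
        else:
            self.mode = "feedback"             # show feedback, offer Try again
        return right

    def try_again(self) -> None:
        if self.mode != "feedback":
            raise ValueError("Try again is only available while showing feedback")
        self.mode = "answering"


q = InteractiveQuestion("4")
print(q.submit("5"), q.mode)   # False feedback
q.try_again()
print(q.submit("4"), q.mode)   # True finished
```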

Immediate feedback

This would be like a cross between Deferred feedback and Interactive. As in interactive mode, each question has a Submit button beside it. The student can therefore get feedback immediately, while they still remember their thought processes from answering the question. However, unlike the interactive model, there is no Try again button. You only get one chance at each question.

This is a necessary prerequisite for implementing MDL-11047, which is requested fairly often in the quiz forum.

Certainty based marking with deferred feedback

This takes any question that can use the deferred feedback model and adds three radio buttons to the UI (higher, medium, lower). The student uses them to indicate how certain they are that their answer is correct. If they are more certain, they get more marks if they are right, but lose marks if they are wrong. This encourages students to reflect on their level of knowledge.
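This page does not specify the actual marks. The sketch below therefore assumes a scheme modelled on Gardner-Medwin's LAPT certainty-based marking, where the mark pairs for right and wrong answers are (1, 0), (2, -2) and (3, -6). The Python names are hypothetical. The sketch also computes the expected mark for a student who believes they are right with probability p. This shows why such a scheme rewards honest reflection: the best choice depends on how sure the student really is.

```python
# Assumed mark scheme, NOT specified on this page: modelled on the LAPT
# certainty-based marking scheme. Values are (mark if right, mark if wrong).
CBM_MARKS: dict[str, tuple[int, int]] = {
    "lower": (1, 0),
    "medium": (2, -2),
    "higher": (3, -6),
}


def cbm_mark(correct: bool, certainty: str) -> int:
    """Mark for one question, given correctness and the chosen certainty."""
    right_mark, wrong_mark = CBM_MARKS[certainty]
    return right_mark if correct else wrong_mark


def expected_mark(p_right: float, certainty: str) -> float:
    """Expected mark for a student who is right with probability p_right."""
    right_mark, wrong_mark = CBM_MARKS[certainty]
    return p_right * right_mark + (1 - p_right) * wrong_mark


def best_certainty(p_right: float) -> str:
    """Certainty level that maximises the expected mark."""
    return max(CBM_MARKS, key=lambda c: expected_mark(p_right, c))


for p in (0.5, 0.75, 0.9):
    print(p, best_certainty(p))
```

Under these assumed marks, "medium" overtakes "lower" once p exceeds 2/3, and "higher" overtakes "medium" once p exceeds 0.8.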

Certainty based marking with immediate feedback

This is like immediate feedback mode with the certainty based marking feature.

Delegate to remote system

The Opaque question type from contrib, which is used to run both OpenMark and STACK (http://stack.bham.ac.uk/) questions inside a Moodle quiz, was very difficult to implement within the current code. Since the remote system controls the flow of the question, it makes sense to use a custom interaction model for this question type, and that will be possible in the new code.

So, what exactly does the interaction model control? Given the current state of the question, it limits the range of actions that are possible. For example, in adaptive mode there is a Submit button next to each question. In non-adaptive mode, the only option is to enter an answer and have it saved, until the Submit all and finish button is pressed. In the code, there will be a PHP class for each model, with methods that replace the old question_extract_responses, question_process_responses, question_process_comment and save_question_state functions.
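The following is a minimal sketch of the "one class per model" idea, written in Python rather than Moodle's PHP. The names are hypothetical: the base class `InteractionModel`, its `allowed_actions` and `process_action` methods, and the state names "todo", "complete" and "graded". They are not the real Moodle API or the states in the diagram. The deferred feedback model only saves on "save" and grades once on "finish". This also avoids the repeated calls to grade_responses described later on this page.

```python
from abc import ABC, abstractmethod


class InteractionModel(ABC):
    """Hypothetical base class: decides which actions are allowed in a state
    and which state results from an action."""

    @abstractmethod
    def allowed_actions(self, state: str) -> set[str]: ...

    @abstractmethod
    def process_action(self, state: str, action: str, response: dict) -> str: ...


class DeferredFeedback(InteractionModel):
    def __init__(self, grade):
        self.grade = grade          # callable: response dict -> fraction in [0, 1]
        self.fraction = None

    def allowed_actions(self, state):
        # Hypothetical states: the student may save or finish until graded.
        return {"save", "finish"} if state in ("todo", "complete") else set()

    def process_action(self, state, action, response):
        if action not in self.allowed_actions(state):
            raise ValueError(f"{action!r} not allowed in state {state!r}")
        if action == "save":
            return "complete" if response else "todo"   # save only, no grading
        self.fraction = self.grade(response)             # grade once, at finish
        return "graded"
```

The subclass only needs to encode the rules for its own model. An Interactive or certainty-based model would be another subclass with different allowed actions and transitions.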

(+++Richness, ++Correctness, +Robustness)

Brief digression: who controls the model, the quiz or the question?

There is one design decision that took me a long time to resolve, and I want to mention it here.

The question is: Is the interaction model a property of the question, or the quiz?

At the moment, there is a setting in the quiz that lets you switch all the questions in the quiz between adaptive and non-adaptive mode at the flick of a switch. At least, it allows you to re-use the same questions in both adaptive and non-adaptive mode. This suggests that the interaction model is a property of the quiz.

On the other hand, suppose that you wanted to introduce the feature that, in adaptive mode, the student gets different amounts of feedback after each try at getting the question right. For example, the first time they get it wrong they only get a brief hint. If they are wrong the second time, they get a more detailed comment, and so on. To do this, you need extra data (the various hints) to define an adaptive question, and that data is irrelevant to a non-adaptive question. Also, certain question types (e.g. Opaque, from contrib) necessarily have to ignore the adaptive/non-adaptive setting. And I suggested above that manually graded question types like Essay should really be considered to have a separate interaction model. This suggests that the interaction model is a property of the individual question. (Although usability considerations suggest that a single quiz should probably be constructed from questions with similar interaction models.)

I eventually concluded that both answers are right. That is, it is a good idea for the quiz to have a setting like adaptive/non-adaptive that sets out the teacher's intention of how each question in this quiz should behave. However, the exact choice of interaction model to use is up to the different question types. When a quiz attempt is started, each question type is asked something like "A quiz attempt is starting using 'adaptive mode'. Exactly which interaction model should be used for questions of this type in this attempt?" This gives questions that can only work in a certain way (for example essay questions) a chance to override the quiz setting.
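The sketch below illustrates the "quiz states an intention, question type decides" negotiation. It is in Python, and every name is hypothetical, including the method `choose_model` and the model identifiers. By default a question type follows the quiz's preferred mode. Types like Essay or Opaque override it.

```python
# Hypothetical names throughout: the quiz states a preferred mode, and each
# question type picks the interaction model it will actually use.
class QuestionType:
    def choose_model(self, preferred: str) -> str:
        return preferred                      # most types follow the quiz setting


class EssayType(QuestionType):
    def choose_model(self, preferred: str) -> str:
        return "manually_graded"              # a teacher must grade, whatever the quiz asks


class OpaqueType(QuestionType):
    def choose_model(self, preferred: str) -> str:
        return "delegate_to_remote"           # the remote system controls the flow


def start_attempt(preferred: str, question_types: dict[str, QuestionType]) -> dict[str, str]:
    """Ask each question type which model to use for this attempt."""
    return {name: qt.choose_model(preferred) for name, qt in question_types.items()}


print(start_attempt("adaptive", {"numerical": QuestionType(), "essay": EssayType()}))
# {'numerical': 'adaptive', 'essay': 'manually_graded'}
```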

Clarifying question states

Although there is a database table called question_states, the column there that stores the type of state is called event, and the values stored there are active verbs like open, save and grade. The question_process_responses function is written in terms of these actions too. One nasty part is that during processing, the event type can be changed several times before it is finally stored in the database as a state.

I would like to clearly separate the concept of the current state that a question is in from the various actions that lead to a change of state. The actions will be handled by the interaction models, and the state will be stored in the database as a state, not as an action.

The list of available states will also be changed slightly to match the following diagram. It came from thinking about what information is important to display in the quiz navigation block.

[Figure: question state diagram (Question_state_diagram.png)]

(+Correctness, +Richness)

Reorganise the code

Already, in the Moodle 2.0 developments, I have made several changes to how the quiz and question bank code is organised, to make it easier to maintain and understand. This also makes it easier to write automated tests. I hope that this work will have a further beneficial effect on the code quality.

(+Robustness, +Efficiency)

Simplified API for question types

Earlier I said, "The student enters an answer to each question, which is saved. Then, when they submit the quiz attempt, all the questions are graded." In fact, that was a lie. Whenever a response is saved, the grade_responses method of the question type is called, even if the state is only being saved. This is confusing, to say the least. It is also very bad for performance with a question type like JUnit (in contrib), which takes code submitted by the student and compiles it in order to grade it.

So, for some of the API, the only change will be that certain functions are, in future, called only when one would expect them to be. I think this can be done in a backwards-compatible way.

Another change concerns the load/save_question_state methods. At the moment, question types have to implement these tricky methods, which basically unserialise/serialise some of the state data in a custom way so that it can be stored in the answer column. This is a silly design, and it leads to extra, unnecessary database queries. An HTTP POST request is really just an array of string key-value pairs, and there is a natural way to store that in a database. By changing the database structure so that the students' responses are stored directly in a new table, it should be possible to eliminate the need for these methods.
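The following is a minimal sketch of the "natural way to store key-value pairs" using Python's built-in sqlite3 module. The table name `step_data`, its columns and both helper functions are hypothetical illustrations, not the schema from the Design section. Each submitted field becomes one row, so no question type needs its own serialisation code.

```python
import sqlite3

# Hypothetical table: one row per submitted field of one step.
conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE step_data (
        stepid INTEGER NOT NULL,
        name   TEXT    NOT NULL,
        value  TEXT    NOT NULL,
        PRIMARY KEY (stepid, name)
    )""")


def save_step_data(conn: sqlite3.Connection, stepid: int, post: dict[str, str]) -> None:
    """Store the submitted fields as-is: no per-question-type serialisation."""
    conn.executemany(
        "INSERT INTO step_data (stepid, name, value) VALUES (?, ?, ?)",
        [(stepid, name, value) for name, value in post.items()],
    )


def load_step_data(conn: sqlite3.Connection, stepid: int) -> dict[str, str]:
    """Read one step's fields back as a plain dict of strings."""
    rows = conn.execute(
        "SELECT name, value FROM step_data WHERE stepid = ? ORDER BY name", (stepid,))
    return dict(rows.fetchall())


save_step_data(conn, 1, {"answer": "4", "unit": "cm"})
print(load_step_data(conn, 1))  # {'answer': '4', 'unit': 'cm'}
```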

(+Richness, +Correctness, +Efficiency)

See also

The next section, Design, gives the detailed design of the above solution.

- Back to Question Engine 2