Learning Analytics Specification

| Learning Analytics | |
|---|---|
| Project state | Planning |
| Tracker issue | XXX |
| Discussion | XXX |
| Assignee | XXX |

| Note: This specification is obsolete; it was discarded in favour of the current Moodle Analytics API. |

This is a specification for a Learning Analytics system including an API for collection and combination of learning analytics data and a number of interfaces for presenting this information.

The specification originated in the Learning Analytics working group at MoodleMoot US 2015 (course, notes).

What are Learning Analytics?

Learning Analytics are any piece of information that can help an LMS user improve learning outcomes. Users include students, teachers, administrators and decision-makers.

There are a number of existing reports, blocks and other plugins for Moodle (standard and additional) that provide analytics. A list of Learning Analytics plugins is available in the Moodle user docs.

Why is a Learning Analytics system needed?

Although there are already a number of sources of learning analytics, there is no API or consistent source that combines data from multiple sources and makes it accessible to plugins throughout Moodle. A framework that relies on a common language will allow the development of learning analytics tools that gather and present analytics information.

Learning analytics are needed to inform teachers about students, to inform the staff who support teachers, and to inform students themselves. The primary need for learning analytics has been identified as promoting student retention by identifying each student's status (at risk, normal, ahead) based on their activity in a course.

??? Will we ever need more than three states? Adding this as a constraint does simplify a lot of the design, but will we regret it later? ???

A learning analytics system should be:

- predictive (combining currently available data to show current status and to enable prediction of future status)

- proactive (notifying users when action is needed to change status)

- verifiable (able to compare predictions to actual outcomes to measure accuracy)

Validation against historical data is important because different institutions/organisations (and even courses within them) have different outcomes they wish to predict, e.g. course completion or grades, and different criteria that may be relevant, such as levels of participation, mastery of specific outcomes (if used), or depth or breadth of social network or content access. Verifying configurations against historical data will give confidence in how predictive they will be in future.

??? Keeping a history of LA values is not hard, but how could configurations be compared to outcomes later? ???

A learning analytics system will be able to make use of pre-fetched data that can be combined in varying ways, on the fly.

Predictions will be based on a configuration (weights applied to source data) based on institution/organisation calibration and able to be adjusted on the fly.

Learning Analytics data gathering API

The learning analytics system is made up of a number of components.

- Sources represent individual suppliers of pre-defined learning analytics/metrics. They are objects based on an extensible API, so new sources can be added and the number and nature of sources are not fixed.

- The Learning Analytics API consists of a number of data tables and a calculator that takes learning analytics and calculates a status value, based on the current configuration, as it is requested. It is an API in the sense that any other component can request the current status value for a user (student) in a given context (course).

- A number of essential user interfaces are defined initially, but there are other potential interfaces possible.

Sources of Learning Analytics

Each of the designated Learning Analytics sources should be pre-collected or accessed through simple queries so they can be combined in calculations on the fly. Teachers and other users should be able to manipulate weights to explore the effect this has on student status.

The Learning Analytics API is a pluggable system so that additional sources can be added. In this sense, sources are a sub-plugin of the Learning Analytics API.

The following sources were identified as important learning analytics that need to be made available.

- Course access/views (useful early in the course), relative to other students in the course

- Activity/resource views, reads (from event observer, using R in CRUD), relative to number of possible views

- Activity/resource submissions, postings, etc. (from event observer, using C,U in CRUD), relative to number of possible posts

- Gradebook current grades and course grade

- Completion status, if used

- Assessment feedback views (quiz, assignment, workshop, others?) (from event observer on specific events)

- Interaction between students (Social Network Analysis) (forum posts vs views, diversity of interaction vs number of) (pre-calculated from DB)

Learning analytics source values are pre-gathered or available through simple queries, so values are available for combination/calculation as needed. They will be recalculated when dependent information changes. Such changes are observed through specific events. For example, when a user accesses a course, the course access source is triggered and recalculates the related learning analytics value. When a new source is added, historical information may need to be reviewed to calculate a current value, which can then be kept up to date.

Sources produce a floating point value between zero and one and pass this to the Learning Analytics API for storage. This value limitation is important so that learning analytics can have relative meaning. The way of calculating this value will differ for each source because they are drawing on different information. Such information may be calculated based on:

- a proportion of a possible maximum grade/value,

- a normalised relative score for a user within a context,

- events taking place in relation to expected dates (e.g. submitting before a deadline), or

- a more complex algorithmic combination of information.
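None of these strategies is fixed by the specification. As an illustration only, the first three could be sketched in Python as follows, assuming min-max normalisation for relative scores and a linear falloff for late submissions; the function names and the `grace` parameter are hypothetical.

```python
from __future__ import annotations

from datetime import datetime, timedelta
from typing import Optional


def clamp01(x: float) -> float:
    """Keep a source value inside the required 0..1 range."""
    return max(0.0, min(1.0, x))


def proportion_of_max(achieved: float, maximum: float) -> float:
    """Value as a proportion of a possible maximum (e.g. grade / maximum grade)."""
    if maximum <= 0:
        return 0.0
    return clamp01(achieved / maximum)


def relative_score(user_count: float, all_counts: list[float]) -> float:
    """Min-max normalised score of one user within a context.

    all_counts holds the raw counts of every user in the context, including this one.
    """
    lo, hi = min(all_counts), max(all_counts)
    if hi == lo:
        # Nobody differs from anybody else: full value if there was any activity at all.
        return 1.0 if hi > 0 else 0.0
    return clamp01((user_count - lo) / (hi - lo))


def deadline_score(submitted: Optional[datetime], due: datetime, grace: timedelta) -> float:
    """1.0 for an on-time submission, falling linearly to 0.0 over `grace` after the due date."""
    if submitted is None:
        return 0.0
    if submitted <= due:
        return 1.0
    lateness = (submitted - due) / grace  # timedelta / timedelta gives a float
    return clamp01(1.0 - lateness)
```

Whatever the strategy, the final `clamp01` step is what guarantees the 0..1 range that gives source values relative meaning.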

??? How will individual LA values be calculated? It will need to differ for each source? Can we describe how this will be done for the desired learning analytics, such as course access? ???

??? Should status values relate to relative or absolute performance in relation to other students? Should they be relative to expected status at a particular point in the course? How should this be calculated at varying stages through a course? Would this be relevant in courses that have no defined beginning or end? ???

LA sources themselves may have some configuration so they can be tweaked under different circumstances (such as different education sector or class sizes). Such configuration may be limited to the site level or it could be configured within a given context (course).

Some sources would relate to multiple activities. For example, views may relate to all activities/resources within a course, but submissions may be limited to a subset of activities.

??? Is it necessary for different activities to be distinguished by a source? Could it be the case that some activities should be included/excluded? Would this make the system too complex? ???

File hierarchy for a Source

- backup (folder)
  - moodle2 (folder)
    - backup_analytics_source_XXX.class.php
    - restore_analytics_source_XXX.class.php
- classes (folder)
  - event (folder)
    - analytics_source_configuration_created.php
    - analytics_source_configuration_updated.php
  - external.php
  - eventlist.php
  - eventobservers.php
- db (folder)
  - access.php
  - caches.php
  - install.xml
  - services.php
  - upgrade.php
- lang (folder)
  - en (folder)
    - analytics_source_XXX.php
- lib.php
- settings.php
- mod_form.php
- version.php

A source sub-plugin includes a number of common files in a standard structure. Some files need explanation for this plugin type.

| File(s) | Explanation |
|---|---|
| eventlist.php, eventobservers.php | These files relate to events that trigger the functionality of source plugins. Events start the process of filtering, calculating and storing an analytics value. |
| external.php, services.php | The learning analytics source may need to respond to external prompting through Web services. This may be the case if the source plugin relies on information external to Moodle. In most cases, external uses of activities and resources will be handled through those plugins, and the source will be notified when events take place. |
| caches.php | As source plugins interact with events, source configurations relevant to a context (course) may need to be cached to reduce reliance on database tables. |
| files in the event folder | Sources should not create events in relation to filtering and calculating analytics values. Such events would duplicate events already being generated (and potentially logged). Sources may trigger events related to configuration changes. ??? Should sources trigger events when they store a new analytics value? Could there be other plugins, within or outside the learning analytics system, that may want to know when new analytics values are stored? ??? |
| settings.php, mod_form.php, access.php | There is no human interface to source plugins, except for optional configuration interfaces. Not all source plugins will be configurable, but for those that are, it may be necessary to restrict configurability. A configuration for an individual course means additional filtering, which may in turn require additional information to be gathered, so this is a potential performance bottleneck. |
| install.xml, upgrade.php, backup_XXX.php, restore_XXX.php | These files relate primarily to source plugin database tables needed for configuration values and storage of counts (per user) for calculations. |
| lib.php | This is the home of standard functions relating to source plugins. |

Source plugins may add any other necessary files to accomplish their purposes, which will differ.

Functions of the Sources API

These functions are located within the lib.php file.

| Method | Comment |
|---|---|
| has_config() | Returns true if a source plugin is configurable. |
| instance_config($context) | Returns a form permitting configuration. |
| get_value($user, $context) | Returns the source learning analytics value (a floating point number from 0 to 1) for the given user in the given context. |
| get_additional_info($user, $context) | Provides additional textual information about the source value, such as how the value was calculated or some action by the student (e.g. the last course access). This can be used in more focussed reports. |
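A minimal Python sketch of the same contract, to show how a source plugin might be shaped. It is not Moodle's PHP API: the class names are hypothetical, `instance_config()` returns a plain dict as a stand-in for a form, and the example source assumes course views are scored relative to the most active student in the course.

```python
from __future__ import annotations

from abc import ABC, abstractmethod


class AnalyticsSource(ABC):
    """Hypothetical Python mirror of the source methods listed in the table."""

    def has_config(self) -> bool:
        # Sources are not configurable unless they override this.
        return False

    def instance_config(self, context_id: int) -> dict:
        # Stand-in for a configuration form: describes the available settings.
        return {}

    @abstractmethod
    def get_value(self, user_id: int, context_id: int) -> float:
        """Return a value in 0..1 for the user in the context."""

    def get_additional_info(self, user_id: int, context_id: int) -> str:
        return ""


class CourseAccessSource(AnalyticsSource):
    """Example source: course views relative to the most active student in the context."""

    def __init__(self, views: dict[tuple[int, int], int]):
        # views[(user_id, context_id)] -> pre-gathered number of course views
        self.views = views

    def get_value(self, user_id: int, context_id: int) -> float:
        in_context = [n for (_, ctx), n in self.views.items() if ctx == context_id]
        most = max(in_context, default=0)
        if most == 0:
            return 0.0
        return self.views.get((user_id, context_id), 0) / most

    def get_additional_info(self, user_id: int, context_id: int) -> str:
        return f"{self.views.get((user_id, context_id), 0)} course views"
```

Because `get_value` is abstract, every source must supply its own calculation, while the other methods fall back to "not configurable, no extra information".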

Learning Analytics API data structures

To calculate the status of individual users (students), learning analytics values are combined as a weighted sum, according to a configuration, and stored as status values. These status values can be used by numerous interfaces via requests to the Learning Analytics API.

Learning analytics are gathered and/or pre-calculated pieces of information from LA sources. They relate to an individual user's (student's) success as reported by the source. Each calculated learning analytics value is stored so that it can be accessed later. Usually only the latest values will be kept.

Learning Analytics

- User ID

- Context ID

- Source

- Timestamp

- Value {0..1}

A configuration defines how learning analytics should be combined within a particular context (course). Each source is given a weight in the configuration and the weighted sum is calculated to form the student status. Threshold values for at risk, normal and ahead are defined in the configuration.

Configuration

- Context ID

- Source

- Weight

- Thresholds

- Timestamp

Configurations can be validated when compared to final outcomes, such as course completion or course grade. Configurations can be saved as revisions with a timestamp, so that a series of status values can be recalculated later and so that the validity of different configurations can be compared over time.

Student status values can be requested for each user (student) in each relevant context (their enrolled courses). This information is available from the Learning Analytics API to various interfaces throughout Moodle.

Student Status

- User ID

- Context ID

- Status value {0..1}

- Status code {'At risk','Normal','Ahead'}

- Rank (relative to the status value of other users in the context)

- Timestamp

Status values are the weighted sum of learning analytics values, calculated based on the configuration within the context (course). Status values relate to status codes, which denote whether the user (student) is at risk, making normal progress or is ahead within the context (course). These status codes are bounded by thresholds defined in the configuration for the context (course).

Calculations should continue to work when a value from a particular source is absent. In that case, a value of zero should be assumed.
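The calculation described above can be sketched in Python as a weighted sum followed by a threshold lookup. The function names are hypothetical, and the boundary convention (a value equal to a threshold falls into the higher band) is an assumption the specification does not settle.

```python
from __future__ import annotations


def status_value(weights: dict[str, float], values: dict[str, float]) -> float:
    """Weighted sum of source values; a source with no stored value counts as zero."""
    return sum(weight * values.get(source, 0.0) for source, weight in weights.items())


def status_code(value: float, at_risk_below: float, normal_below: float) -> str:
    """Map a status value onto a status code using the configuration's thresholds."""
    if value < at_risk_below:
        return "At risk"
    if value < normal_below:
        return "Normal"
    return "Ahead"
```

For example, with weights of 0.25 (course access), 0.5 (grades) and 0.25 (completion), values of 0.8 and 0.6 for the first two and no completion value, the status value is 0.25 × 0.8 + 0.5 × 0.6 + 0.25 × 0 = 0.5, which is "Normal" for thresholds of 0.4 and 0.7.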

Functions of the Learning Analytics API

These functions are located within the lib/la/learning_analytics.php file.

| Method | Comment |
|---|---|
| get_status($user, $context, $time = "latest") | Returns the status object for the given student in the given context. An optional time may be provided to find the status value in the past, if a history of values has been kept. There is an implied recalculation when a student status value is accessed. |
| get_all_user_status($context) | Returns an array of status objects for all users in the given context. This is useful for reports. |
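As an illustration of the optional `$time` argument, a hypothetical in-memory history in Python might return either the latest status or the most recent status at or before a requested time. This sketch stores precomputed status records and leaves out the implied recalculation; all names are assumptions.

```python
from __future__ import annotations

import bisect
from dataclasses import dataclass
from typing import Optional, Union


@dataclass(frozen=True)
class StudentStatus:
    user_id: int
    context_id: int
    value: float     # status value, 0..1
    code: str        # 'At risk', 'Normal' or 'Ahead'
    timestamp: int   # Unix time when the status was calculated


class StatusHistory:
    """Hypothetical store keeping every status record, ordered by timestamp."""

    def __init__(self) -> None:
        self._records: dict[tuple[int, int], list[StudentStatus]] = {}

    def add(self, status: StudentStatus) -> None:
        records = self._records.setdefault((status.user_id, status.context_id), [])
        times = [r.timestamp for r in records]
        # Insert in timestamp order so lookups can use binary search.
        records.insert(bisect.bisect_right(times, status.timestamp), status)

    def get_status(self, user_id: int, context_id: int,
                   time: Union[int, str] = "latest") -> Optional[StudentStatus]:
        records = self._records.get((user_id, context_id), [])
        if not records:
            return None
        if time == "latest":
            return records[-1]
        # Most recent record at or before the requested time, if any.
        i = bisect.bisect_right([r.timestamp for r in records], time)
        return records[i - 1] if i > 0 else None

    def get_all_user_status(self, context_id: int) -> list[StudentStatus]:
        """Latest status of every user with a record in the context."""
        return [records[-1] for (_, ctx), records in self._records.items()
                if ctx == context_id and records]
```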

How it works

The following is a general scenario of how the system is used.

- Event takes place (e.g. a student accesses a course)

- Observing source is triggered

- Learning analytics value calculated

- Learning analytics value passed to LA API for storage

- LA value stored

...later...

- Status requested

- Configuration retrieved

- All relevant LA values gathered

- Calculation sums weighted LA values

- Status codes calculated based on thresholds

- Status object returned
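The flow above could be modelled roughly as follows: sources register for events, each triggered source stores its latest clamped value, and a status request later sums the stored values by weight. This is a hypothetical Python sketch, not Moodle's event observer API; the event name `\core\event\course_viewed` is used only as an example key.

```python
from __future__ import annotations

import time
from collections import defaultdict
from typing import Callable

# A source calculation receives (user_id, context_id) and returns a raw value.
SourceFn = Callable[[int, int], float]


class AnalyticsPipeline:
    """Hypothetical in-memory model of the event -> source -> storage -> status flow."""

    def __init__(self) -> None:
        # event name -> list of (source name, calculation) observing that event
        self.observers: dict[str, list[tuple[str, SourceFn]]] = defaultdict(list)
        # (user_id, context_id, source) -> (value, timestamp); only the latest is kept
        self.values: dict[tuple[int, int, str], tuple[float, float]] = {}

    def observe(self, event_name: str, source: str, fn: SourceFn) -> None:
        self.observers[event_name].append((source, fn))

    def trigger(self, event_name: str, user_id: int, context_id: int) -> None:
        """An event takes place: every observing source recalculates and stores its value."""
        for source, fn in self.observers.get(event_name, []):
            value = max(0.0, min(1.0, fn(user_id, context_id)))  # keep within 0..1
            self.values[(user_id, context_id, source)] = (value, time.time())

    def status(self, user_id: int, context_id: int, weights: dict[str, float]) -> float:
        """Later: gather the stored values and sum them using the configuration weights."""
        return sum(
            weight * self.values.get((user_id, context_id, source), (0.0, 0.0))[0]
            for source, weight in weights.items()
        )


pipeline = AnalyticsPipeline()
pipeline.observe(r"\core\event\course_viewed", "course_access", lambda user, ctx: 0.5)
pipeline.trigger(r"\core\event\course_viewed", 1, 10)
```

Storing values at event time keeps the status request itself cheap: it only reads and combines, which is what allows weights to be changed on the fly.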

Interfaces

The Learning Analytics system includes a number of interfaces. Initially, a basic set of interfaces will be developed, but the system can be extended with future interfaces, created as standard or additional plugins.

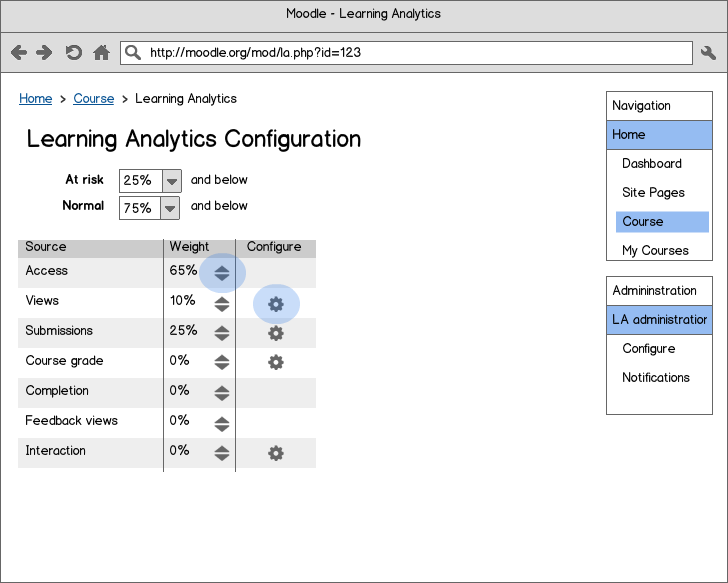

Configuration Interface (administrators, teachers, support staff)

The configuration interface is an important part of the system, although it may be the case that a teacher will never visit the page, relying on the list interface and the ability to tweak weights there. The configuration interface allows teachers/administrators to control which learning analytics sources are involved in status calculations, their weights and their configuration. A configuration is relative to a specific context (course), but it could be based on a default configuration set at the site level.

- Thresholds can be set for status values at risk and normal, implying a threshold for ahead.

- Each available source is listed in a table of sources. As the LA API is extensible, this list is not fixed and sources could be added.

- Each source has a weight {0..1} (represented as a percentage). All weights must always sum to 1 (100%). Weights can be adjusted up and down, with the weights of other sources dynamically adjusting to compensate. Adjustments will be saved without reloading the page. As the weight of a source is increased, the next lowest source (in sorted order) is automatically decreased; as a source is decreased, the next highest source is increased.

- When a source has a weight of zero, it is not included in status calculations and the source will not appear in other interfaces. To include a source in calculations and to show it on other interfaces, its weight will need to be increased above zero.

- Sources may be configurable, although this may not be the case for all sources. Configuration may include tweaking how the source presents its score for students, such as the inclusion/exclusion of certain activities. The configuration will appear in a form presented by the source, and this configuration interface will differ between sources. The configuration set for an individual source will be relevant to a context (course). It is assumed that a default configuration for a course will be sufficient for most teaching situations.

- At the site level, thresholds and a default set of weights can be established and applied for new courses. In this way, a relevant default configuration can be created for an institution and the general way that teaching happens there. The learning analytics system should be able to work, even if the configuration interface is never visited in a particular context.
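The weight rebalancing rule leaves some edge cases open. One possible reading, sketched in Python with whole-percent weights so the total stays exactly 100: an increase is taken from the next lower source, cascading further if that source reaches zero, and a decrease is given to the next higher source. The cascading and the handling of the top-ranked source are assumptions, and `adjust_weight` is a hypothetical name.

```python
from __future__ import annotations


def adjust_weight(weights: dict[str, int], source: str, new_weight: int) -> dict[str, int]:
    """Set one source's weight (whole percent) and rebalance the rest so the total stays 100.

    Assumes the incoming weights already sum to 100.
    """
    if not 0 <= new_weight <= 100:
        raise ValueError("weight must be between 0 and 100")
    # Sorted order: highest weight first (sorted() is stable, so ties keep their order).
    order = sorted(weights, key=lambda s: weights[s], reverse=True)
    i = order.index(source)
    result = dict(weights)
    delta = new_weight - weights[source]
    if delta > 0:
        # Increase: take from the next lower source, cascading downwards and then
        # upwards if the lower sources run out. Because the total is 100, the other
        # sources always hold at least `delta` between them.
        for other in order[i + 1:] + order[:i][::-1]:
            take = min(delta, result[other])
            result[other] -= take
            delta -= take
            if delta == 0:
                break
    elif delta < 0:
        # Decrease: give the freed weight to the next higher source
        # (or to the next lower one if this source is already at the top).
        neighbours = order[:i][::-1] + order[i + 1:]
        if not neighbours:
            raise ValueError("a single source must keep 100% of the weight")
        result[neighbours[0]] -= delta  # delta is negative, so this adds weight
    result[source] = new_weight
    return result
```

With weights of 40, 30, 20 and 10, raising the second source to 55 empties the third (20 to 0) and takes the remaining 5 from the fourth, leaving 40, 55, 0 and 5; the third source would then drop out of status calculations as described above.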

An optional feature of the system would allow multiple configurations for each context, so that configurations can be saved and automatically made active based on dates. This option would be set at the site level and would be turned off by default, to keep the system and interfaces as simple to use as possible.

Additional controls introduced with multiple configurations are as follows.

- A name, so different configurations can be identified.

- A date/time on which the configuration would be made active (automatically becoming active is something that can be enabled/disabled).

- A table of saved configurations with details and the potential to manually make a configuration active.
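A minimal sketch of how the active configuration might be chosen, assuming that the most recent activation wins, whether it came from a configuration's activation date or from a teacher's manual choice. The dataclass and function names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class NamedConfiguration:
    name: str
    weights: dict[str, int]
    activate_at: Optional[datetime] = None  # when it should become active
    auto_activate: bool = True              # automatic activation can be disabled


def active_configuration(configs: list[NamedConfiguration], now: datetime,
                         manual: Optional[tuple[str, datetime]] = None
                         ) -> Optional[NamedConfiguration]:
    """Return the configuration in force at `now`, or None if none has been activated.

    `manual` optionally records a manual activation as (configuration name, when).
    """
    # Candidate activations as (activation time, configuration) pairs.
    candidates = [(c.activate_at, c) for c in configs
                  if c.auto_activate and c.activate_at is not None and c.activate_at <= now]
    if manual is not None:
        name, when = manual
        chosen = next((c for c in configs if c.name == name), None)
        if chosen is not None and when <= now:
            candidates.append((when, chosen))
    if not candidates:
        return None
    # The most recent activation wins.
    return max(candidates, key=lambda pair: pair[0])[1]
```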

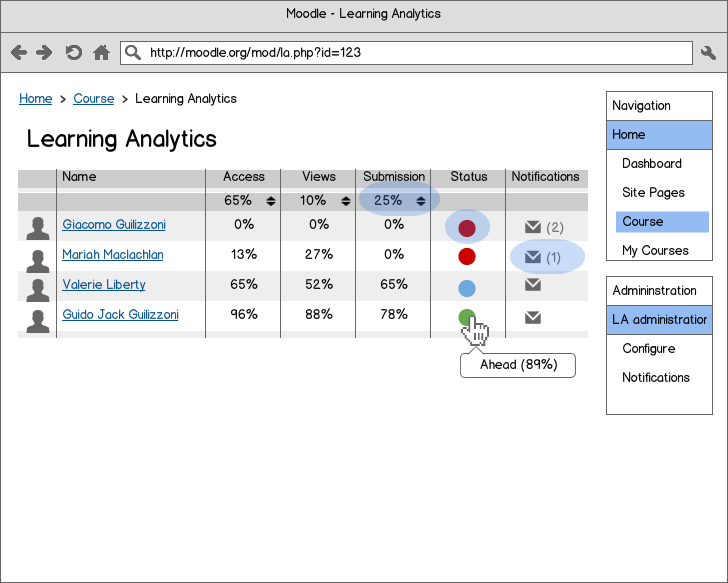

List of students sorted by status (teachers, support staff)

The list of students in a context (course) will be displayed on one page, with each student's status shown as an icon, together with the number of notifications sent to them by the learning analytics system. The list of students is always sorted by status value, but may be filtered.

- The list is always sorted by status value, with students having the lowest status values (at risk) listed at the top and ordered downwards towards students whose status is normal or ahead. This sorting denotes the relative performance/position/rank of the student in the class.

- When the size of the class exceeds a certain number, say 50, it may be useful to include pagination. To assist in finding individuals, filtering by name may be included.

- The row below the table header includes controls for on-the-fly weight adjustments. (These controls should be visually different from those that indicate sorting.) When weights are adjusted, other weights will dynamically compensate (using the same rules applied on the configuration page) and adjustments will be saved automatically. The effect of weighting changes will be an immediate recalculation of status values, potentially reordering students and providing new status icons.

- The status column will include a colour-coded status icon. These colours will need to be configurable at the site level as colours can have different meanings in different cultures; also, some sites may wish to match status icons to theme colours. The default colours will be:

- red for at risk,

- blue for normal and

- green for ahead.

- Hovering over the status icon will reveal the status and status value (as a percentage).

- The number of notifications sent to students from the learning analytics system will be shown. An envelope icon appears with the number of sent notifications beside it (when greater than zero). Clicking on the envelope takes the user to the notification interface, where they can send an individual notification to the relevant user (student).
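A rough Python sketch of the list logic: sort by status value with the lowest first, assign ranks before filtering so a filtered row still shows its class position, then paginate at 50 rows. The data shape, the function names and the hover text format are assumptions for illustration.

```python
from __future__ import annotations

# Default colours from the specification; assumed to be overridable per site.
DEFAULT_STATUS_COLOURS = {"At risk": "red", "Normal": "blue", "Ahead": "green"}


def hover_text(code: str, value: float) -> str:
    """Text revealed when hovering over a status icon, e.g. 'At risk (20%)'."""
    return f"{code} ({value:.0%})"


def student_list(statuses: list[dict], name_filter: str = "", page: int = 0,
                 per_page: int = 50) -> list[dict]:
    """One page of the student list, always sorted by status value (lowest first).

    Each entry is a dict with at least 'name' and 'value' keys; a 1-based 'rank'
    is added before filtering so it still reflects the student's position in the class.
    """
    ordered = sorted(statuses, key=lambda s: s["value"])
    ranked = [dict(s, rank=position) for position, s in enumerate(ordered, start=1)]
    if name_filter:
        needle = name_filter.lower()
        ranked = [s for s in ranked if needle in s["name"].lower()]
    start = page * per_page
    return ranked[start:start + per_page]
```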

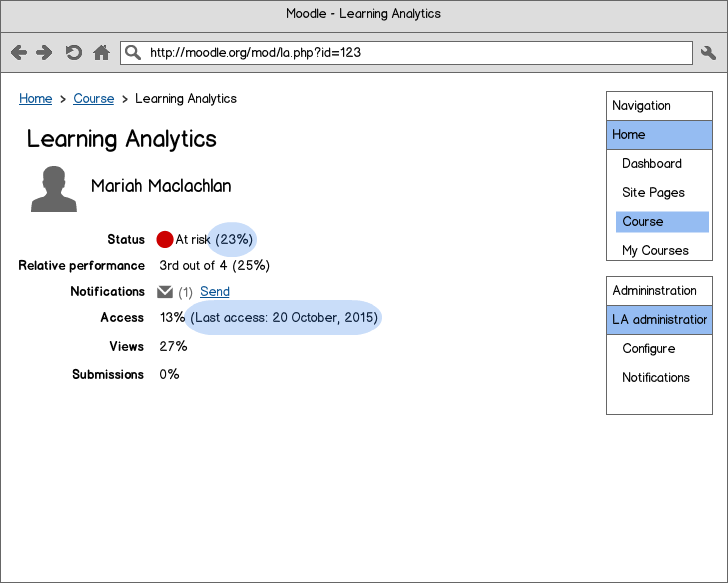

Detail view per user (manager, teacher, student)

The detail view shows details for an individual user (student). It can be viewed by teachers (and higher roles) and an option for students to see their own status details can be set through a capability.

- The status icon is shown with the textual term for the status and the status value (as a percentage).

- The relative performance of the user is given in relation to other users in the course. This represents their position in the course and a percentile is also given.

- The number of notifications sent from the system can be seen and there is an explicit link to allow individual notifications to be sent to the user.

- ??? Should there be a history of notifications? How should it be presented? ???

- Components of the status, from each source, are given and there could potentially be additional textual information provided by the relevant source (such as the last access date) or it could provide a graphical representation of information from the source (such as histogram of access counts over the last weeks).

- A user (manager, teacher) will be able to see the status of an individual student across all courses that they have the appropriate capabilities to view.

- By default, the user (student) referred to in details should not be able to see this page. A capability controlling this should be created so exceptions can be made. (Studies have shown that showing at-risk students detailed evidence of their progress leads to poorer outcomes.) Such users would be able to see the status information for all courses where they have the required capability.

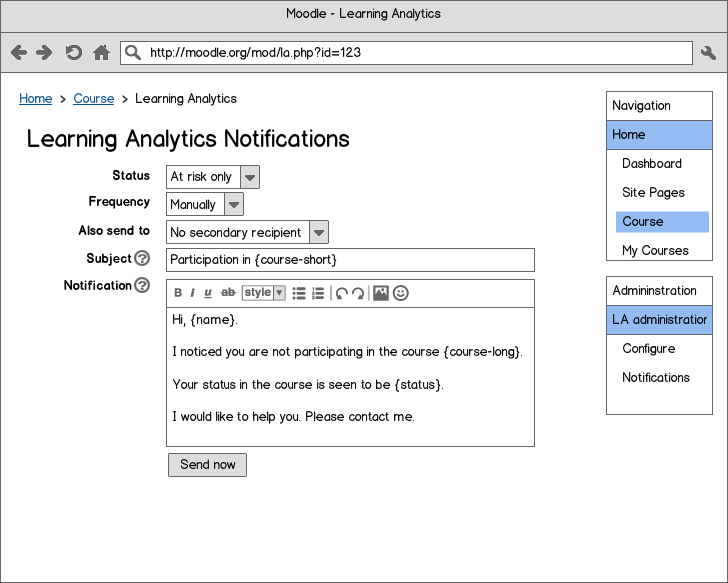

Push notifications

The notification interface allows notifications to be sent to users in response to their status. This interface is similar to the messaging interface, but needs to be independent from it so that:

- notifications can be sent based on triggers,

- specific information can be included in notifications and

- notifications can be tracked in relation to the learning analytics system.

- Notifications can be sent to a group of students who have been identified as at risk (default) or to everyone in the course.

- Notifications can be sent at a certain point in time, for example, every two weeks, or they can be sent Manually (default). When a frequency other than Manually is selected, the Send now button will be replaced by a Save button.

- Notifications sent to users (students) can also be sent to secondary users as a way of tracking notifications. The list of secondary recipients would be based on roles of users enrolled in the course, excluding those based on archetypal student roles. For example, a teacher could be sent notifications at the same time they are sent to students. If other roles are defined in the course, such as a manager or mentor, these could be nominated as a secondary recipient. A note should be automatically added to the subject when sent to secondary recipients.

- The message subject and body can contain placeholders, replaced by values when the message is sent. Help will need to be provided on using these placeholders. Default values for subject and body will be made available, based on language strings, and can be adjusted at the site level by an administrator.

- The notification interface can be used to send a message to an individual user (student) when this action is selected from the list or detail views. When sending to an individual, the status and frequency controls will be hidden. The only button possible will be Send now as this is an act of manual intervention.
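Recipient selection might be sketched as follows, assuming each student notification is copied to every user holding a nominated secondary role, and that roles with the `student` archetype are never eligible. The `[Copy, about user N]` subject note and all names here are hypothetical.

```python
from __future__ import annotations

STUDENT_ARCHETYPE = "student"


def plan_notifications(statuses: dict[int, str],
                       enrolments: dict[int, tuple[str, str]],
                       subject: str,
                       target: str = "at_risk",
                       secondary_roles: tuple[str, ...] = ()) -> list[tuple[int, str]]:
    """Return (recipient_id, subject) pairs for one round of notifications.

    statuses: {student_id: status code}
    enrolments: {user_id: (role name, role archetype)} for everyone in the course
    """
    if target == "at_risk":  # the default group
        students = [u for u, code in statuses.items() if code == "At risk"]
    else:  # "everyone"
        students = list(statuses)
    # Secondary recipients: nominated roles, never roles based on the student archetype.
    secondary = [u for u, (role, archetype) in enrolments.items()
                 if role in secondary_roles and archetype != STUDENT_ARCHETYPE]
    plan = []
    for student in students:
        plan.append((student, subject))
        for other in secondary:
            # A note is added to the subject for secondary recipients.
            plan.append((other, f"[Copy, about user {student}] {subject}"))
    return plan
```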

The following table lists available placeholders that can be used in a notification.

| Placeholder | What it does | Example |
|---|---|---|
| {short-course} | The short course name | Subject: Participation in {short-course} |
| {long-course} | The full length course name | I noticed you are not participating in {long-course}. |
| {name}, {first-name}, {surname} | The student's full name or parts of it. | Hi, {name}. |
| {status} | The calculated status code (At risk, Normal, Ahead). | Your status in the course is seen to be {status}. |
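Placeholder substitution could be as simple as a regular-expression replacement. In this Python sketch, unknown placeholders are left untouched so that typos remain visible, and `{name}` is assumed to be the first name followed by the surname; both choices, and the function names, are assumptions.

```python
from __future__ import annotations

import re

# Matches tokens such as {short-course} or {first-name}.
PLACEHOLDER = re.compile(r"\{([a-z-]+)\}")


def placeholder_values(short_course: str, long_course: str, first_name: str,
                       surname: str, status: str) -> dict[str, str]:
    return {
        "short-course": short_course,
        "long-course": long_course,
        "name": f"{first_name} {surname}",  # assumed "first surname" order
        "first-name": first_name,
        "surname": surname,
        "status": status,
    }


def fill_placeholders(template: str, values: dict[str, str]) -> str:
    """Replace known placeholders; unknown ones are left as typed."""
    return PLACEHOLDER.sub(lambda m: values.get(m.group(1), m.group(0)), template)
```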

Configuration verification interface (teachers, researchers)

It is seen as desirable to verify configurations to see how accurately they predicted outcomes at the end of the course. To achieve this, a history of configurations and status values would need to be kept. It may also be necessary to keep a history of source values if a user wishes to determine an optimal configuration (or set of configurations).

???What would this interface look like? How would it behave? ???
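One possible way to measure how well a configuration predicted outcomes, assuming course completion is the outcome and an "At risk" status is the prediction being checked. This hypothetical Python sketch counts correct and incorrect flags and reports accuracy, precision and recall; which status snapshot to compare, and when, is left open by the specification.

```python
from __future__ import annotations


def verify_predictions(predicted: dict[int, str], completed: dict[int, bool]) -> dict[str, float]:
    """Compare 'At risk' predictions with actual course completion.

    predicted: status code recorded for each student at some point in the course
    completed: final outcome per student; students without a known outcome are skipped
    """
    tp = fp = fn = tn = 0
    for user, code in predicted.items():
        if user not in completed:
            continue
        flagged = code == "At risk"
        did_not_complete = not completed[user]
        if flagged and did_not_complete:
            tp += 1  # correctly flagged
        elif flagged:
            fp += 1  # flagged, but completed anyway
        elif did_not_complete:
            fn += 1  # missed: not flagged, yet did not complete
        else:
            tn += 1  # correctly not flagged
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }
```

Comparing these figures for different saved configurations, over the same historical statuses and outcomes, is one way to judge which configuration was more predictive.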

Usage scenarios

The following scenarios were expressed by the working group and should be satisfied by the current system or by an extension to the proposed system. Usage scenarios are expressed in the form "As a [user type] I want to [goal] so I can [reason]". These scenarios are given so that the functional completeness of the system can be checked and tests (unit tests and automated functional tests) can be written.

- As a teacher I want to see the summary status of all of the students in my course so that I can intervene.

- As a teacher I want to sort or filter the summary status of all of the students to reduce the amount of information I need to consider.

- As a teacher I want to know if my students have accessed course materials before the course begins and in the first weeks.

- As a teacher I want to see the status of particular sources across students so that I can change elements of my course that need improvement.

- As a teacher I want to see a list of my students indicating whether I have interacted with them, so I can be sure to communicate with each student frequently.

- As a teacher I want to see the detailed status of a student in my course so that I know how to intervene.

- As a teacher I want to be notified as soon as a student becomes at risk in a course so that I can intervene.

- As a teacher I want to customise weights/configuration to better meet my specific course context.

- As a teacher I want to see how students are faring against a certain configuration so that I can understand how that may impact student success.

- As a teacher I want to customise the notification templates for my specific course.

- As a manager I want to see the detailed status of an advisee in a course so that I know how to intervene.

- As a manager I would like to see the status of a student in all of their classes so that I can intervene.

- As a manager I want to be notified as soon as a student becomes at risk in a course so that I can intervene.

- As a student I want to see my status in my current courses so I know which course to focus on.

- As a student I want to see my status in all my courses and know what it means so that I can talk with people about what I need to do.

- As a student I want to receive a notification when my status has changed so I can get feedback about my status in the course.

- As an administrator I want to set or change default weights/configurations to reflect institutional/organisational knowledge of appropriate practice.

- As an administrator I want to configure the default notification templates so that they are worded appropriately for the institution/organisation.

- As a decision-maker I want to know the level of student activity in the first two weeks of the course.

Future work

Once the Learning Analytics API is established, it could be extended in a number of ways and additional sources and interfaces would be possible.

Other potential extensions to the system

- Reporting of resource/activity effectiveness in contributing to learning outcomes

- Potential for students to opt in/out of data collection, analysis, reporting or exports. This may be necessary in some places. It might be possible to have student logging be anonymous or exported anonymously.

- Ability to export data as a static dump or as a stream of analytics information, possibly conforming to the IMS Caliper standard, for extra-system analysis.

- Third-party plugins that use the Learning Analytics API.

Other potential sources of Learning Analytics data

There are other possible sources of Learning Analytics. As well as those suggested above, here are some additional potential sources.

- performance in other courses enrolled (now & previously)

- student ratings on forums

- self-evaluation

- history of activity within the current course

- history of course activity within a faculty/category

- mood/sense-making

- activity in relation to deadlines

- time spent online based on activity captured through JavaScript

- demographic/profile data

- year/progress

- past grades, prior credit, GPA

- completion of prerequisites vs recommended prerequisites

- personal background

- native language, exceptions / accommodations

- cross-instance performance across multiple Moodle instances

- engagement

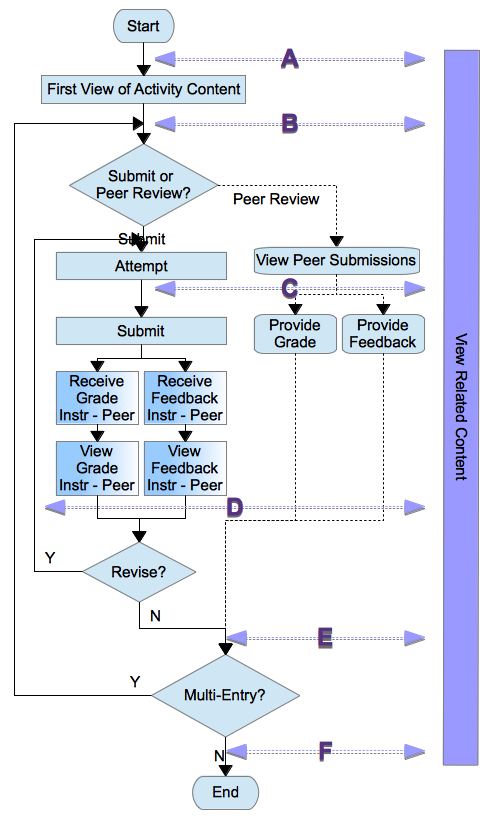

The diagram above illustrates a generalised process of learner engagement with Moodle modules.

For each process block, an Event record can be generated that includes module instance ID, date/time, user, etc. Learning Analytics should include counts of these process activities, but also time spans between processes.
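Counts and time spans could be derived from such event records roughly as follows. The tuple layout and the process names (such as `view` and `submit`) are hypothetical labels rather than the diagram's exact blocks; the Python sketch is illustrative only.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def engagement_metrics(events):
    """events: (user_id, module_instance_id, process, timestamp) tuples, in any order.

    Returns counts per (user, module instance, process) and, per (user, module
    instance), the time span in seconds between each pair of consecutive processes.
    """
    events = list(events)  # accept any iterable, since it is traversed twice
    counts = Counter((user, module, process) for user, module, process, _ in events)
    timeline = defaultdict(list)
    for user, module, process, timestamp in events:
        timeline[(user, module)].append((timestamp, process))
    spans = {}
    for key, items in timeline.items():
        items.sort()  # chronological order
        spans[key] = [
            (earlier, later, t2 - t1)
            for (t1, earlier), (t2, later) in zip(items, items[1:])
        ]
    return counts, spans
```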

Other potential interfaces

- An institutional summary view showing an overview of student success/retention in courses

- Activity restrictions based on risk status derived from learning analytics

- A block showing a student their current status and linking to their details, showing teachers a list of students (with at risk status?)

- A profile page element showing the student's status

- A student interface (block?) that shows what activity will increase their status

- An interface that shows what actions are most correlated with student success

- A graphical display of status over time, showing how students are progressing in the course

- A display of students who have become unenrolled, to determine commonalities among drop-outs

- A list of all courses with their level of teacher activity