Difference between revisions of «Usando Taller»

Revision as of 15:19, 24 March 2017

Note: Translation urgently needed. Please help translate this very important page (and other very important pages that urgently need translation).

Workshop phases

The workflow for the Workshop module can be viewed as having five phases. A typical Workshop activity can span days or even weeks. The teacher switches the activity from one phase to another.

The typical workshop follows a straight path from Setup through Submission, Assessment and Grading evaluation, ending with the Closed phase. However, an advanced recursive path is also possible.
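The straight path can be sketched as an ordered enumeration. This is only an illustration in Python: the names `Phase` and `next_phase` are hypothetical and are not part of Moodle's API, and the recursive path (switching back to an earlier phase) is not modelled.

```python
from enum import IntEnum
from typing import Optional


class Phase(IntEnum):
    """The five Workshop phases in their usual order (illustrative names)."""
    SETUP = 1
    SUBMISSION = 2
    ASSESSMENT = 3
    GRADING_EVALUATION = 4
    CLOSED = 5


def next_phase(current: Phase) -> Optional[Phase]:
    """Return the phase that follows `current` on the straight path.

    Returns None once the Workshop is closed.
    """
    if current is Phase.CLOSED:
        return None
    return Phase(current + 1)
```

In the real module the teacher performs each switch manually, so a helper like this would at most suggest the next step.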

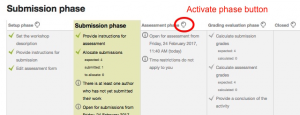

The progress of the activity is visualized in the so-called Workshop planner tool. It displays all Workshop phases and highlights the current one. It also lists all the tasks the user has in the current phase and shows whether each task is finished, not yet finished, or failed.

Setup phase

In this initial phase, Workshop participants cannot do anything (they can modify neither their submissions nor their assessments). Course facilitators use this phase to change workshop settings, modify the grading strategy or tweak assessment forms. You can switch to this phase any time you need to change the Workshop settings and prevent users from modifying their work.

Submission phase

In the submission phase, Workshop participants submit their work. Access control dates can be set so that, even while the Workshop is in this phase, submitting is restricted to the given time frame. You can specify the submission start date (and time), the end date (and time), or both.
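The effect of an optional start and end date can be sketched as a simple window check. This is a minimal Python illustration, not Moodle code: the function name `submission_allowed` and its parameters are assumptions, and treating both boundaries as inclusive is also an assumption.

```python
from datetime import datetime
from typing import Optional


def submission_allowed(now: datetime,
                       start: Optional[datetime] = None,
                       end: Optional[datetime] = None) -> bool:
    """Return True if `now` falls inside the optional submission window.

    A missing `start` or `end` means that side of the window is open.
    """
    if start is not None and now < start:
        return False
    if end is not None and now > end:
        return False
    return True
```

For example, with only an end date set, submissions are accepted at any time up to that date, provided the Workshop is in the submission phase.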

New feature in Moodle 3.0!

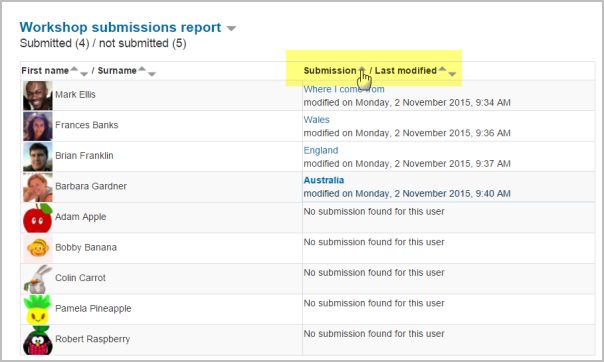

The Workshop submissions report lets teachers see who has and who has not submitted their work, and filter by submission and by last modification.

New feature in Moodle 3.1!

Students can delete their own submissions as long as they can still edit them and the submissions have not yet been assessed. A teacher can delete any submission at any time; however, if the submission has already been assessed, the teacher is warned that the assessment will also be deleted and that the reviewers' grades may be affected.
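The deletion rules can be summarized as two small predicates. This is a hedged Python sketch of the rule as stated here, not Moodle's actual capability checks; the function and parameter names are hypothetical.

```python
def can_delete_submission(is_teacher: bool, can_edit: bool, is_assessed: bool) -> bool:
    """Teachers may always delete; students only while editable and unassessed."""
    if is_teacher:
        return True
    return can_edit and not is_assessed


def deletion_needs_warning(is_teacher: bool, is_assessed: bool) -> bool:
    """A teacher deleting an assessed submission is warned about the side effects."""
    return is_teacher and is_assessed
```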

Assessment phase

If the Workshop uses the peer assessment feature, this is the phase in which Workshop participants assess the submissions allocated to them for review. As in the submission phase, access can be controlled by specifying the date and time from which and/or until which assessment is allowed.

Grading evaluation phase

The major task during this phase is to calculate the final grades for submissions and for assessments and to provide feedback for authors and reviewers. Workshop participants can no longer modify their submissions or their assessments in this phase. Course facilitators can manually override the calculated grades. Also, selected submissions can be set as published so they become available to all Workshop participants in the next phase. See Taller FAQ for instructions on how to publish submissions.

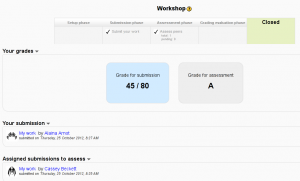

Closed

Whenever the Workshop is switched into this phase, the final grades calculated in the previous phase are pushed into the course Gradebook. As a result, the Workshop grades appear in the Gradebook and in the workshop (new in Moodle 2.4 onwards). In this phase, participants may view their submissions, the assessments of their submissions and, where available, other published submissions.

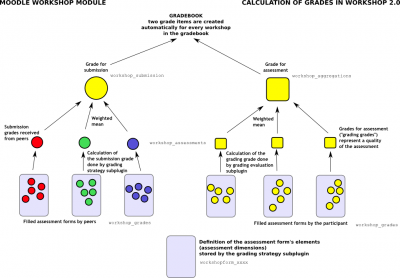

Workshop grading

The grades for a Workshop activity are obtained gradually at several stages and then finalized. The following scheme illustrates the process and also shows in which database tables the grade information is stored.

As you can see, every participant gets two numerical grades in the Gradebook. During the grading evaluation phase, the course facilitator can let the Workshop module calculate these final grades. Note that they are stored only in the Workshop module until the activity is switched to the final (Closed) phase. Therefore, it is quite safe to "play" with the grades until you are happy with them, and only then close the Workshop and push the grades into the Gradebook. You can even switch the phase back, recalculate or override the grades, and close the Workshop again so that the grades are updated in the Gradebook (note that you can also override the grades in the Gradebook itself).

During grading evaluation, the Workshop grades report gives you a comprehensive overview of all individual grades. The report uses various symbols and syntax:

| Value | Meaning |
|---|---|
| - (-) < Alice | An assessment has been allocated to Alice, but it has been neither assessed nor evaluated yet. |
| 68 (-) < Alice | Alice assessed the submission, giving the grade for submission 68. The grade for assessment (grading grade) has not been evaluated yet. |
| 23 (-) > Bob | Bob's submission was assessed by a peer, receiving the grade for submission 23. The grade for this assessment has not been evaluated yet. |
| 76 (12) < Cindy | Cindy assessed the submission, giving the grade 76. The grade for this assessment has been evaluated as 12. |
| 67 (8) @ 4 < David | David assessed the submission, giving the grade for submission 67 and receiving the grade 8 for this assessment. His assessment has weight 4. |
| 80 (20 / 17) > Eve | Eve's submission was assessed by a peer. Eve's submission received 80 and the grade for this assessment was calculated as 20. The teacher has overridden the grading grade to 17, probably with an explanation for the reviewer. |
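The basic entry syntax can be read mechanically. This Python sketch parses the plain forms (grade, grading grade in parentheses, optional `@ weight`, a direction symbol and a name). The `ReportEntry` type and `parse_entry` function are hypothetical helpers. The sketch assumes whole-number grades, as in the examples, and does not handle overridden grading grades.

```python
import re
from typing import NamedTuple, Optional


class ReportEntry(NamedTuple):
    submission_grade: Optional[int]  # None when shown as "-"
    grading_grade: Optional[int]     # None when shown as "-"
    weight: int                      # 1 unless "@ n" is present
    direction: str                   # "<": named person is the reviewer, ">": the author
    name: str


_ENTRY = re.compile(
    r"^\s*(?P<grade>-|\d+)\s*\((?P<grading>-|\d+)\)"
    r"(?:\s*@\s*(?P<weight>\d+))?\s*(?P<dir>[<>])\s*(?P<name>.+?)\s*$"
)


def _to_int(value: str) -> Optional[int]:
    """Convert a grade token to int, mapping the placeholder "-" to None."""
    return None if value == "-" else int(value)


def parse_entry(text: str) -> ReportEntry:
    """Parse one grades-report entry such as "67 (8) @ 4 < David"."""
    match = _ENTRY.match(text)
    if match is None:
        raise ValueError(f"unrecognized report entry: {text!r}")
    return ReportEntry(
        submission_grade=_to_int(match["grade"]),
        grading_grade=_to_int(match["grading"]),
        weight=int(match["weight"] or 1),
        direction=match["dir"],
        name=match["name"],
    )
```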

Grade for submission

The final grade for each submission is calculated as the weighted mean of the individual assessment grades given by all reviewers of that submission. The value is rounded to the number of decimal places set in the Workshop settings form.

Course facilitators can influence the grade for a given submission in two ways:

- by providing their own assessment, possibly with a higher weight than the peer reviewers' assessments have

- by overriding the grade with a fixed value
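The weighted mean can be sketched in a few lines of Python. The function name `submission_grade` and the `(grade, weight)` pair format are assumptions made for this illustration. Python's built-in `round` is used here, and its tie-breaking may differ from the rounding Moodle applies.

```python
from typing import Iterable, Tuple


def submission_grade(assessments: Iterable[Tuple[float, float]],
                     decimals: int = 0) -> float:
    """Weighted mean of (grade, weight) pairs, rounded to `decimals` places."""
    pairs = list(assessments)
    total_weight = sum(weight for _, weight in pairs)
    if total_weight == 0:
        raise ValueError("at least one assessment with non-zero weight is required")
    mean = sum(grade * weight for grade, weight in pairs) / total_weight
    return round(mean, decimals)
```

For instance, two peer assessments of 60 and 80 with weight 1 and a teacher assessment of 90 with weight 2 give (60 + 80 + 2 × 90) / 4 = 80.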

Grade for assessment

The grade for assessment tries to estimate the quality of the assessments that the participant gave to peers. This grade (also known as the grading grade) is calculated automatically by the Workshop module, which tries to do a teacher's typical job.

During the grading evaluation phase, you use a Workshop subplugin to calculate grades for assessment. At the moment, only one standard subplugin is available, called Comparison with the best assessment (other grading evaluation add-ons can be found in the Moodle plugins directory). The following text describes the method used by this subplugin.

Grades for assessment are displayed in parentheses () in the Workshop grades report. The final grade for assessment is calculated as the average of the individual grading grades.

There is no single formula that describes the calculation. However, the process is deterministic. Workshop picks one of the assessments as the best one, namely the one closest to the mean of all assessments, and gives it a 100% grade. Then it measures the 'distance' of every other assessment from this best one and gives each a lower grade the more it differs from the best (given that the best one represents the consensus of the majority of assessors). The parameter of the calculation is how strict we should be, that is, how quickly the grades fall as assessments differ from the best one.

If there are just two assessments per submission, Workshop cannot decide which of them is 'correct'. Imagine you have two reviewers, Alice and Bob. They both assess Cindy's submission. Alice says it is rubbish and Bob says it is excellent. There is no way to decide who is right. So Workshop simply says: OK, you are both right, and I will give you both a 100% grade for this assessment. To prevent this, you have two options:

- Either you provide an additional assessment so that the number of assessors (reviewers) is odd and Workshop is able to pick the best one. Typically, the teacher steps in and provides their own assessment of the submission to settle it.

- Or you may decide that you trust one of the reviewers more. For example, you know that Alice is much better at assessing than Bob is. In that case, you can increase the weight of Alice's assessment, let us say to "2" (instead of the default "1"). For the purposes of the calculation, Alice's assessment will be treated as if there were two reviewers holding exactly the same opinion, and it is therefore likely to be picked as the best one.
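The tie between two equally weighted reviewers, and the way a higher weight breaks it, can be shown with a deliberately simplified model. This Python sketch reduces each assessment to a single number and uses the absolute difference from the weighted mean as the distance. The real subplugin works differently in detail, and `closest_to_consensus` is a hypothetical helper.

```python
import math
from typing import Dict, List


def closest_to_consensus(grades: Dict[str, float],
                         weights: Dict[str, int]) -> List[str]:
    """Return the reviewers whose grade is closest to the weighted mean.

    More than one name is returned when there is a tie.
    """
    total_weight = sum(weights[name] for name in grades)
    mean = sum(grades[name] * weights[name] for name in grades) / total_weight
    distances = {name: abs(grade - mean) for name, grade in grades.items()}
    best = min(distances.values())
    return sorted(name for name, d in distances.items() if math.isclose(d, best))


grades = {"Alice": 20.0, "Bob": 90.0}

# Equal weights: both are equally far from the mean (55), so neither can be preferred.
tie = closest_to_consensus(grades, {"Alice": 1, "Bob": 1})

# Alice's weight raised to 2: the mean moves towards her grade and she is picked.
weighted = closest_to_consensus(grades, {"Alice": 2, "Bob": 1})
```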

Backward compatibility note

In Workshop 1.x, this case of exactly two assessors with the same weight is not handled properly and leads to wrong results: only one of them is lucky enough to get 100%, while the other gets a lower grade.

It is not the final grades that are compared

It is very important to know that the grading evaluation subplugin Comparison with the best assessment does not compare the final grades. Regardless of the grading strategy used, every completed assessment form can be seen as an n-dimensional vector of normalized values. So the subplugin compares the responses to all assessment form dimensions (criteria, assertions, ...). Then it calculates the distance between two assessments using variance statistics.

To illustrate this with an example, let us say you use the grading strategy Number of errors to peer-assess research essays. This strategy uses a simple list of assertions, and the reviewer (assessor) just checks whether each assertion is passed or failed. Let us say you define the assessment form using three criteria:

- Does the author state the goal of the research clearly? (yes/no)

- Is the research methodology described? (yes/no)

- Are references properly cited? (yes/no)

Let us say the author gets a 100% grade if all criteria are passed (that is, answered "yes" by the assessor), 75% if only two criteria are passed, 25% if only one criterion is passed and 0% if the reviewer gives "no" for all three statements.

Now imagine the work by Daniel is assessed by three colleagues - Alice, Bob and Cindy. They all give individual responses to the criteria in order:

- Alice: yes / yes / no

- Bob: yes / yes / no

- Cindy: no / yes / yes

As you can see, they all gave a 75% grade to the submission. But Alice and Bob also agree in their individual responses, while the responses in Cindy's assessment are different. The evaluation method Comparison with the best assessment tries to imagine what a hypothetical, absolutely fair assessment would look like. In the Development:Workshop 2.0 specification, David refers to it as "how would Zeus assess this submission?" and we estimate it would be something like this (we have no other way):

- Zeus 66% yes / 100% yes / 33% yes

Then we try to find the assessments that are closest to this theoretically objective assessment. We find that Alice's and Bob's are the best ones and give them a 100% grade for assessment. Then we calculate how far Cindy's assessment is from the best one. As you can see, Cindy's response matches the best one in only one of the three criteria, so Cindy's grade for assessment will not be as high.
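The example can be reproduced numerically. In this Python sketch each "yes" is encoded as 1 and each "no" as 0, the hypothetical "Zeus" assessment is the per-criterion mean, and the distance is a plain sum of squared differences. That distance is a simplifying assumption for illustration only; the actual subplugin uses its own variance-based measure.

```python
from typing import Dict, List, Sequence


def consensus(assessments: Dict[str, List[float]]) -> List[float]:
    """Per-criterion mean of all assessments (the hypothetical 'Zeus' vector)."""
    count = len(assessments)
    return [sum(column) / count for column in zip(*assessments.values())]


def distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Sum of squared differences between two response vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


# Responses to the three criteria: 1 = yes, 0 = no.
responses = {
    "Alice": [1, 1, 0],
    "Bob": [1, 1, 0],
    "Cindy": [0, 1, 1],
}

zeus = consensus(responses)  # roughly 66% / 100% / 33% "yes"
distances = {name: distance(vector, zeus) for name, vector in responses.items()}
best = min(distances, key=distances.get)  # Alice (Bob is equally close)
```

Alice and Bob end up at the same, smallest distance from the consensus, while Cindy is further away, even though all three gave the same overall grade.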

The same logic applies, correspondingly, to all other grading strategies. The conclusion is that the grade given by the best assessor does not need to be the one closest to the average, because assessments are compared at the level of individual responses, not final grades.

Groups and Workshop

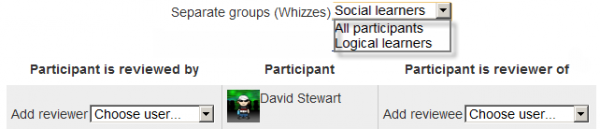

When a workshop is used in a course with separate or visible groups and groupings, you can filter by group using a drop-down menu in the Assessment phase, on the manual allocation page, in the grades report and so on.

See also

- Research paper Moodle Workshop activities support peer review in Year 1 Science: present and future by Julian M Cox, John Paul Posada and Russell Waldron

- Using Moodle Workshop module forum

- Using Moodle forum discussion in which David explains particular Workshop results

- Moodle Workshop 2.0 - a (simplified) explanation presentation by Mark Drechsler

- Development:Workshop for more information on the module infrastructure and on ways to extend the provided functionality by developing your own Workshop subplugins

- A Brief Journey into the Moodle 2.0 Workshop at moodlefairy's posterous