Caching

A cache is a collection of processed data that is kept on hand and re-used to avoid costly repeated database queries.

Moodle 2.4 saw the implementation of MUC, the Moodle Universal Cache. This system allows certain functions of Moodle (e.g. string fetching) to take advantage of different installed cache services (e.g. files, RAM, Memcached).

In future versions of Moodle, we will continue expanding the number of Moodle functions that use MUC, which will continue improving performance, but you can already start using it to improve your site.

This is quite a long document. If you're just looking for the additional caching options that you can add to Moodle to improve performance, head down to either Adding Cache Store Instances or Performance recommendations. If you want to know more about how caching works in Moodle, read on below.

General approach to performance testing

Here is the general strategy you should be taking:

- Build a test environment that is as close to your real production instance as possible (e.g. hardware, software, networking, etc.)

- Make sure to remove as many uncontrolled variables as you can from this environment (e.g. other services)

- Use a tool to place a realistic but simulated and repeatable load upon your server (e.g. JMeter or Selenium).

- Decide on a way to measure the performance of the server by capturing data (ram, load, time taken, etc.)

- Run your load and measure a baseline performance result.

- Change one variable at a time and re-run the load to see if performance gets better or worse. Repeat as necessary.

- When you discover settings that result in consistent performance improvement, apply to your production site.
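The baseline-then-change loop described above can be sketched in a few lines. This is only an illustration: `simulated_load` is a hypothetical stand-in for a real load run (for example a JMeter test plan), and the function names are made up for this example.

```python
import statistics
import time


def measure(workload, runs=5):
    """Run the workload several times and return the median wall-clock time in seconds."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        workload()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


def compare(baseline_seconds, candidate_seconds):
    """Return the relative change versus the baseline (negative means faster)."""
    return (candidate_seconds - baseline_seconds) / baseline_seconds


def simulated_load():
    # Hypothetical stand-in for a repeatable load test against the server.
    sum(i * i for i in range(50_000))


baseline = measure(simulated_load)
# ... change exactly one setting here, then re-run the same load ...
candidate = measure(simulated_load)
print(f"baseline={baseline:.4f}s candidate={candidate:.4f}s "
      f"change={compare(baseline, candidate):+.1%}")
```

Using the median of several runs makes a single noisy run less likely to hide or fake an improvement.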

Cache configuration in Moodle

Since Moodle 2.4, Moodle has provided a caching plugin framework to give administrators the ability to control where Moodle stores cached data. For most Moodle sites, the default configuration should be sufficient, and it is not necessary to change the configuration. For larger Moodle sites with multiple servers, administrators may wish to use memcached, mongodb or other systems to store cache data. The cache plugin screen provides administrators with the ability to configure what cache data is stored where.

Caching in Moodle is controlled by what is known as the Moodle Universal Cache, commonly referred to as MUC.

This document explains briefly what MUC is before proceeding into detail about the concepts and configuration options it offers.

The basic cache concepts in Moodle

Caching in Moodle isn't as complex as it first appears. A little background knowledge will go a long way in understanding how cache configuration works.

Cache types

Let's start with cache types (sometimes referred to as mode). There are three basic types of caches in Moodle.

The first is the application cache. This is by far the most commonly used cache type in code. All users share its information, and its data persists between requests. Information stored here is usually cached for one of two reasons: either it is required for the majority of requests and saves us one or more database interactions, or it is accessed less frequently but is resource intensive to generate.

By default, this information is stored in an organised structure within your Moodle data directory.

The second cache type is the session cache. This is just like the PHP session that you will already be familiar with; it uses the PHP session by default. You may be wondering why we have this cache type at all, but the answer is simple. MUC provides a managed means of storing and removing information that is required between requests. It offers developers a framework to use rather than having to re-invent the wheel and ensures that we have access to a controlled means of managing the cache as required.

It is important to note that this isn't a frequently used cache type as, by default, session cache data is stored in the PHP session, and the PHP session is stored in the database. Uses of the session cache type are limited to small datasets as we don't want to bloat sessions and thus put a strain on the database.

The third and final type is the request cache. Data stored in this cache type only persists for the lifetime of the request. If you're a PHP developer, think of it like a managed static variable.

This is by far the least used of the three cache types. Uses are often limited to information that will be accessed several times within the same request, usually by more than one area of code.

Cached information is stored in memory by default.
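As a rough mental model of the three lifetimes described above, here is a toy sketch. It is not how Moodle implements its caches; the class and its attribute names are invented purely to show which data survives a request and who shares it.

```python
class ToyCaches:
    """Toy model of the three cache types and their lifetimes."""

    def __init__(self):
        self.application = {}  # shared by all users, persists between requests
        self.sessions = {}     # one dict per user, persists between that user's requests
        self.request = {}      # discarded at the end of every request

    def handle_request(self, user, work):
        session = self.sessions.setdefault(user, {})
        try:
            return work(self.application, session, self.request)
        finally:
            # Request-scoped data never outlives the request.
            self.request = {}


def example_work(application, session, request):
    application["strings"] = "loaded"
    session["last_page"] = "/course"
    request["scratch"] = 42
    return request["scratch"]


caches = ToyCaches()
result = caches.handle_request("alice", example_work)
```

After the request finishes, the application and session data are still present, while the request data has gone.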

Cache types and multiple-server systems

If you have a system with multiple front-end web servers, the application cache must be shared between the servers. In other words, you cannot use fast local storage for the application cache but must use shared storage or some other form of shared cache such as a shared memcached.

The same applies to session cache unless you use a 'sticky sessions' mechanism to ensure that users always access the same front-end server within a session.

Cache backends

Cache backends are where data actually gets stored. These include things like the file system, PHP session, Memcached, and memory.

By default, just file system, PHP session, and memory are used within Moodle.

We don't require that a site has access to any other systems such as Memcached. Instead, that is something you are responsible for installing and configuring yourself.

When cache backends are mentioned, think of systems outside of Moodle that can store data: the MongoDB server, the Memcached server and similar "server" applications.

Cache stores

Cache stores are a plugin type within Moodle. They facilitate connecting Moodle to the cache backends discussed above. The standard Moodle has the three defaults mentioned above as well as Memcached, MongoDB, APC user cache (APCu) and Redis.

You can find other cache store plugins in the plugins database.

The code for these is located within cache/stores in your Moodle directory root.

Within Moodle, you can configure as many cache stores as your architecture requires. If you have several Memcached servers, for instance, you can create a cache store instance for each. By default, Moodle contains three cache store instances that get used when you've made no other configuration.

- A file store instance is created, which gets used for all application caches. It stores its data in your moodledata directory.

- A session store instance is created, which gets used for all session caches. It stores its data in the PHP session, which, by default, is stored in your database.

- A static memory store instance is created, which gets used for all request cache types. Data exists in memory for just the lifetime of a request.

Caches: what happens in code

Caches are created in code and are used by the developer to store data they see a need to cache.

Let's keep this section nice and short because perhaps you are not a developer. There is one very important point you must know about.

The developer does not get any say in where the data gets cached. They must specify the following information when creating a cache to use.

- The type of cache they require.

- The area of code this cache will belong to (the API, if you will).

- The cache's name, something they make up to describe in one word what the cache stores.

There are several optional requirements and settings they can specify as well, but don't worry about that at this point.

The important point is that they can't choose which cache backend to use; they can only choose the type of cache they want from the three detailed above.

How it ties together

This is best described in relation to roles played in an organisation.

- The system administrator installs the cache backends you wish to use. Memcached, XCache, APC and so on.

Moodle doesn't know about these; they are outside of Moodle's scope and purely the responsibility of your system administrator.

- The Moodle administrator creates a cache store instance in Moodle for each backend the site will make use of.

There can be one or more cache store instances for each backend. Some backends like Memcached have settings to create separated spaces within one backend.

- The developer has created caches in code and is using them to store data.

He doesn't know anything about how you will use your caches; he just creates a "cache" and tells Moodle what type it is best for.

- The Moodle administrator creates a mapping between a cache store instance and a cache.

That mapping tells Moodle to use the backend you specify to store the data the developer wants cached.

In addition to that, you can take things further still.

- You can map many caches to a single cache store instance.

- You can map multiple cache store instances to a single cache with priority (primary ... final)

- You can map a cache store instance to be the default store used for all caches of a specific type that don't otherwise have specific mappings.
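The relationship between a cache, its explicit mappings and the per-type defaults can be sketched as follows. This is a simplified model, not Moodle code: the `CacheDefinition` class, the `stores_for` function and the store instance names are all hypothetical labels chosen for the example.

```python
from dataclasses import dataclass, field

# Default store instance per cache type, following the defaults described
# earlier. The instance names are illustrative labels only.
DEFAULT_STORES = {
    "application": "default file store",
    "session": "default session store",
    "request": "default static memory store",
}


@dataclass
class CacheDefinition:
    mode: str       # "application", "session" or "request"
    component: str  # the code component the cache belongs to
    area: str       # the cache's name within that component
    mappings: list = field(default_factory=list)  # explicit stores, primary first


def stores_for(definition, defaults=DEFAULT_STORES):
    """Return the ordered list of store instances a cache will use."""
    if definition.mappings:
        return list(definition.mappings)
    # No explicit mapping: fall back to the default store for the cache type.
    return [defaults[definition.mode]]


strings = CacheDefinition(mode="application", component="core", area="string")
unmapped = stores_for(strings)
strings.mappings = ["memcached A", "default file store"]
mapped = stores_for(strings)
```

The developer only fills in the mode, component and area; everything about which store is used lives in the administrator's mappings and defaults.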

If this is the first time you are reading about the Moodle Universal Cache, this probably sounds pretty complex, but don't worry; it will be discussed in more detail as we work through how to configure caching in Moodle.

Advanced concepts

These concepts are things that most sites will not need to know or concern themselves about.

You should only start looking here if you are looking to maximise performance on large sites running over clustered services with shared cache backends, or on a multi-site architecture where information is being shared between sites.

Locking

The idea of locking is nothing new; it is the process of controlling access to avoid concurrency issues.

MUC has a second type of plugin: a cache lock plugin that is used when caches require it. To date, no caches have required it. A cache by nature is volatile, and any information that is absolutely mission-critical should be kept in a more permanent data store, likely the database.

Nonetheless, there is a locking system that cache definitions can require within their options, and that will be applied when interacting with a cache store instance.
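To make the general idea of file-based locking concrete, here is a minimal sketch that uses exclusive file creation as the lock. It illustrates the concept only and makes no claim about how Moodle's own lock plugin is implemented; the function names and the lock file name are hypothetical.

```python
import os
import tempfile


def acquire(lock_path):
    """Try to take the lock; return True on success, False if it is already held."""
    try:
        # O_EXCL makes creation fail if the file already exists, so only
        # one process can succeed in creating the lock file.
        fd = os.open(lock_path, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
    except FileExistsError:
        return False
    os.close(fd)
    return True


def release(lock_path):
    """Give the lock up by removing the lock file."""
    os.remove(lock_path)


with tempfile.TemporaryDirectory() as lock_dir:
    path = os.path.join(lock_dir, "example_key.lock")
    first = acquire(path)    # lock taken
    second = acquire(path)   # already held, so this attempt fails
    release(path)
    third = acquire(path)    # free again after release
```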

Sharing

Every bit of data that gets stored within a cache has a calculated unique key associated with it.

By default, part of that key is the site identifier making any content stored in the cache specific to the site that stored it. For most sites, this is exactly what you want.

However, in some situations it's beneficial to allow multiple sites, or sites that are linked in some way, to share cached data.

Of course, not all caches can be shared. Some certainly can, however, and by sharing them you can further reduce load and increase performance by maximising resource use.

This is an advanced feature; if you choose to configure sharing, please do so carefully.

To make use of sharing, you first need to configure identical cache store instances on the sites that should share information, and then on each site, set the sharing for the cache to the same value.

- Sites with the same site ID

- This is the default. It allows sites with the same site ID to share cached information. It is the most restrictive option but is going to work for all caches. All other options carry an element of risk in that you have to ensure the cache's information is applicable to all sites that will be accessing it.

- Sites running the same version

- All sites accessing the backend that have the same Moodle version can share the information this cache has stored in the cache store.

- Custom key

- For this, you manually enter a key to use for sharing. You must then enter the exact same key on the other sites you want to share information with.

- Everyone

- The cached data is accessible to all other sites accessing the data. This option puts the ball entirely in the Moodle administrator's court.

For example, if you had several Moodle sites, all on the same version, running on a server with APC installed, you could decide to map the language cache to the APC store and configure sharing for all sites running the same version.

The language cache for sites on the same version is safe to share in many situations, it is used on practically every page, and APC is extremely fast. These three points may result in a nice wee performance boost for your sites.

It is important to consider with the language cache that by sharing it between sites any language customisations will also be shared.
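The effect of the sharing options on the calculated key can be illustrated with a small sketch. The key layout and the use of SHA-1 here are assumptions made for the example, not Moodle's actual key algorithm, and the function names, site IDs and version numbers are hypothetical.

```python
import hashlib


def sharing_identifier(option, site_id, version, custom_key=None):
    """Pick the part of the key that decides which sites see the same entries."""
    if option == "site_id":
        return f"site:{site_id}"
    if option == "version":
        return f"version:{version}"
    if option == "custom":
        return f"custom:{custom_key}"
    if option == "everyone":
        return "everyone"
    raise ValueError(f"unknown sharing option: {option!r}")


def cache_key(identifier, definition, key):
    """Combine the sharing identifier, cache definition and item key into one hash."""
    raw = f"{identifier}|{definition}|{key}"
    return hashlib.sha1(raw.encode("utf-8")).hexdigest()


# Two sites with different site IDs but the same Moodle version.
by_version_a = cache_key(sharing_identifier("version", "siteA", "2024042200"), "core/string", "en")
by_version_b = cache_key(sharing_identifier("version", "siteB", "2024042200"), "core/string", "en")
by_site_a = cache_key(sharing_identifier("site_id", "siteA", "2024042200"), "core/string", "en")
by_site_b = cache_key(sharing_identifier("site_id", "siteB", "2024042200"), "core/string", "en")
```

With sharing by version the two sites compute the same key and so see each other's entries; with the default site ID sharing the keys differ and the data stays separate.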

The cache configuration screen

The cache configuration screen is your one-stop shop for configuring caching in Moodle.

It gives you an overview of how caching is currently configured for your site and it provides links to all of the actions you can perform to configure caching to your specific needs.

Accessing the cache configuration screen

The cache configuration screen can only be accessed by users with the moodle/site:config capability. By default this is only admins.

Once logged in, the configuration screen can be found in Site Administration > Plugins > Caching > Configuration.

Installed cache stores

This shows a list of the cache store plugins that you have installed.

For each plugin you can quickly see whether it is ready to be used (any PHP requirements have been met), how many store instances already exist on this site, the cache types that this store can be used for, what features it supports (advanced) and any actions you can perform relating to this store.

Often the only action available is to create a new store instance.

Most stores support having multiple instances. Not all do, however: you will see that the session cache and static request cache do not. For those two stores, it does not make sense to have multiple instances.

Configured store instances

Here you get a list of the cache store instances on this site.

- Store name

- The name given to this cache store instance when it is created so that you can recognise it. It can be anything you want and is only used so that you can identify the store instance.

- Plugin

- The cache store plugin of which this is an instance.

- Ready

- A tick gets shown when all PHP requirements have been met and any connection or set-up requirements have been verified.

- Store mappings

- The number of caches this store instance has been mapped to explicitly. Does not include any uses through default mappings (discussed below).

- Modes

- The modes that this cache store instance can serve.

- Supports

- The features supported by this cache store instance.

- Locking

- Locking is a mechanism that restricts access to cached data to one process at a time to prevent the data from being overwritten. The locking method determines how the lock is acquired and checked.

- Actions

- Any actions that can be performed against this cache store instance.

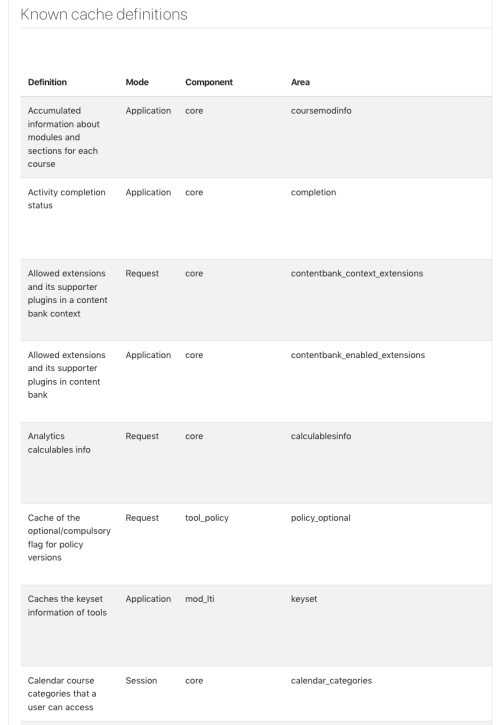

Known cache definitions

The idea of a cache definition hasn't been discussed here yet. It is something controlled by the developer. When they create a cache they can do so in two ways, the first is by creating a cache definition. This is essentially telling Moodle about the cache they've created. The second way is to create an Ad hoc cache. Developers are always encouraged to use the first method. Only caches with a definition can be mapped and further configured by the admin. Ad hoc caches will make use of default settings only.

Typically Ad hoc caches are only permitted in situations where the cache is small and configuring it beyond defaults would provide no benefit to administrators. Really it's like saying that you, the administrator, don't need to concern yourself with it.

For each cache shown here you get the following information:

- Definition

- A concise description of this cache.

- Mode

- The cache type this cache is designed for.

- Component

- The code component the cache is associated with.

- Area

- The area of code this cache is serving within the component.

- Store mappings

- The store or stores that will be used for this cache.

- Sharing

- How sharing is configured for this cache on this site.

- Actions

- Any actions that can be performed on the cache. Typically you can edit the cache store instance mappings, edit sharing, and purge the cache.

You'll also find at the bottom of this table a link titled "Rescan definitions". Clicking this link will cause Moodle to go off and check all core components and installed plugins, looking for changes in the cache definitions.

This happens by default during an upgrade, and if a new cache definition is encountered. However, should you find yourself looking for a cache that isn't there this may be worth a try.

It is also handy for developers as it allows them to quickly apply changes when working with caches. It is useful when tweaking cache definitions to find what works best.

Information on specific cache definitions can be found on the Cache definitions page.

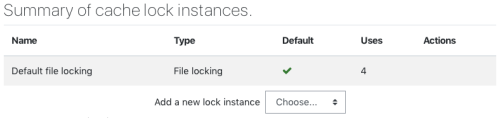

Summary of cache lock instances

As mentioned above, cache locking is an advanced concept in MUC.

The table here shows information on the configured locking mechanisms available to MUC. By default, just a single locking mechanism is available, file locking.

At present, there are no caches that make use of this and as such I won't discuss it further here.

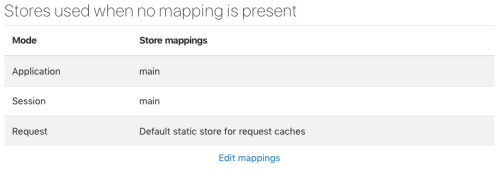

Stores used when no mapping is present

This table quickly shows which cache store instances are going to be used for cache types if there are no specific mappings in place.

To simplify that, this shows the default cache stores for each type.

At the bottom, you will notice there is a link "Edit mappings" that takes you to a page where you can configure this.

Adding cache store instances

The default configuration is going to work for all sites. However, you may be able to improve the performance of your sites by making use of various caching backends and techniques. The first thing you will want to do is add cache store instances configured to connect to/use the cache backends you've set up.

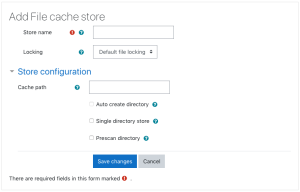

File cache

When on the cache configuration screen, within the Installed cache stores table you should see the File cache plugin. Click `Add instance` to start the process of adding a file cache store instance.

When creating a file cache there is in fact only one required parameter, the store name. The store name is used to identify the file store instance in the configuration interface and must be unique to the site. It can be anything you want, but we would advise making it something that describes your intended use of the file store.

The following properties can also be specified, customising where the file cache will be located, and how it operates.

- Cache path

- Allows you to specify a directory to use when storing cache data on the file system. Of course the user the web server is running as must have read/write access to this directory. By default (blank) the Moodledata directory will be used.

- Auto create directory

- If enabled, Moodle will create the specified directory when the cache is initialised if it does not already exist. If this is not enabled and the directory does not exist, the cache will be deemed not ready and will not be used.

- Single directory store

- By default, the file store will create a subdirectory structure to store data in. The first 3 characters of the data key will be used as a directory. This is useful in avoiding file system limits if the file system has a maximum number of files per directory. By enabling this option the file cache will not use subdirectories for storage of data. This leads to a flat structure but one that is more likely to hit file system limits. Use with care.

- Prescan directory

- One of the features the file cache provides is to prescan the storage directory when the cache is first used. This leads to faster checks of files at the expense of an in-depth read.
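The difference between the default subdirectory layout and the single directory option can be sketched like this. The `.cache` file extension, the example directory and the function name are assumptions for illustration; only the "first 3 characters of the key as a directory" rule comes from the description above.

```python
from pathlib import PurePosixPath


def cache_file_path(cache_dir, key, single_directory=False):
    """Return where an entry with the given key would live on disk."""
    base = PurePosixPath(cache_dir)
    filename = f"{key}.cache"  # assumed extension, for illustration only
    if single_directory:
        # Flat layout: every entry sits directly in the cache directory.
        return base / filename
    # Default layout: the first 3 characters of the key name a subdirectory,
    # which spreads files out and helps avoid per-directory file limits.
    return base / key[:3] / filename


nested = str(cache_file_path("/var/cache/moodle", "abcdef123"))
flat = str(cache_file_path("/var/cache/moodle", "abcdef123", single_directory=True))
```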

The file cache store is the default store used for application caches and by default, the moodledata directory gets used for the cache. File access can be a taxing resource in times of increased load and the following are some ideas about configuring alternative file stores in order to improve performance.

First up: is there a faster file system available for use on your server?

Perhaps you have an SSD installed but are not using it for your moodledata directory because space is at a premium.

You should consider creating a directory or small partition on your SSD and creating a file store to use that instead of your Moodle data directory.

Next, perhaps you don't have a faster drive available for use, but you do have plenty of free space.

Something that may be worth giving a shot would be to create a small partition on the drive you've got installed that uses a performance-orientated file system.

Many Linux installations these days, for example, use EXT4, a nice file system but one that has overheads due to the likes of journalling.

Creating a partition and using a file system that has been optimised for performance may give you that little boost you are looking for. Remember caches are designed to be volatile and choosing a file system for a cache is a different decision to choosing a file system for your server.

Finally, if you're ready to go to great lengths and have an abundance of memory on your server, you could consider creating a ramdisk/tmpfs and pointing a file store at that.

Purely based in memory, it is volatile exactly like the cache is, and file system performance just isn't going to get much better than this.

Of course, you will be limited in space and you are essentially taking that resource away from your server.

Please remember with all of these ideas that they are just ideas.

Whatever you choose, test, test, test, and be sure of the decision you make.

Memcached

You must first have a Memcached server you can access and have the Memcached PHP extension installed and enabled on your server.

There are two required parameters in configuring a Memcached store.

- Store name

- It is used to identify the store instance in the configuration interface and must be unique to the site.

- Servers

- The servers you wish this cache store to use. See below for details.

Servers should be added one per line and each line can contain 1 to 3 properties separated by colons.

- The hostname or IP address of the server (required)

- The port the server is listening on (optional)

- The weight to give this server (optional)

For example, if you had two Memcached instances running on your server, one configured for the default port, and one configured for 11212 you would use the following:

127.0.0.1

127.0.0.1:11212
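The one-server-per-line format above can be parsed along these lines. This is a sketch rather than Moodle's own parser: `parse_servers` is a hypothetical helper, 11211 is Memcached's standard port, and the default weight of 100 is an assumption made for the example. IPv6 addresses, which contain colons themselves, are not handled.

```python
DEFAULT_PORT = 11211   # Memcached's standard port
DEFAULT_WEIGHT = 100   # assumed default weight, for illustration only


def parse_servers(text):
    """Turn 'host[:port[:weight]]' lines into (host, port, weight) tuples."""
    servers = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines between entries
        parts = line.split(":")
        if len(parts) > 3:
            raise ValueError(f"too many fields in server line: {line!r}")
        host = parts[0]
        port = int(parts[1]) if len(parts) > 1 and parts[1] else DEFAULT_PORT
        weight = int(parts[2]) if len(parts) > 2 and parts[2] else DEFAULT_WEIGHT
        servers.append((host, port, weight))
    return servers


servers = parse_servers("127.0.0.1\n\n127.0.0.1:11212")
```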

There are also several optional parameters you can set when creating a Memcached store.

- Use compression

- Defaults to true, but can be disabled if you wish.

- Use serialiser

- Allows you to select which serialiser gets used when communicating with the Memcached server. By default the Memcached extension and PHP only provide one serialiser; however, there are a couple of others available for installation if you go looking for them. One, for example, is igbinary, found at https://github.com/igbinary/igbinary.

- Prefix key

- Allows you to set some characters that will be prefixed to all keys before interacting with the server.

- Hash method

- The hash method provided by the Memcached extension is used by default here. However, you can select an alternative if you wish. http://www.php.net/manual/en/memcached.constants.php provides a little information on the options available. Please note that, if you wish, you can also override the default hash function PHP uses within your php.ini.

- Buffer writes

- Disabled by default, and for good reason. Turning on buffered writes will minimise interaction with the Memcached server by buffering I/O operations. The downside is that, on a system with any concurrency, there is a good chance that multiple requests will end up generating the same data, because none of them had pushed it to the Memcached server by the time the others requested it. Enabling this can be advantageous for caches that are only accessed in capability-controlled areas, for example, where multiple interactions take a toll on network resources. But that is definitely on the extreme tweaking end of the scale.

Important implementation notes

- The Memcached extension does not provide a means of deleting a set of entries. Either a single entry is deleted, or all entries are deleted.

Because of this, it is important to note that when you purge a Memcached store within Moodle it deletes ALL entries in the Memcached server. Not just those relating to Moodle.

For that reason, it is highly recommended to use dedicated Memcached servers and to NOT configure any other software to use the same servers. Doing so may lead to performance degradation and adverse effects.

- Likewise, if you want to use Memcached for caching and for sessions in Moodle, it is essential to use two Memcached servers: one for sessions, and one for caching. Otherwise, a cache purge in Moodle will purge your sessions!

MongoDB

MongoDB is an open source, document-orientated NoSQL database. Check out their website www.mongodb.org for more information.

- Store name

- Used to identify the store instance in the configuration interface and must be unique to the site.

- Server

- This is the connection string for the server you want to use. Multiple servers can be specified using a comma-separated list.

- Database

- The name of the database to make use of.

- Username

- The username to use when making a connection.

- Password

- The password of the user being used for the connection.

- Replica set

- The name of the replica set to connect to. If this is given the master will be determined by using the ismaster database command on the seeds, so the driver may end up connecting to a server that was not even listed.

- Use safe

- If enabled the usesafe option will be used during insert, get, and remove operations. If you've specified a replica set this will be forced on anyway.

- Use safe value

- You can choose to provide a specific value for use safe. This will determine the number of servers that operations must be completed on before they are deemed to have been completed.

- Use extended keys

- If enabled full key sets will be used when working with the plugin. This isn't used internally yet but would allow you to easily search and investigate the MongoDB plugin manually if you so choose. Turning this on will add a small overhead so should only be done if you require it.

Redis

Redis is described as "The open source, in-memory data store used by millions of developers as a database, cache, streaming engine, and message broker." by the people who make it. It's an excellent caching option that can be used to store the Application and Session Caches. More info can be found on their site: https://redis.io, or if you're looking for installation instructions and more information about how it interacts with Moodle specifically, head to this wiki page: Redis cache store.

Mapping a cache to a store instance

Mapping a store instance to a cache tells Moodle to use that store instance when the cache is interacted with. This allows the Moodle administrator to control where information gets stored and, most importantly, optimise your site's performance by making the most of the resources available to your site.

To set a mapping first browse to the cache configuration screen.

Proceed to find the Known cache definitions table and within it find the cache you'd like to map.

In the actions column select the link for Edit mappings.

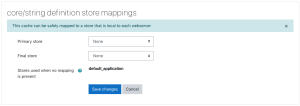

The screen you are presented with allows you to map one or more cache store instances to be used by this cache.

You are presented with several drop-downs that allow you to map one or more cache stores. All mapped stores get interacted with. The "Primary" store is the store that will be used first when interacting with the cache.

The "Final" mapped store will be the last cache store interacted with.

How this interaction occurs is documented below.

If no stores are mapped for the cache then the default stores are used. Have a look at the section below for information on changing the default stores.

If a single store instance is mapped to the cache the following occurs:

- Getting data from the cache

- Moodle asks the cache to get the data. The cache attempts to get it from the store. If the store has it, it gives it to the cache, and the cache gives it to Moodle so that it can use the data. If the store doesn't have it, then fail is returned and Moodle will have to generate the data and will most likely then send it to the cache.

- Storing data in the cache

- Moodle will ask the cache to store some data, and the cache will give it to the cache store.
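The single-store flow above (try the store, generate on a miss, then store the result) can be sketched as follows. `DictStore` is a hypothetical in-memory stand-in for a cache store, and `get_or_generate` is an illustrative helper rather than a Moodle function.

```python
MISS = object()  # sentinel returned when the store does not hold the key


class DictStore:
    """A stand-in cache store backed by a plain dict."""

    def __init__(self):
        self.data = {}

    def get(self, key):
        return self.data.get(key, MISS)

    def set(self, key, value):
        self.data[key] = value


def get_or_generate(store, key, generate):
    """Return the cached value, generating and storing it on a miss."""
    value = store.get(key)
    if value is MISS:
        value = generate()      # the expensive work the cache is meant to avoid
        store.set(key, value)   # hand the result to the store for next time
    return value


calls = []


def expensive():
    calls.append(1)
    return "generated"


store = DictStore()
first = get_or_generate(store, "course:42", expensive)   # miss, so generated
second = get_or_generate(store, "course:42", expensive)  # hit, served from the store
```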

If multiple store instances are mapped to the cache the following occurs:

- Getting data from a store

- Moodle asks the cache to get the data. The cache attempts to get it from the first store. If the first store has it, then it returns the data to the cache and the cache returns it to Moodle. If the first store doesn't have the data, then it attempts to get the data from the second store. If the second store has it, it returns it to the first store, which then stores it itself before returning it to the cache. If it doesn't, then the next store is used. This continues until either the data is found or there are no more stores to check.

- Storing data in the cache

- Moodle will ask the cache to store some data, and the cache will give it to every mapped cache store for storage.
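The multi-store behaviour described above (check stores in order, copy a hit back into the earlier stores, write to every store) can be sketched like this. `DictStore` and `LayeredCache` are hypothetical classes written for the example and do not mirror Moodle's internal class names.

```python
MISS = object()  # sentinel returned when no store holds the key


class DictStore:
    """A stand-in cache store backed by a plain dict."""

    def __init__(self):
        self.data = {}

    def get(self, key):
        return self.data.get(key, MISS)

    def set(self, key, value):
        self.data[key] = value


class LayeredCache:
    """A cache mapped to several stores, primary first and final last."""

    def __init__(self, stores):
        self.stores = list(stores)

    def get(self, key):
        for index, store in enumerate(self.stores):
            value = store.get(key)
            if value is not MISS:
                # Copy the value into every store that was checked before
                # this one, so the next lookup is served earlier.
                for earlier in self.stores[:index]:
                    earlier.set(key, value)
                return value
        return MISS

    def set(self, key, value):
        # Writes go to every mapped store.
        for store in self.stores:
            store.set(key, value)


primary, final = DictStore(), DictStore()
final.set("lang:en", "strings")           # only the final store has the entry
cache = LayeredCache([primary, final])
found = cache.get("lang:en")              # found in the final store
backfilled = primary.get("lang:en")       # and now also held by the primary
cache.set("lang:fr", "chaînes")
```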

The main advantage of assigning multiple stores is that you can introduce cache redundancy. Of course, this introduces an overhead so it should only be used when actually required. The following is an example of when mapping multiple stores can provide an advantage:

Scenario: You have a web server that has a Moodle site as well as other sites. You also have a Memcached server that is used by several sites including Moodle. Memcached has a limited size cache that, when full and requested to store more information, frees space by dropping the least used cache entries. You want to use Memcached for your Moodle site because it is fast; however, you are aware that it may introduce more cache misses because it is a heavily used Memcached server.

Solution: To get around this you map two stores to the caches for which you wish to use Memcached. You make Memcached the primary store, and you make the default file store the final cache store.

Explanation: By doing this you've created redundancy; when something is requested, Moodle first tries to get it from Memcached (the fastest store) and if it is not there it proceeds to check the file cache.

Just a couple more points of interest:

- Mapping multiple stores will introduce overhead; the more stores mapped, the more overhead.

- Consider the cache stores you are mapping to. If data remains there once set, then there is no point mapping any further stores after it. This technique is primarily valuable in situations where data is not guaranteed to remain after being set.

- Always test your configuration. Enable the display of performance information and then watch which stores get used when interacting with Moodle in such a way as to trigger the cache.

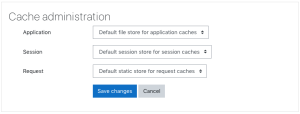

Setting the stores that get used when no mapping is present

This is really setting the default stores that get used for a cache type when there is not a specific mapping that has been made for it.

To set a mapping first browse to the cache configuration screen.

Proceed to find the Stores used when no mapping is present table.

After the table you will find an Edit mappings link. Click this.

On the screen you are presented with you can select one store for each cache type to use when a cache of the corresponding type gets initialised and there is not an explicit mapping for it.

Note that on this interface the drop downs only contain store instances that are suitable for mapping to the type.

Not all instances will necessarily be shown. If you do not see one of your store instances, it is not suitable for ALL the cache definitions that exist.

You will not be able to make that store instance the default; instead, you will need to map it explicitly to each cache that you want to use it for and that it is suitable for.
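The rule described above, that a store is offered as a default only if it suits every definition of that cache type, can be sketched as a simple filter. This is a model of the rule as stated here, not Moodle's code: the store names, definition names, and feature labels below are all hypothetical.

```python
# Hypothetical stores, definitions, and feature labels, invented for this sketch.
STORES = {
    "persistent_instance": {"modes": {"application", "session"},
                            "features": {"data_guarantee"}},
    "volatile_instance": {"modes": {"application", "session"},
                          "features": set()},
}
DEFINITIONS = [
    {"name": "example/strings", "mode": "application", "requires": set()},
    {"name": "example/mustkeep", "mode": "application",
     "requires": {"data_guarantee"}},
]


def default_candidates(stores, definitions, mode):
    """Return stores that suit ALL definitions of the given cache type."""
    required = set()
    for definition in definitions:
        if definition["mode"] == mode:
            required |= definition["requires"]
    return sorted(name for name, store in stores.items()
                  if mode in store["modes"] and required <= store["features"])


def explicit_candidates(stores, definition):
    """Return stores that suit one particular definition."""
    return sorted(name for name, store in stores.items()
                  if definition["mode"] in store["modes"]
                  and definition["requires"] <= store["features"])


defaults = default_candidates(STORES, DEFINITIONS, "application")
explicit = explicit_candidates(STORES, DEFINITIONS[0])
print(defaults)  # ['persistent_instance']
print(explicit)  # ['persistent_instance', 'volatile_instance']
```

In this model the volatile instance cannot be chosen as the default, because one definition needs a feature it lacks, yet it can still be mapped explicitly to the definition that has no such requirement.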

Configuring caching for your site

This is where it really gets tricky, and unfortunately, there is no step-by-step guide to this.

How caching can be best configured for a site comes down entirely to the site in question and the resources available to it.

What can be offered are some tips and tricks to get you thinking about things and to perhaps introduce ideas that will help you along the way.

If you are reading this document and you've learnt a thing or two about configuring caching on your site, share what you have learnt by adding to the points here.

- Plan it. It's a complex thing. Understand your site, understand your system, and really think how users will be using it all.

- If you've got a small site, the gains aren't likely to be significant; if you've got a large site, getting this right can lead to a substantial boost in performance.

- When looking at cache backends really research the advantages and disadvantages of each. Keep your site in mind when thinking about them. Depending upon your site you may find that no one cache backend is going to meet the entire needs of your site and that you will benefit from having a couple of backends at your disposal.

- Things aren't usually as simple as installing a cache backend and then using it. Pay attention to configuration and try to optimise it for your system. Test it separately and have an understanding of its performance before telling Moodle about it. The cache allows you to shift load off the database and reduce page request processing. If, for instance, you have Memcached installed but your connection has not been optimised for it, you may well find yourself in a losing situation before you even tell Moodle about the Memcached server.

- When considering your default store instances, keep in mind that they must operate with data sets of varying sizes and frequency. For a large site, your best bet is to look at each cache definition and map it to a store that is best suited to the data it includes and the frequency of access. The cache definitions are documented on the Cache definitions page.

- Again, when mapping store instances to caches, really think about the cache you are mapping and make a decision based upon what you understand of your site and what you know about the cache. The cache definitions are documented on the Cache definitions page.

- Test your configuration. If you can stress test it, even better! If you turn on performance information, Moodle will also print cache access information at the bottom of the screen. You can use this to visually check that the cache is being used as you expect, and it will give you an indication of where misses etc. are occurring.

- Keep an eye on your backend. Moodle doesn't provide a means of monitoring a cache backend, so this is something you need to watch yourself. Memcached, for instance, drops the least used data when full to make room for new entries. APC, on the other hand, stops accepting data when full. Both will hurt performance when full, because you are going to encounter misses. APC is much faster, but its behaviour when full is far worse.
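The difference between the two full-cache behaviours in the last point can be seen in a toy simulation. This is a simplified model under stated assumptions, not a benchmark of the real backends: both classes are hypothetical, the capacity is counted in entries rather than bytes, and the workload is invented to show a working set that shifts over time.

```python
from collections import OrderedDict


class EvictingStore:
    """Model of a store that drops the least recently used entry when full."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.data = OrderedDict()

    def get(self, key):
        if key in self.data:
            self.data.move_to_end(key)  # mark as most recently used
            return self.data[key]
        return None

    def set(self, key, value):
        self.data[key] = value
        self.data.move_to_end(key)
        if len(self.data) > self.capacity:
            self.data.popitem(last=False)  # evict the oldest entry
        return True


class RejectingStore:
    """Model of a store that stops accepting new entries when full."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.data = {}

    def get(self, key):
        return self.data.get(key)

    def set(self, key, value):
        if key not in self.data and len(self.data) >= self.capacity:
            return False  # full: the new entry is not stored
        self.data[key] = value
        return True


def count_misses(store, keys):
    """Request each key in turn; on a miss, try to store a value for it."""
    misses = 0
    for key in keys:
        if store.get(key) is None:
            misses += 1
            store.set(key, "value for " + key)
    return misses


# The working set shifts: early requests use old keys, later ones only new keys.
workload = ["old1", "old2", "old3"] * 2 + ["new1", "new2", "new3"] * 4

evicting_misses = count_misses(EvictingStore(3), workload)
rejecting_misses = count_misses(RejectingStore(3), workload)
print(evicting_misses, rejecting_misses)  # 6 15
```

In this model the evicting store recovers once the new keys have displaced the old ones, while the rejecting store misses on every request for a new key until something frees space. That is why a full backend of the second kind needs closer monitoring.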

More on performance testing

Two links that might be useful to anyone considering testing performance on their own servers:

- Moodle performance testing: how much more horsepower do each new versions of Moodle require?

- How to load test your Moodle server using Loadstorm

Performance advice for load-balanced web servers

- In Moodle 2.4 onwards, with load-balanced web servers, don't use the default caching option that stores the data in moodledata on a shared network drive. Use memcached instead. See Tim Hunt's article at http://tjhunt.blogspot.de/2013/05/performance-testing-moodle.html

- In Moodle 2.6 onwards make sure you set $CFG->localcachedir to some local directory in config.php (for each node). This will speed up some of the disk caching that happens outside of MUC, such as themes, javascript, libraries etc.

Troubleshooting

Have you encountered a problem, or found yourself in a conundrum? Perhaps the answer is in this section. If not, when you find an answer, please share it here.

More information

- Cache definitions Information on the cache definitions found within Moodle.

- Cache API Details of the Cache API.

- Cache API - Quick reference A short, code-focused page on the Cache API.

- The Moodle Universal Cache (MUC) The original cache specification.

Cache related forum discussions that may help in understanding MUC:

- MUC is here, now what?

- Status of MUC?

- Putting cachedir on local disks in cluster

- moodle cachestore_file

- Why using a not-completely-shared application cache will fail

- Some Cache / MUC questions

- Moodle Caching at Scale

Other:

- Moodle 2.4.5 vs. 2.5.1 performance and MUC APC cache store blog post by Justin Filip