Workshop 2.0 specification

David Mudrak (talk | contribs) m (Moved Grading before other parts of implementation plan) |

David Mudrak (talk | contribs) m (→DB tables: ERD and list of tables) |


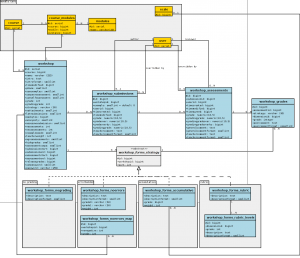


Revision as of 23:02, 6 May 2009

Note: This page is a work-in-progress. Feedback and suggested improvements are welcome. Please join the discussion on moodle.org or use the page comments.

Moodle 2.0

This page tracks and summarizes the progress of my attempt to rewrite the Workshop module for Moodle 2.0 (yes, yet another attempt).

PROJECT STATE: Planning

Introduction

The Workshop module is an advanced Moodle activity designed for peer-assessment within a structured review/feedback/grading framework. It is generally agreed that the Workshop module has huge pedagogical potential, and there is some demand for such a module from the community (including some Moodle Partners). It was originally written by Ray Kingdon and was the very first third-party module written for Moodle. For a long time it has been largely unmaintained, except for emergency fixes by various developers to keep it operational. Over the last few years, various volunteers have made several unsuccessful attempts to rewrite the module or to replace it with an alternative. At the moment it has been removed from the 2.0 development version and lives in the CONTRIB area. This document proposes a way of revitalizing the Workshop module so that it can become a standard core module again in Moodle 2.0.

Key concepts

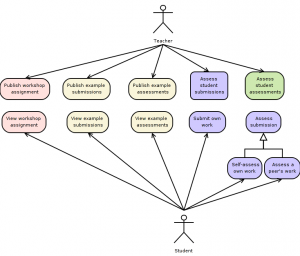

Two key features make the Workshop module unique among Moodle activities:

- Advanced grading methods - unlike the standard Assignment module, Workshop supports more structured forms of assessment/evaluation/grading. The teacher defines several aspects of the work, which are assessed separately and then aggregated. Examples include multi-criterion evaluation and rubrics.

- Peer-assessment - according to some psychology/education theories (for example Bloom's taxonomy), evaluation is one of the highest levels of cognitive operation. It implicitly requires paying attention, understanding the concepts, thinking critically, and applying knowledge to analyse the subject being evaluated. Peer-assessment fits very well into the social constructionist model of learning. In Workshop, students not only create and submit their own work (creating being the other non-trivial cognitive operation); they also take part in assessing others' submissions, give feedback and suggest a grade. The Workshop module provides some (semi)automatic ways to measure the quality of the peer-assessment and calculates a "grading grade", i.e. the grade for how well a student assessed her peers. Peer-assessment gives students the opportunity to see other work and learn from it, to formulate quality feedback that enhances learning, and to learn from the feedback of more than one person (i.e. not only the teacher).

Basic scenario of the module usage

The following scenario includes all supported workshop features and phases. Some of them are optional, e.g. the teacher may decide to disable peer-assessment completely so that the Workshop behaves similarly to the Assignment module, with the benefit of multi-criteria evaluation.

- Workshop setup - the teacher prepares the workshop assignment (e.g. an essay, research paper etc.) and sets up various aspects of the activity instance. In particular, the grading strategy (see below) has to be chosen and the assessment elements (criteria) defined.

- Examples from teacher (optional)

  - teacher publishes example submissions - eg. examples of a good work and a poor work

  - teacher publishes example assessments of the example submissions. Again, there may (and should, in fact) be an example of very well done evaluation/assessment and an example of a poor one.

  - students can try and train the process of assessment of example submissions (this part to be discussed: how would the module compute the grading grade?)

- Students work on their submissions - the typical result is a file (several files?) that can be submitted into the workshop (together with a comment maybe?). Students may upload draft version of their work before they decide it is a final submission (or there is a deadline for submissions). Other types of work are possible using the new Repository API - eg. students can prepare a view in Mahara, GoogleDoc, publish video at YouTube etc.

- Self-assessment (optional) - after the work is submitted, the student is asked to assess her own work using the selected grading strategy evaluation form

- Peer-assessment (optional) - the module randomly selects a given number of submissions to be reviewed/commented/evaluated/assessed by each student.

- Assessment by teacher (optional)

  - teacher manually evaluates submissions using the selected grading strategy evaluation form

  - teacher manually evaluates the quality of peer-assessments

- Final grade aggregation

  - generally, the final grade consists of a grade for submission and grade for assessments

  - every grading strategy defines how the final grade for submission is computed

Pedagogical use cases

Simple assignment with advanced grading strategy

- Students submit just one file as they do in Assignment module

- No peer grading

- Several dimensions (criteria) of the assessment

- Weighted mean aggregation

- if only one dimension is used, the Workshop behaves like an ordinary Assignment

Teacher does not have time to evaluate submissions

- Dozens/hundreds of students in the class, everyone submits an essay

- Every student is given a set of, say, five submissions from peers to review and evaluate

- The review process is bi-anonymous - the author does not know who reviewers are, the reviewer does not know who the author is

- The teacher randomly picks some peer-assessments and grades their quality

- The grading grade is automatically calculated according to the level of reliability. If reviewers did not reach the required level of consensus (i.e. the peer-assessments vary a lot), the teacher is notified and asked to assess the submission. The teacher's assessment is given more weight, so it (hopefully) helps to decide and calculate the final grade for the author and the grading grades for the reviewers.

- When the teacher is happy about the results, she pushes the final grades into the course Gradebook.

Presentations and performance

- Students submit their slides and give a presentation in class

- Peer feedback on submitted materials and live presentation

- Randomly assigning assessments is a motivation to pay attention and take good notes

Activity focused on work quality

- Initially, teacher uses No grading strategy to collect comments from peers

- Students submit their drafts during the Submission period and get some feedback during assessment period

- Then, teacher reverts the Workshop back into the submission phase, allows re-submissions and changes the grading strategy to a final one (eg Accumulative)

- Students submit their final versions with the comments being considered

- Peers re-assess the final versions and give them grades

- Final grades are computed using the data from the last (final) round of assessment period.

- The reverting may happen several times as needed. The allocation of reviewers does not change.

Current implementation problems

- Very old and unmaintained code - the vast majority of the code is the same as it was in Moodle 1.1. mforms are not used (accessibility). Missing modularity. No unit testing.

- Does not talk to the Gradebook - there are some patches around in forums fixing partial problems.

- User interface and usability

- Grades calculation is kind of dark magic - see this discussion. It is not easy to understand how Workshop calculates the grading grade, even if you read the code that actually does the calculation. Moreover, the whole algorithm depends on a set of strange constants whose values are neither explained nor justified. The grade calculation must be clear and easy to understand for both teacher and student. Teachers must always be able to explain why students got their final grades. Therefore the plan is to clear the fog around the grades calculation, even if it breaks backward compatibility.

- Lack of custom scales support - see this discussion

- Submitting and assessing phases may overlap - the fact that a student can start assessing while others have not yet submitted their work causes problems with balancing the assessment allocation and has to be handled by a strange "overall allocation" setting - see this discussion. IMO there is no strong educational use case explaining why this should be possible. Therefore, it is proposed to break backwards compatibility here and keep all workshop phases distinct. This will simplify switching and controlling phases as well as module set-up.

- Performance - SQL queries inside loops, no caching, no comment.

- Weights not saved in the database - currently, evaluation element weights are saved in the DB as keys into the array of supported weights. E.g. there is weight "11" in the DB and, looking at WORKSHOP_EWEIGHTS, you can see that "11" => 1.0. The new version will save both grades and weights as raw values (type double precision) so that calculations with weights are possible at the SQL level.

Project analysis

See the project analysis mindmap

Project schedule

Milestone 1

- Date: 15/05/2009

- Goal: The functional specification is in docs wiki and is reviewed and agreed by the community and Moodle HQ. The implementation plan is transferred into sub-tasks in the tracker.

Milestone 2

- Date: 15/07/2009

- Goal: All features implemented. The community is asked to test.

Milestone 3

- Date: 15/08/2009

- Goal: Major bugs fixed, upgrading from pre-2.0 versions works. The community is asked to do QA testing.

Milestone 4

- Date: 15/09/2009

- Goal: The module is moved from contrib back to the core.

User interface mockups

Implementation plan

Grading

The final grade (which is pushed into the Gradebook when the workshop is over) generally consists of two parts: the grade for submission and the grade for assessment. The default maximum values will be 80 for the grade for submission and 20 for the grade for assessment. The final grade is always the sum of these two components, giving a default maximum of 100. The maximum values for both the grade for submission and the grade for assessment are defined by the teacher in mod_form (see the mockup UI in MDL-18688).
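The arithmetic can be illustrated with a minimal sketch. It is written in Python purely for illustration (the module itself is PHP), and the function name, the parameter names and the idea of passing each component as a fraction between 0 and 1 are assumptions of this sketch, not part of the specification.

```python
def final_grade(submission_fraction, assessment_fraction,
                max_submission=80.0, max_assessment=20.0):
    """Return the final grade as the sum of the two scaled components.

    Both fractions are expected in the range 0..1 (an assumption of this
    sketch); the defaults 80 and 20 are the ones proposed in the text.
    """
    for value in (submission_fraction, assessment_fraction):
        if not 0.0 <= value <= 1.0:
            raise ValueError("fractions must lie between 0 and 1")
    grade_for_submission = submission_fraction * max_submission
    grade_for_assessment = assessment_fraction * max_assessment
    return grade_for_submission + grade_for_assessment


# A submission rated at 75% by an author who earned 60% for assessing peers.
print(round(final_grade(0.75, 0.6), 2))  # 72.0
```

With the default maxima, a perfect submission by a perfect reviewer yields 100.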

Workshop tries to compute grades automatically if possible. Teacher can always override the computed grade for submission and grade for assessment before the final grade is pushed into the Gradebook (where the grade can be overridden again).

Grading strategies

A grading strategy determines how submissions are assessed and how the grade for submission is calculated. Currently (as of Moodle 1.9), the Workshop contains the following grading strategies: No Grading, Accumulative, Error Banded, Criterion and Rubric.

My original idea was to have grading strategies as separate subplugins with their own db/install.xml, db/upgrade.php, db/access.php and lang/en_utf8/ - similar to what question/types/ have. This pluggable architecture would make it easy to implement custom grading strategies, e.g. ones specific to a national curriculum. However, Petr Škoda gave -2 to this proposal, given that the Moodle core doesn't support such subplugins right now. So, from the perspective of DB tables, capabilities and language files, grading strategies have to be part of a monolithic module architecture.

Technically, grading strategies will be classes defined in mod/workshop/grading/strategyname/strategy.php. A grading strategy class has to implement the workshop_grading_strategy interface, either directly or via inheritance.

interface workshop_grading_strategy { }

// Either implement the interface directly ...
class workshop_strategyname_strategy implements workshop_grading_strategy { }

// ... or extend a base class that implements it
abstract class workshop_base_strategy implements workshop_grading_strategy { }

class workshop_strategyname_strategy extends workshop_base_strategy { }

Grading strategy classes basically provide grading forms and calculate grades. During the current phase, four strategies will be implemented. The grading strategy of a given workshop cannot be changed once work has been submitted.

In contrast to 1.9, the number of evaluation elements/criteria/dimensions is not defined in mod_form but at the strategy level. There is no need to pre-set it; the teacher just clicks on "Add another element/criterion" and gets an empty form (AJAX welcome).

See MDL-18912 for UI mockups of grading forms.

No grading

- No grades are given by peers to the submitted work and/or assessments, just comments.

- Submissions are graded by teachers only

- There may be several aspects/evaluation elements to be commented separately.

- This may be used to practice submitting work and assessing peers' work through comment boxes (small textareas).

Accumulative

- Several (1 to N) evaluation elements/criteria

- Each can be graded using a grade (eg out of 100) or a scale (using site-wide scale or a scale defined in a course)

- The final grade is aggregated as a weighted sum.

- There is a backwards compatibility issue, as the current Workshop uses its own scales. During upgrade to 2.0, the module will create all necessary scales in the course or as standard scales (to be decided).
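As an illustration of the weighted aggregation, here is a minimal Python sketch (the module itself is PHP). It assumes that each element's grade is first divided by its maximum and that the weighted sum is then divided by the total weight, so the result is a fraction between 0 and 1. That normalisation, the function name and the tuple layout are assumptions of this sketch; a scale item is assumed to have been converted to a number already.

```python
def accumulative_grade(elements):
    """Aggregate element grades as a normalised weighted sum.

    elements: list of (grade, max_grade, weight) tuples.
    Returns a fraction between 0 and 1.
    """
    total_weight = sum(weight for _, _, weight in elements)
    if total_weight <= 0:
        raise ValueError("at least one element needs a positive weight")
    weighted = sum(weight * grade / max_grade
                   for grade, max_grade, weight in elements)
    return weighted / total_weight


# One element graded 80/100 with weight 1, one graded 3/5 with weight 2.
print(round(accumulative_grade([(80, 100, 1.0), (3, 5, 2.0)]), 4))  # 0.6667
```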

Error banded

- Several (1 to N) evaluation propositions/elements/criteria

- The student evaluates the submission by Yes/No, Present/Missing, Good/Poor etc. pairs.

- The final grade is aggregated as a weighted count of positive assessments.

- This may be used to make sure that certain criteria were addressed in article reviews.

- Examples of evaluation elements/criteria: Has less than 3 spelling errors, Has no formatting issues, Has creative ideas, Meets length requirements
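The weighted count of positive assessments can be sketched as follows. This is an illustrative Python sketch only (the module itself is PHP); the function name, the tuple layout, the example weights and the division by the total weight are assumptions made for this example.

```python
def error_banded_grade(answers):
    """Return the weighted share of criteria that were assessed positively.

    answers: list of (criterion, passed, weight) tuples.
    Returns a fraction between 0 and 1.
    """
    total_weight = sum(weight for _, _, weight in answers)
    if total_weight <= 0:
        raise ValueError("at least one criterion needs a positive weight")
    positive = sum(weight for _, passed, weight in answers if passed)
    return positive / total_weight


# The criteria come from the list above; the weights are made up.
answers = [
    ("Has less than 3 spelling errors", True, 1.0),
    ("Has no formatting issues", True, 1.0),
    ("Has creative ideas", False, 2.0),
    ("Meets length requirements", True, 1.0),
]
print(error_banded_grade(answers))  # 0.6
```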

Rubrics

- See the description of this scoring tool at Wikipedia

- Several (1 to N) evaluation categories/criteria/dimensions

- Each of them consists of a grading scale, i.e. a set of ordered propositions/levels. Every level is assigned a grade.

- The student chooses which proposition (level) answers/describes the given criterion best

- Finally the student may suggest an optional adjustment to a final grade. (this is because of backward compatibility. Is it really needed?)

- The final grade is aggregated as a weighted sum

- This may be used to assess research papers or to assess papers in which different books were critiqued.

- Example: Criterion "Overall quality of the paper", Levels "5 - An excellent paper, 3 - A mediocre paper, 0 - A weak paper" (the numbers represent the grades)

This strategy merges the current Rubrics and Criterion strategies into a single one. Conceptually, the current Criterion is just a one-dimensional Rubrics. In Workshop 1.9, Rubrics can have several evaluation criteria (categories) but are limited to a fixed scale of 0-4 points. Criterion in Workshop 1.9 may use a custom scale, but is limited to a single evaluation aspect. The new Rubrics strategy combines the two. To mimic the legacy behaviour:
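A rubric of this kind can be sketched as a small data structure plus a weighted sum. The Python below is illustrative only (the module itself is PHP). The second criterion, the weights, the function name and the normalisation by each criterion's top level are assumptions of this sketch, and the optional adjustment is left out.

```python
def rubric_grade(criteria, chosen):
    """Aggregate the chosen rubric levels as a normalised weighted sum.

    criteria: dict mapping criterion name to (weight, levels), where levels
              maps each level's grade to its description.
    chosen:   dict mapping criterion name to the grade of the chosen level.
    Assumes every criterion has a top level with a positive grade.
    """
    weighted = 0.0
    total_weight = 0.0
    for name, (weight, levels) in criteria.items():
        level_grade = chosen[name]
        if level_grade not in levels:
            raise ValueError(f"unknown level {level_grade!r} for {name!r}")
        weighted += weight * level_grade / max(levels)
        total_weight += weight
    return weighted / total_weight


criteria = {
    "Overall quality of the paper": (1.0, {
        5: "An excellent paper", 3: "A mediocre paper", 0: "A weak paper"}),
    # Hypothetical second criterion, added only to show the aggregation.
    "Use of sources": (1.0, {
        4: "Well referenced", 2: "Some references", 0: "No references"}),
}
chosen = {"Overall quality of the paper": 3, "Use of sources": 4}
print(round(rubric_grade(criteria, chosen), 2))  # 0.8
```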

- Criterion in 1.9 can be replaced by Rubrics 2.0 using just one dimension

- Rubrics in 1.9 can be replaced by Rubrics 2.0 by using point scale 0-4 for every criterion.

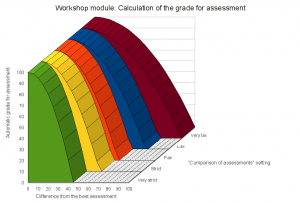

Grade for assessment

Currently implemented in workshop_grade_assessments() and workshop_compare_assessments(). Before I understood how it works, I was proposing very loudly that this part had to be reimplemented (is it just me tending to push my own solutions instead of trying to understand someone else's?). Now I think the ideas behind the not-so-pretty code are fine, and the original author seems to have real experience with these methods. I believe the current approach will be fine if we provide clear reports that help teachers and students understand why the grades were given. The following meta-algorithm describes the current implementation. It is almost identical for all grading strategies.

For all evaluation elements/criteria/aspects, calculate the arithmetic mean and sample standard deviation across all assessments made in this workshop instance. Grades given by a teacher can be given a weight (integer 0, 1, 2, 3 etc.) in the Workshop configuration. If the weight is >1, the figures are calculated as if the same grade had been given by that many reviewers. Backwards compatibility issue: currently weights are not taken into account when calculating the sample standard deviation. This is going to be changed.

Try to find the "best" assessment. For our purposes, the best assessment is the one closest to the mean, i.e. the one representing the consensus of reviewers. For each assessment, the distance from the mean is calculated in a way similar to the variance. Standard deviations very close to zero are too sensitive to small changes in the data values. Therefore, data with stdev <= 0.05 are considered equal.
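The effect of the teacher weight can be sketched by literally repeating the teacher's grade that many times before computing the statistics. This Python sketch is for illustration only (the module itself is PHP); the function and parameter names are assumptions, and it handles a single evaluation element.

```python
import statistics


def element_stats(peer_grades, teacher_grades=(), teacher_weight=1):
    """Return (mean, sample standard deviation) for one evaluation element.

    Teacher grades are counted teacher_weight times, as if that many
    reviewers had given the same grade. A weight of 0 ignores them.
    """
    if teacher_weight < 0:
        raise ValueError("teacher_weight must be a non-negative integer")
    expanded = list(peer_grades) + list(teacher_grades) * teacher_weight
    mean = statistics.mean(expanded)
    # The sample standard deviation needs at least two data points.
    stdev = statistics.stdev(expanded) if len(expanded) > 1 else 0.0
    return mean, stdev


# Three peers and one teacher grade counted twice: 60, 80, 70, 90, 90.
mean, stdev = element_stats([60, 80, 70], teacher_grades=[90], teacher_weight=2)
print(mean, round(stdev, 2))  # 78 13.04
```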

$variance = 0
for each evaluation element
    if stdev > 0.05 then $variance += ((mean - grade) * weight / stdev) ^ 2

In some situations there might be two assessments with the same variance (distance from the mean) but different grades. In this situation, the module has to warn the teacher and ask her to assess the submission (so her assessment hopefully helps to decide) or give grades for assessment manually. There is a bug in the current version linked with this situation - see MDL-18997

If there are fewer than three assessments for this submission (teacher's grades are counted weight-times), they all are considered "best". In other cases, having the "best" assessment as the reference point, we can calculate the grading grade for all peer-assessments.

The best assessment gets grading grade 100%. All other assessments are compared against the best one. The difference/distance from the best assessment is calculated as the sum of squared differences. For example, in the case of the accumulative grading strategy with all elements having the same weight:
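The selection of the "best" assessment can be sketched as follows. The Python is illustrative only (the module itself is PHP). As a simplification, the mean and standard deviation are computed here from the assessments of a single submission, and the teacher weight is left out. The function names and the data layout are assumptions of this sketch.

```python
import math
import statistics

STDEV_THRESHOLD = 0.05  # from the text: stdev <= 0.05 counts as "no spread"


def distance_from_mean(grades, means, stdevs, weights):
    """Variance-like distance of one assessment from the per-element means."""
    distance = 0.0
    for grade, mean, stdev, weight in zip(grades, means, stdevs, weights):
        if stdev > STDEV_THRESHOLD:
            distance += ((mean - grade) * weight / stdev) ** 2
    return distance


def best_assessments(assessments, weights):
    """Return the reviewers whose assessments are closest to the mean.

    assessments: dict mapping reviewer to a list of grades, one per element.
    More than one reviewer in the result signals a tie, which the teacher
    would have to resolve if the tied grades differ.
    """
    if len(assessments) < 3:
        return sorted(assessments)  # fewer than three: all are "best"
    per_element = list(zip(*assessments.values()))
    means = [statistics.mean(column) for column in per_element]
    stdevs = [statistics.stdev(column) for column in per_element]
    distances = {
        reviewer: distance_from_mean(grades, means, stdevs, weights)
        for reviewer, grades in assessments.items()
    }
    smallest = min(distances.values())
    return sorted(
        reviewer for reviewer, d in distances.items()
        if math.isclose(d, smallest, abs_tol=1e-12)
    )


print(best_assessments({"alice": [80], "bob": [70], "carol": [40]}, [1]))
# ['bob']
```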

sumdiffs += ((bestgrade - peergrade) / maxpossiblescore) ^ 2

- Example: we have the accumulative grading strategy with a single evaluation element. The element is graded as a number of points out of 100. The best assessment, representing the opinion of the majority of reviewers, was calculated as 80/100. So, if a student gave the submission 40/100, the distance from the bestgrade is sumdiffs = ((80 - 40) / 100) ^ 2 = 0.4 ^ 2 = 0.16

In the current implementation, this calculation is influenced by a setting called "comparison of assessments". The possible values are "Very strict", "Strict", "Fair", "Lax" and "Very lax". Their meaning is illustrated in the attached graph. For every level of the comparison, the factor f is defined: 5.00 - very strict, 3.00 - strict, 2.50 - fair, 1.67 - lax, 1.00 - very lax. The grade for assessment is then calculated as (again in case of all elements having the same weight):

gradinggrade = (1 - f * sumdiffs)

- Example (cont.): in case of the default "fair" comparison of assessments: gradinggrade = (1 - 2.5 * 0.16) = 60/100
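The two formulas and the worked example can be reproduced with a short Python sketch (illustrative only; the module itself is PHP). The factor values are taken from the text. The function name, the list-based arguments and the clamping of negative results to zero are assumptions of this sketch.

```python
# Factor f for every "comparison of assessments" level, as listed in the text.
COMPARISON_FACTORS = {
    "very strict": 5.00,
    "strict": 3.00,
    "fair": 2.50,
    "lax": 1.67,
    "very lax": 1.00,
}


def grading_grade(best, peer, max_scores, comparison="fair"):
    """Return the grading grade as a fraction between 0 and 1.

    best, peer and max_scores hold one value per evaluation element;
    all elements are assumed to have the same weight.
    """
    sumdiffs = sum(((b - p) / m) ** 2
                   for b, p, m in zip(best, peer, max_scores))
    grade = 1.0 - COMPARISON_FACTORS[comparison] * sumdiffs
    return max(0.0, grade)  # clamping at zero is an assumption of this sketch


# Best assessment 80/100, peer assessment 40/100, default "fair" comparison.
print(round(grading_grade([80], [40], [100]), 2))  # 0.6
```

The same pair of assessments gives 0.2 under "very strict" and 0.84 under "very lax", which shows how strongly the setting influences the result.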

I remember having difficulty finding good Czech terms for "Comparison of assessments", "Fair", "Lax" and "Strict" when I was translating the Workshop module. I am not sure how clear they are to other users, including native speakers. I propose to change the original English terms as follows:

- "Comparison of assessments" to "Required level of assessment similarity"

- "Fair" to "Normal"

- "Lax" to "Low"

- "Strict" to "High"

The structure of the code

Files, libraries, interfaces, classes, unit tests

DB tables

- workshopng

- workshopng_elements

- workshopng_rubrics

- workshopng_submissions

- workshopng_assessments

- workshopng_grades

Tables and columns used by grading strategies

My initial idea was that every grading strategy would keep its own tables to store the grading form definition. But Moodle does not support subplugins with their own DB schemas yet. Also, I realized that the tables of all strategies would be very similar to each other. So I decided to stay with the current DB layout with just some modifications.

Grading strategies use two tables to store grading form definition: workshop_elements and workshop_rubrics. The table workshop_rubrics is used by Rubrics grading strategy only. The following matrix describes which columns are used by grading strategies to store their grading forms. If the grading strategy does not use the column, its value should be NULL.

| X | X | |||||

| X | X | X | X | |||

| X | X | X | X | |||

| X | X | X | X | |||

Capabilities

File API integration

How Workshop will use the new File API.

Repository/portfolio API integration

Whether and how this will be implemented.

Out of scope

What is not a goal of this project (may be discussed later for 2.1 etc.)

- Generally, no new/other grading strategies are to be implemented in the current phase

- No new Workshop features will be implemented - remember this is a revitalization of the current behaviour, not a new module

- No group grading support - every student submits his/her individual work

- Outcomes integration - it appears to me logical to integrate Outcomes directly into the Workshop. What about an Outcomes grading strategy subplugin, so peers can assess using outcomes description and scales.

- Tim's proposal (aka "David, I had a crazy idea about the workshop module rewrite."): a way to add peer assessment to anything in Moodle, like forum posts, wiki pages, database records etc. before they are published to other students

- Petr's proposal: TurnItIn or similar service integration

To be discussed/decided

Other links and resources

- Moodle Workshop Guide by Laura M. Christensen © 2007

- MDL-17827 Workshop upgrade/conversion from 1.9 to 2.0 (META)

- Yet another attempt to rewrite Workshop for 2.0 forum thread

- New Workshop Module forum thread

- the new workshop module forum thread

- a lot of interesting feature requests

Diagrams

Credits

Many thanks to Stephan Rinke for his valuable comments and ideas.