Talk:Logging 2: Difference between revisions

Revision as of 07:16, 17 May 2013

Basically what's in here looks fine to me. I wrote a proposal, "Logging API proposal", which includes some of these changes as a single development chunk and should not cause problems for others implementing the rest of it later. The main focus of my proposal is providing the option to move logs out of the database (because the number of database writes is probably Moodle's single worst performance characteristic), but it should also help enable the other enhancements from this proposal in future.

Sam marshall 19:40, 12 February 2013 (WST)

Summary: Events/Logging API 2.6

Logging is implemented in the form of event listening by plugins. Plugins listening to the events may be:

- a generic logging plugin implementing an interface that allows other plugins to query information

- a reporting plugin that aggregates only the information it needs and stores it optimised for its own queries

Our tasks:

- The Events API must ensure that everything that may potentially be interesting to the plugins is included in the event data (but it does not query extra data). Getting the list of listeners to notify should be as fast as possible: Moodle -> Logs

- The Logging API is how plugins communicate with the generic logging systems. It might not be especially efficient, but it stores everything that happens and allows it to be retrieved: Logs -> Moodle

- We make sure that everything that is logged/evented now continues to be, and we log much more

- Create at least one plugin for generic logging

Marina Glancy 13:05, 15 May 2013 (WST)

New logging proposal

Current problems

- many actions are not logged

- some logged actions do not contain necessary information

- log storage does not scale

- hardcoded display actions, but only plugins know how to interpret data

- it is not possible to configure level of logging

- performance

Related problems

Events

Events are triggered in only a few places, mostly for write actions. The event data structure is inconsistent. Cron events are abused, stale and slow. The error states and transaction support are problematic.

Statistics

Statistics processing is relatively slow. The results are not reliable because the algorithm does not fully understand the logged data.

Possible solution

We could either improve the current system or implement a completely new logging framework. Improvements to the current log table and API cannot resolve all existing problems. A possible long-term solution may be to split the logging into several self-contained parts:

- triggering events - equivalent to current calls of add_to_log() function

- storage of information - equivalent to current hardcoded storage in log database table

- fetching of information - equivalent to reading from log table

- processing of log information - such as processing of statistics

- reporting - log and participation reports

We could solve the logging and event problems at the same time. Technically, all log actions should be accompanied by event triggers. The logging and event data are similar too.

Log and event data structures

Necessary event information:

- component - the frankenstyle component that understands the action, what changed

- action - similar to areas elsewhere

- user - who is responsible, who did it, current user usually

- context - where the event happened

- data - simple stdClass object that describes the action completely

- type - create/read/update/delete/other (CRUD)

- level - logging level 1...

- optional event data - 2.5 style event data for backwards compatibility

Optional information:

- studentid - affected student for fast filtering

- courseid - affected course

Backwards compatibility

In the future, add_to_log() calls would be ignored completely, with a debugging message. The old log table would be kept unchanged, but no new data would be added.

Old event triggers would generate legacy events.

Event triggers would be gradually updated to trigger new events with more metadata; an event may optionally contain legacy event data and a legacy event name. The legacy events would be triggered automatically after handling the new event.

The event handler definition would be fully backwards compatible; new events would be named component/action. All new events would be instant.

Cron events

The current cron event design is overcomplicated and often results in bad coding style. Cron events should be deprecated and forbidden for new events. Cron events also create performance problems.

- "Cron events are abused, stale and slow." - also the above. Please give specific examples of what you mean by this. Quiz uses cron events for a good reason (although you are welcome to suggest better ways to achieve the same results). This has never caused performance problems, and I don't recall any performance problem caused by cron events.--Tim Hunt 15:10, 17 May 2013 (WST)

Database transactions

At present there is a hack that delays events via cron if a transaction is in progress. Instead, we could buffer events in memory and wait until the DML layer notifies us that the transaction was committed or rolled back.

It is important here to minimise the event data size.
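The in-memory buffering idea could be sketched roughly as follows. This is only an illustration under assumptions: the class `event_buffer` and its methods `transaction_started()`, `transaction_committed()` and `transaction_rolled_back()` are hypothetical names standing in for whatever notification the DML layer would actually provide, and the sketch assumes that a rollback discards everything buffered so far.

```php
<?php
// Hypothetical sketch: hold events in memory while a DB transaction is open.
class event_buffer {
    /** @var int nesting depth of open transactions */
    private $depth = 0;
    /** @var array buffered (name, data) pairs waiting for the commit */
    private $pending = array();
    /** @var callable receives each event that is really dispatched */
    private $dispatcher;

    public function __construct(callable $dispatcher) {
        $this->dispatcher = $dispatcher;
    }

    public function transaction_started() {
        $this->depth++;
    }

    public function trigger($name, $data) {
        if ($this->depth > 0) {
            // A transaction is in progress: remember the event for later.
            $this->pending[] = array($name, $data);
            return;
        }
        call_user_func($this->dispatcher, $name, $data);
    }

    public function transaction_committed() {
        $this->depth = max(0, $this->depth - 1);
        if ($this->depth === 0) {
            // Outermost commit: release the buffered events in order.
            $events = $this->pending;
            $this->pending = array();
            foreach ($events as $event) {
                call_user_func($this->dispatcher, $event[0], $event[1]);
            }
        }
    }

    public function transaction_rolled_back() {
        // Assumption: a rollback cancels the whole transaction stack.
        $this->depth = 0;
        $this->pending = array();
    }
}
```

Because every buffered event stays in memory until the commit, the sketch also shows why small event payloads matter here.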

Auxiliary event data

At present we sometimes send a lot more event data than necessary, because we may need things like full course records when an event references some course. This prevents repeated fetching of data from the database.

This is bad for several reasons:

- we cannot store this information in logs - it is too big and not necessary

- the data may get stale - especially in cron, but other handlers may also modify the data (event from event), and db transactions increase the chances of stale data too

The auxiliary data should be replaced by some new cache or an extension of the DML layer (a record cache). It could be prefilled automatically or manually when triggering events.

Infinite recursion trouble

One event may modify data, which results in a new event, which in turn triggers the same event again. We need to detect this somehow and stop event processing.
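One possible way to detect such a loop is to remember which event names are currently being dispatched and refuse to re-enter them. The sketch below is only an illustration; `guarded_dispatcher`, `observe()` and `dispatch()` are hypothetical names, and skipping the nested event silently is just one of several policies (throwing an exception or logging a debugging message would be others).

```php
<?php
// Hypothetical sketch of a recursion guard for event dispatching.
class guarded_dispatcher {
    /** @var array event name => list of callables */
    private $observers = array();
    /** @var array names of events that are being dispatched right now */
    private $active = array();

    public function observe($name, callable $observer) {
        $this->observers[$name][] = $observer;
    }

    /**
     * @return bool false if the event was skipped to break a loop
     */
    public function dispatch($name, $data = null) {
        if (isset($this->active[$name])) {
            // The same event is already on the call stack: stop here.
            return false;
        }
        $this->active[$name] = true;
        try {
            $observers = isset($this->observers[$name]) ? $this->observers[$name] : array();
            foreach ($observers as $observer) {
                call_user_func($observer, $data, $this);
            }
        } finally {
            unset($this->active[$name]);
        }
        return true;
    }
}
```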

Catch all event handler

We need a '*' handler definition; this would allow log handlers to process any subset of events without core modifications.
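As a rough sketch of how a '*' definition could be resolved, the lookup below returns the handlers registered for the exact event name followed by the catch-all handlers. The function name `handlers_for_event()` and the shape of the `$definitions` array are assumptions made for this example only.

```php
<?php
// Hypothetical sketch: resolve handlers for an event, including '*' handlers.
function handlers_for_event($eventname, array $definitions) {
    // Handlers registered for exactly this event name.
    $handlers = isset($definitions[$eventname]) ? $definitions[$eventname] : array();
    // Catch-all handlers receive every event and filter it themselves.
    if (isset($definitions['*'])) {
        $handlers = array_merge($handlers, $definitions['*']);
    }
    return $handlers;
}

$definitions = array(
    'core/user:created' => array('enrol_handler'),
    '*'                 => array('log_handler'),
);
$forcreated = handlers_for_event('core/user:created', $definitions);
$forother   = handlers_for_event('mod_assign/assignment:submitted', $definitions);
```

Here `$forcreated` contains both handlers, while `$forother` contains only the catch-all log handler.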

Handler definitions

At present handlers are stored in a database table during installation and upgrade. That is no longer necessary, and we could use MUC instead. The list of all handlers will always be relatively small and should fit into RAM.

Handler definitions should contain priority.

Implementation steps

- redesign events internals while keeping bc

- new logging API

- convert log actions and event triggers

- implement log plugins

Event logger or (event and logging)

I agree with Petr's proposal to enhance the event system, but I think events and logging should be kept separate. Mixing them will force developers to pollute the event system, which might lead to a race-around problem.

With the current proposal we are not considering logging event-less situations like sending email after a forum post, debug/exception information, or cron status. They can all be achieved by triggering an event, but that would pollute the event system for use cases like:

- Logging memory usage while executing an api (create_user, view create user page)

- Logging mail contents (for forum etc.)

- Logging debug information for replicating issue (Help admin and moodle developer to fix issues)

Also, we should consider how log4php[1] and Monolog[2] are implemented. They use the concept of channelling log information, putting it into categories and writing it to different logging stores. This will help us to create a flexible system which can collect rich data for analysis, research and debugging. We should also consider logging general information to a stream/file and important information to the db, to avoid performance issues.

We should also consider following standards like PSR-3 or RFC 5424.
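To make the channel idea more concrete, here is a minimal sketch of routing by channel and severity. It does not use log4php or Monolog; `log_router`, `add_store()` and `log()` are hypothetical names for this example. The numeric values are the RFC 5424 severity codes, where a lower number means a more severe message.

```php
<?php
// RFC 5424 severity codes (lower number = more severe).
const SEVERITY_ERROR = 3;
const SEVERITY_WARNING = 4;
const SEVERITY_INFO = 6;
const SEVERITY_DEBUG = 7;

// Hypothetical sketch: send each message to every store that wants it.
class log_router {
    /** @var array list of (writer, channel, least severe level accepted) */
    private $stores = array();

    public function add_store(callable $writer, $channel, $maxseverity) {
        $this->stores[] = array($writer, $channel, $maxseverity);
    }

    /**
     * @return int number of stores the message was written to
     */
    public function log($channel, $severity, $message) {
        $written = 0;
        foreach ($this->stores as $store) {
            list($writer, $storechannel, $maxseverity) = $store;
            $channelmatches = ($storechannel === '*' || $storechannel === $channel);
            if ($channelmatches && $severity <= $maxseverity) {
                call_user_func($writer, $channel, $severity, $message);
                $written++;
            }
        }
        return $written;
    }
}
```

With such a router, a file store could be registered for '*' at `SEVERITY_DEBUG` while a db store only accepts `SEVERITY_ERROR` and above, which matches the suggestion of keeping general information in files and important information in the db.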

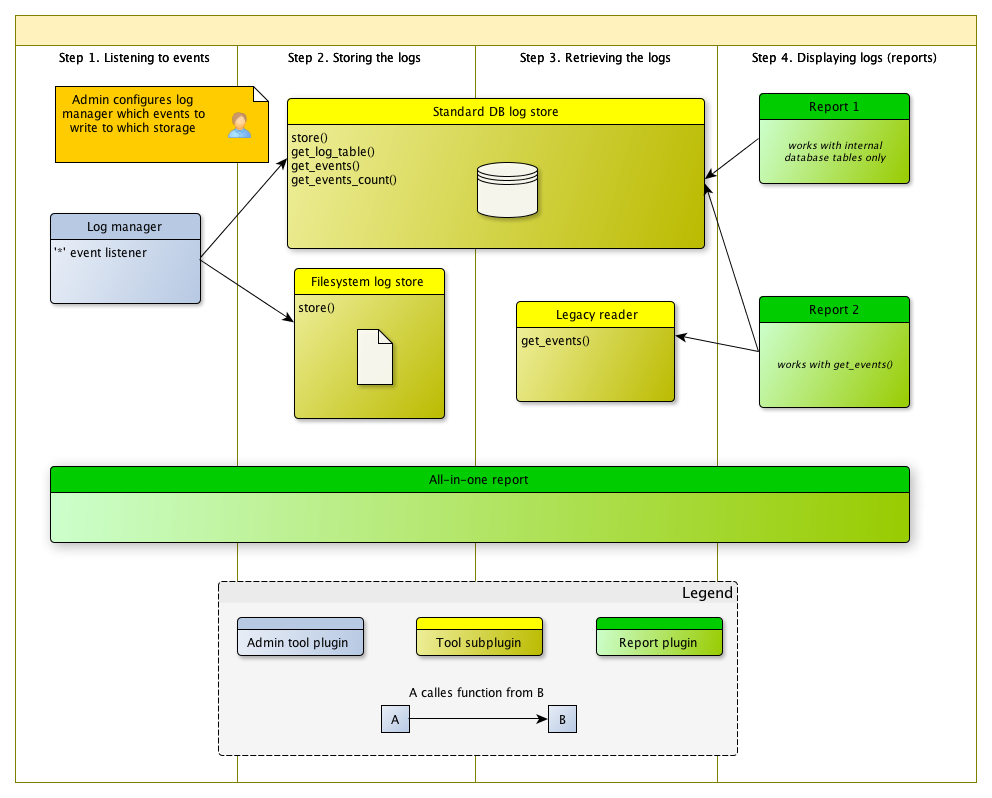

Logging plugins diagram

Configuration example:

Imagine the admin created 2 instances each of "DB log storage", "Filesystem log storage" and "Log storage with built-in driver", and called them dbstorage1, dbstorage2, filestorage1, filestorage2, universalstorage1 and universalstorage2.

DB log storage::log_storage_instances() will return:

- dbstorage1

- dbstorage2

Simple DB log driver::log_storage_instances() will return:

- dbstorage1 (Simple DB log driver)

- dbstorage2 (Simple DB log driver)

When Report1 is installed, it will query all plugins that implement both functions, log_storage_instances() and get_log_records(), then invoke log_storage_instances() on each of them and return the list for the admin to choose the data source from:

- filestorage1 (Records DB log driver)

- filestorage2 (Records DB log driver)

- dbstorage1 (Records DB log driver)

- dbstorage2 (Records DB log driver)

- filestorage1 (FS to DB log driver)

- filestorage2 (FS to DB log driver)

- universalstorage1

- universalstorage2
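The discovery step described above could look roughly like this. Only `log_storage_instances()` and `get_log_records()` come from the proposal; the function `report_data_sources()` and the idea of passing plugin objects in an array are assumptions made for the sketch.

```php
<?php
// Hypothetical sketch: collect data sources a report can read from.
function report_data_sources(array $plugins) {
    $sources = array();
    foreach ($plugins as $plugin) {
        // Only plugins that can both list instances and return records qualify.
        $canlist = method_exists($plugin, 'log_storage_instances');
        $canread = method_exists($plugin, 'get_log_records');
        if ($canlist && $canread) {
            foreach ($plugin->log_storage_instances() as $instance) {
                $sources[] = $instance;
            }
        }
    }
    return $sources;
}
```

A pure storage plugin that implements only `log_storage_instances()` is skipped, so its instances appear in the list only through a driver or through a storage with a built-in driver.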

Marina Glancy 11:01, 16 May 2013 (WST)

Minor comments

The log and event data structure that Petr proposed initially looked good to me (though it does seem to be missing date / time, which I am guessing is just an oversight). But after talking to Rajesh about querying the data, I think that I might be more in favour of some sort of flat system that doesn't require serialising the data. Adrian Greeve 09:32, 17 May 2013 (WST)

Fred's part

Logging

Data the logger should receive

This is the information that should be passed on to the logging system. For performance, it's probably better that this information arrives complete, so that no more queries have to be performed to retrieve, say, the course name. The more information we pass, the more we can store. Once this is defined, we will know what an event has to send when it's triggered.

Mandatory fields are marked with an asterisk (*).

- Event specification version number*

- Event name*

- Type*

    - Error

    - User action

    - Procedural action

    - Manual action

    - System log

- Datetime* (milliseconds?)

- Context ID*

- Category ID

- Category name

- Course ID*

- Course name

- Course module ID*

- Course module name

- Component*

    - core

    - course

    - mod_assign

    - mod_workshop

- Subject* (Defines the object on which the action is performed)

    - user

    - section

    - assignment

    - submission_phase

- Subject ID*

- Subject name (Human readable identifier of the object: Mark Johnson, Course ABC, ...)

- Subject URL (URL to notice the changes)

- Action* (The exact action that is performed on the object)

    - created

    - moved (for a course, a section, a module, a user between groups)

    - submitted (for an assignment, or a message)

    - ended (for a submission phase for instance)

- Actor/User ID (User performing the operation, or CLI, or Cron, or whatever)

- Associated object (Object associated to the subject. Ie: category of origin when a course is moved. User to whom a message is sent.)

    - section

    - category

    - user (to whom you sent a message)

- Associated object ID

- Transaction type* (CRUD)

    - create

    - read

    - update

    - delete

- Level* (Not convinced that we should use that, because it's very subjective, except if we have guidelines and only a few levels (2, max 3))

    - major

    - normal

    - minor

- Message (human readable log)

- Data (serialized data for more information)

- Current URL

We have to decide how extensively we want to provide information to external support. For example, when an assignment is submitted, we could provide the URL to the assignment. But that means that this event specifically has to receive the data, or the module has to provide a callback to generate the information based on the event data. Also, external systems cannot call the assignment callback.

In any case, not everyone is going to be happy. The more processing to get the data, the slower it gets. The less data we provide, the less information can be worked on...

Also, if we provide slots for information, but most of the events do not use them, the data becomes unusable as it is inconsistent except for the one event completing the fields.

Fields policy

We could define different levels of fields to be set depending on CRUD. For example, if an entry is deleted, we might want to log more than just the entry ID, but also its content, its name etc... surely the data field could contain most of it, but we still need to define policies.

Events

Important factors

- Processing has to be very quick

- Developers are lazy and so the function call should be as easy as possible

- Validating the data is very costly, we have to avoid that

- An event should not be defined, but triggered, for performance reasons

An event is not defined; it is fired, and that's the only moment the system knows about it. Two different components should not define the same action, for example: enrol/user:created and core/user:created. The user:created event should be triggered in the core method creating a user, once and only once.

Triggering an event

Here is a very basic and quick example of how we could trigger events: it fills in the required data and works out the rest, so that developers can quickly trigger an event without having to provide extensive information.

event_trigger('mod_assign/assignment:submitted', $assignmentid, $extradata);

/**

* Trigger an event

*

* An event name should have the following form:

* $component/$subject:$action

*

* The component is the frankenstyle name of the plugin triggering the event.

* The subject is the object on which the action is performed. If the name could be

* bound to the corresponding model/table name, that would be great.

* The action is what is happening, typically created/read/updated/deleted, but

* it could also be 'moved', 'submitted', 'loggedin', ...

*

* The parameter $subjectid is ideally the ID of the subject in its own table.

*

* @param string $name of the event

* @param string $subjectid ID of the subject of the event

* @param array|stdClass $data the data to pass on

* @param int $level of the event

* @return void

*/

function event_trigger($name, $subjectid, $data = null, $level = LEVEL_NORMAL) {

// $PAGE is Moodle's global page object; it is needed for the defaults below.

global $PAGE;

$data = (object) $data;

// For the specification 2, here are some hardcoded values.

$data->eventname = $name;

$data->version = 2;

$data->type = 'event';

// Get the component, event, subject and action.

// $name = $component/$subject:$action

// $event = $subject:$action

list($component, $event) = explode('/', $name, 2);

list($subject, $action) = explode(':', $event, 2);

$data->component = $component;

$data->subject = $subject;

$data->action = $action;

$data->subjectid = $subjectid;

$data->level = $level;

if (!isset($data->time)) {

$data->time = microtime(true);

}

if (!isset($data->contextid)) {

$data->contextid = $PAGE->get_contextid();

}

if (!isset($data->courseid)) {

$data->courseid = $PAGE->get_courseid();

}

if (!isset($data->moduleid)) {

$data->moduleid = $PAGE->get_coursemoduleid();

}

if (!isset($data->crud)) {

if ($data->action == 'created' || $data->action == 'added') {

$data->crud = 'c';

} else if ($data->action == 'read' || $data->action == 'viewed') {

$data->crud = 'r';

} else if ($data->action == 'updated' || $data->action == 'edited') {

$data->crud = 'u';

} else if ($data->action == 'deleted' || $data->action == 'removed') {

$data->crud = 'd';

} else {

throw new Exception('Crud is needed!');

}

}

// Not mandatory, but possible to be guessed.

if (!isset($data->currenturl)) {

$data->currenturl = $PAGE->get_url();

}

dispatch_event($name, $data);

}

Using such a function would:

- Force the developer to send the minimal required information via parameters

- Guess the fields such as component, action or subject from the event name (this processing is really small and easy versus the overhead of asking the dev to enter those)

- Allow for any information that can be guessed by the system to be defined in the $data parameter

- Allow any extra information to be defined in the $data parameter

Validation of event data

I don't think we should validate the information passed, as this processing is relatively expensive. But we should be strict about the fact that extra data should be set in $data->data. One way to prevent developers from abusing the stdClass properties is to recreate the object based on the allowed keys; that's probably cheap. dispatch_event() could be the right place to do that.
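Recreating the object from a list of allowed keys can be done with two array functions. The helper name `filter_event_data()` and the example key list are assumptions for this sketch; note that it only drops unknown properties and does not validate the values that remain.

```php
<?php
// Hypothetical sketch: keep only the allowed properties of the event data.
function filter_event_data($data, array $allowedkeys) {
    // array_flip() turns the key list into a lookup table for array_intersect_key().
    $clean = array_intersect_key((array) $data, array_flip($allowedkeys));
    return (object) $clean;
}

$allowed = array('eventname', 'subjectid', 'level', 'data');
$raw = (object) array(
    'eventname' => 'mod_assign/assignment:submitted',
    'subjectid' => 42,
    'sneaky'    => 'not part of the specification',
    'data'      => array('attempt' => 2),
);
$clean = filter_event_data($raw, $allowed);
```

After the call, `$clean` has the `eventname`, `subjectid` and `data` properties, and the unknown `sneaky` property is gone.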

Drop support for Cron events

An event is supposed to happen when it has been triggered, not be delayed. I think we should let the plugins handle the events the way they want, and schedule a cron job if they want to delay the processing.

Backwards compatibility

If the event naming convention changes, this could be a bit trickier to achieve. Especially to ensure that 3rd party plugins trigger and catch events defined by other 3rd party plugins.

The old method events_trigger() should:

- Be deprecated;

- Trigger the old event;

- Not trigger the new events as it's tricky to remap the data to form the new $data parameter.

The new method to trigger events should:

- Trigger the new event;

- Trigger the old-style event for non-updated observers;

- Keep the old $eventdata for old-style events.
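The behaviour of the new trigger method could be sketched like this. Everything here is hypothetical: the function `trigger_with_legacy()`, the property names `legacyeventname` and `legacyeventdata`, and the two dispatcher callables are assumptions used only to show the order of operations.

```php
<?php
// Hypothetical sketch: fire the new event, then the old-style one if present.
function trigger_with_legacy($name, $data, callable $dispatchnew, callable $dispatchlegacy) {
    // New-style observers always receive the new event first.
    call_user_func($dispatchnew, $name, $data);

    // Non-updated observers still get the old event with the old $eventdata.
    if (isset($data->legacyeventname)) {
        $legacydata = isset($data->legacyeventdata) ? $data->legacyeventdata : null;
        call_user_func($dispatchlegacy, $data->legacyeventname, $legacydata);
    }
}
```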

Core events triggered

git gr events_trigger\( | sed -E 's/.*events_trigger\(([^,]+),.*/\1/' | sort | uniq

Frédéric Massart 11:15, 17 May 2013 (WST)

Logging bulk actions.

Just an off thought that I had. Some (if not all) of the functions that are called from the events_trigger only handle single actions (e.g. user_updated). To save a lot of processing, can we make sure that multiple updates / deletions / creations are done as one? Adrian Greeve 09:32, 17 May 2013 (WST)

Why observer priorities?

As discussed yesterday, I propose that we must have priorities associated with event observers/handlers. This opens up the possibility of events being used extensively by plugins and core in future. A simple idea could be to rewrite the completion system to use the new event infrastructure instead of making its own calls to view pages etc. Here is a simple use case that shows why priorities are needed:

Consider a student called "Derp" enrolled in a course called "Fruits", which has two activities, "Bananas" and "Kiwi fruit". Derp completes the activity "Bananas", which triggers an event (say 'activity_complete') with two observers (say A and B). Observer A unlocks the other activity, "Kiwi fruit", if activity "Bananas" is completed and the user is enrolled in course "Veggies". Observer B enrols the user in course "Veggies" if activity "Bananas" is completed. Without observer priorities, observer B can be notified of the change before observer A or the other way around, leading to conflicting and undesirable results.
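The use case can be sketched in a few lines. The function `sort_observers_by_priority()`, the array layout of an observer and the rule "higher number runs first" are assumptions for this example; observers with equal priority keep their registration order.

```php
<?php
// Hypothetical sketch: order observers so that higher priorities run first.
function sort_observers_by_priority(array $observers) {
    $indexed = array();
    foreach (array_values($observers) as $i => $observer) {
        // Keep the registration index to break ties predictably.
        $indexed[] = array($observer['priority'], $i, $observer);
    }
    usort($indexed, function ($a, $b) {
        if ($a[0] !== $b[0]) {
            return ($a[0] > $b[0]) ? -1 : 1;
        }
        return ($a[1] < $b[1]) ? -1 : 1;
    });
    return array_map(function ($entry) {
        return $entry[2];
    }, $indexed);
}

$state = (object) array('enrolledinveggies' => false, 'kiwiunlocked' => false);
$observers = array(
    // Observer A: unlock "Kiwi fruit" only if the user is enrolled in "Veggies".
    array('priority' => 100, 'callback' => function ($state) {
        if ($state->enrolledinveggies) {
            $state->kiwiunlocked = true;
        }
    }),
    // Observer B: enrol the user in "Veggies".
    array('priority' => 200, 'callback' => function ($state) {
        $state->enrolledinveggies = true;
    }),
);
foreach (sort_observers_by_priority($observers) as $observer) {
    call_user_func($observer['callback'], $state);
}
```

Because B has the higher priority, it runs before A even though A was registered first, so "Kiwi fruit" ends up unlocked.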

Ankit Agarwal 10:48, 17 May 2013 (WST)

Can we support the Tin Can Experience API via a retrieval plugin/reporting tool?

This section is a work in progress. Here is an example of a Tin Can API call:

Derp attempted 'Example Activity'

{

"id": "26e45efa-f243-419e-a603-0c69783df121",

"actor": {

"name": "Derp",

"mbox": "mailto:depr@derp.com",

"objectType": "Agent"

},

"verb": {

"id": "http://adlnet.gov/expapi/verbs/attempted",

"display": {

"en-US": "attempted"

}

},

"context": {

"contextActivities": {

"category": [

{

"id": "http://corestandards.org/ELA-Literacy/CCRA/R/2",

"objectType": "Activity"

}

]

}

},

"timestamp": "2013-05-17T04:02:18.298Z",

"stored": "2013-05-17T04:02:18.298Z",

"authority": {

"account": {

"homePage": "http://cloud.scorm.com/",

"name": "anonymous"

},

"objectType": "Agent"

},

"version": "1.0.0",

"object": {

"id": "http://www.example.com/tincan/activities/aoivGYMz",

"definition": {

"name": {

"en-US": "Example Activity"

},

"description": {

"en-US": "Example activity definition"

}

},

"objectType": "Activity"

}

}

Ankit Agarwal 12:20, 17 May 2013 (WST)

Performance

I would like to see this spec propose some performance criteria at the start that can be tested during development. For example:

- How many writes / events per second should a log back-end be able to handle. (I know, it depends on the hardware.)

- On a typical Moodle page load, how much of the load time should be taken up by the add_to_log call? Can we promise that it will be less than in Moodle 2.5?

I guess that those are the two main ones, if we can find a way to quantify them. Note that performance of add_to_log on a developer machine is not an issue. The issue really comes with high load, where there might be lock contention that slows down log writes.
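For the first criterion, a throughput figure could be collected with a very small harness like the one below. The function `measure_writes_per_second()` and the idea of passing the log write as a callable are assumptions for this sketch. A single-process loop like this does not reproduce lock contention, so it would have to be run from many concurrent clients to say anything about behaviour under high load.

```php
<?php
// Hypothetical sketch: how many log writes per second does a back-end manage?
function measure_writes_per_second(callable $write, $count) {
    $start = microtime(true);
    for ($i = 0; $i < $count; $i++) {
        // $write stands in for one call to the log back-end under test.
        call_user_func($write, $i);
    }
    $elapsed = microtime(true) - $start;
    // Guard against a zero interval on very fast runs.
    return $elapsed > 0 ? $count / $elapsed : INF;
}
```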