Talk:Logging 2: Difference between revisions

| Line 538: | Line 538: | ||

=== auth/mnet/auth.php === | === auth/mnet/auth.php === | ||

<code php> | |||

$mnethostlogssql = " | |||

SELECT | |||

mhostlogs.remoteid, mhostlogs.time, mhostlogs.userid, mhostlogs.ip, | |||

mhostlogs.course, mhostlogs.module, mhostlogs.cmid, mhostlogs.action, | |||

mhostlogs.url, mhostlogs.info, mhostlogs.username, c.fullname as coursename, | |||

c.modinfo | |||

FROM | |||

( | |||

SELECT | |||

l.id as remoteid, l.time, l.userid, l.ip, l.course, l.module, l.cmid, | |||

l.action, l.url, l.info, u.username | |||

FROM | |||

{user} u | |||

INNER JOIN {log} l on l.userid = u.id | |||

WHERE | |||

u.mnethostid = ? | |||

AND l.id > ? | |||

ORDER BY remoteid ASC | |||

LIMIT 500 | |||

) mhostlogs | |||

INNER JOIN {course} c on c.id = mhostlogs.course | |||

ORDER by mhostlogs.remoteid ASC"; | |||

</code> | |||

# Function keepalive_client: A heavy SQL query is performed that joins the log table to check whether the user who logged in via mnet is still active. | # Function keepalive_client: A heavy SQL query is performed that joins the log table to check whether the user who logged in via mnet is still active. | ||

=== lib/statslib.php === | === lib/statslib.php === | ||

<code php> | |||

$sql = "INSERT INTO {stats_user_weekly} (stattype, timeend, courseid, userid, statsreads) | |||

SELECT 'logins', timeend, courseid, userid, COUNT(statsreads) | |||

FROM ( | |||

SELECT $nextstartweek AS timeend, ".SITEID." as courseid, l.userid, l.id AS statsreads | |||

FROM {log} l | |||

WHERE action = 'login' AND $logtimesql | |||

) inline_view | |||

GROUP BY timeend, courseid, userid | |||

HAVING COUNT(statsreads) > 0"; | |||

$DB->execute($sql); | |||

</code> | |||

Function stats_cron_weekly: Used to gather weekly statistics. Retrieves all 'login' actions in the log table between a certain time period and inserts them into the stats_user_weekly table. | |||

<code php> | |||

$sql = "INSERT INTO {stats_user_monthly} (stattype, timeend, courseid, userid, statsreads) | |||

SELECT 'logins', timeend, courseid, userid, COUNT(statsreads) | |||

FROM ( | |||

SELECT $nextstartmonth AS timeend, ".SITEID." as courseid, l.userid, l.id AS statsreads | |||

FROM {log} l | |||

WHERE action = 'login' AND $logtimesql | |||

) inline_view | |||

GROUP BY timeend, courseid, userid"; | |||

$DB->execute($sql); | |||

</code> | |||

Function stats_cron_monthly: Used to gather monthly statistics. Retrieves all 'login' actions in the log table between a certain time period and inserts them into the stats_user_monthly table. | |||

<code php> | |||

if ($firstlog = $DB->get_field_sql('SELECT MIN(time) FROM {log}')) { | |||

return $firstlog; | |||

} | |||

</code> | |||

Function stats_get_start_from: Returns starting date of the statistics. If there are currently no stats the variable $CFG->statsfirstrun is checked, and if it is equal to 'all', then a simple database query is performed on the log table to retrieve the lowest time recorded. | |||

<code php> | |||

$sql = 'INSERT INTO {temp_log1} (userid, course, action) | |||

SELECT userid, course, action FROM {log} | |||

WHERE time >= ? AND time < ?'; | |||

$DB->execute($sql, array($timestart, $timeend)); | |||

</code> | |||

Function stats_temp_table_fill: Fills the temporary stats tables (temp_log1 and temp_log2) with new data by performing a single select query on the log table to retrieve data from a given time range. | |||

=== lib/datalib.php === | === lib/datalib.php === | ||

| Line 551: | Line 621: | ||

These are where the functions mentioned in the section above are used as they perform the actual query on the log table. | These are where the functions mentioned in the section above are used as they perform the actual query on the log table. | ||

# Function get_logs | # Function get_logs. | ||

# Function get_logs_usercourse | # Function get_logs_usercourse. | ||

# Function get_logs_userday | # Function get_logs_userday. | ||

=== lib/deprecatedlib.php === | === lib/deprecatedlib.php === | ||

<code php> | |||

$sql = "SELECT u.id, u.firstname, u.lastname, MAX(l.time) | |||

FROM {user} u, {role_assignments} ra, {log} l | |||

WHERE l.time > ? | |||

AND l.course = ? | |||

AND l.module = 'course' | |||

AND l.action = 'enrol' | |||

AND ".$DB->sql_cast_char2int('l.info')." = u.id | |||

AND u.id = ra.userid | |||

AND ra.contextid ".get_related_contexts_string($context)." | |||

GROUP BY u.id, u.firstname, u.lastname | |||

ORDER BY MAX(l.time) ASC"; | |||

$params = array($timestart, $courseid); | |||

return $DB->get_records_sql($sql, $params); | |||

</code> | |||

Function get_recent_enrolments: Performs a query with the user, role_assignments and log table to return recent enrolments. | |||

=== lib/cronlib.php === | === lib/cronlib.php === | ||

<code php> | |||

$sql = "SELECT info, count(*) | |||

FROM {log} | |||

WHERE module = 'login' AND action = 'error' | |||

AND time > ? | |||

GROUP BY ip | |||

HAVING count(*) >= ?"; | |||

</code> | |||

Function notify_login_failures: The above query is performed twice except the group by variable changes. | |||

# Gets all the IPs with more than notifyloginthreshold failures since lastnotifyfailure and insert them into the cache_flags temp table. | |||

# Get all the INFOs with more than notifyloginthreshold failures since lastnotifyfailure and insert them into the cache_flags temp table. | |||

The following is then performed - | |||

<code php> | |||

$sql = "SELECT * FROM ( | |||

SELECT l.*, u.firstname, u.lastname | |||

FROM {log} l | |||

JOIN {cache_flags} cf ON l.ip = cf.name | |||

LEFT JOIN {user} u ON l.userid = u.id | |||

WHERE l.module = 'login' AND l.action = 'error' | |||

AND l.time > ? | |||

AND cf.flagtype = 'login_failure_by_ip' | |||

UNION ALL | |||

SELECT l.*, u.firstname, u.lastname | |||

FROM {log} l | |||

JOIN {cache_flags} cf ON l.info = cf.name | |||

LEFT JOIN {user} u ON l.userid = u.id | |||

WHERE l.module = 'login' AND l.action = 'error' | |||

AND l.time > ? | |||

AND cf.flagtype = 'login_failure_by_info') t | |||

ORDER BY t.time DESC"; | |||

$params = array($CFG->lastnotifyfailure, $CFG->lastnotifyfailure); | |||

</code> | |||

This selects all the login errors logged belonging to the IPs and infos since lastnotifyfailure that are stored in the cache_flags table. | |||

=== report/outline/index.php === | === report/outline/index.php === | ||

<code php> | |||

if (!$logstart = $DB->get_field_sql("SELECT MIN(time) FROM {log}")) { | |||

print_error('logfilenotavailable'); | |||

} | |||

</code> | |||

A simple query to return the minimum time in the log table - used to make sure there are logs to report. | |||

<code php> | |||

$sql = "SELECT cm.id, COUNT('x') AS numviews, MAX(time) AS lasttime | |||

FROM {course_modules} cm | |||

JOIN {modules} m ON m.id = cm.module | |||

JOIN {log} l ON l.cmid = cm.id | |||

WHERE cm.course = ? AND l.action LIKE 'view%' AND m.visible = 1 | |||

GROUP BY cm.id"; | |||

$views = $DB->get_records_sql($sql, array($course->id)); | |||

</code> | |||

Returns the number of views a module has had. | |||

=== report/participation/index.php === | === report/participation/index.php === | ||

<code php> | |||

$minlog = $DB->get_field_sql('SELECT min(time) FROM {log} WHERE course = ?', array($course->id)); | |||

</code> | |||

A simple query to return the minimum time in the log table - used in later query. | |||

<code php> | |||

$sql = "SELECT ra.userid, u.firstname, u.lastname, u.idnumber, l.actioncount AS count | |||

FROM (SELECT * FROM {role_assignments} WHERE contextid $relatedcontexts AND roleid = :roleid ) ra | |||

JOIN {user} u ON u.id = ra.userid | |||

LEFT JOIN ( | |||

SELECT userid, COUNT(action) AS actioncount FROM {log} WHERE cmid = :instanceid AND time > :timefrom AND $actionsql GROUP BY userid | |||

) l ON (l.userid = ra.userid)"; | |||

</code> | |||

A more complex query used to return the number of times a user has either posted or viewed a module. | |||

== Files that make use of $DB functions to query the logs == | == Files that make use of $DB functions to query the logs == | ||

Revision as of 07:23, 22 May 2013

Basically what's in here looks fine to me. I wrote a proposal Logging API proposal which includes some of these changes as a single development chunk, and should not cause problems for others implementing the rest of it later. The main focus of my proposal is providing the option to move logs out of the database (because the number of database writes is probably Moodle's single worst performance characteristic) but it should help enable the other enhancements from this proposal as well, in future.

Sam marshall 19:40, 12 February 2013 (WST)

Summary: Events/Logging API 2.6

Logging is implemented in form of event-listening by plugins. Plugins listening to the events may be:

- generic logging plugin implementing interface allowing other plugins to query information

- reporting plugin that aggregates only information that it needs and stores it optimised for its queries

Our tasks:

- Events API must ensure that everything that potentially may be interesting to the plugins is included in event data (but does not query extra data). There should be as fast as possible to get the list of listeners to notify: Moodle -> Logs

- Logging API is the form of plugins communication to the generic logging systems. It might not be extra effective but it stores and allows to retrieve everything that happens: Logs -> Moodle

- We make sure that everything that is logged/evented now continues to do so + log much more

- Create at least one plugin for generic logging

Marina Glancy 13:05, 15 May 2013 (WST)

New logging proposal

Current problems

- many actions are not logged

- some logged actions do not contain necessary information

- log storage does not scale

- hardcoded display actions, but only plugins know how to interpret data

- it is not possible to configure level of logging

- performance

Related problems

Events

Events are triggered in few places mostly for write actions only. The event data structure is inconsistent. Cron events are abused, stale and slow. The error states and transaction support is problematic.

Statisticts

Statistics processing is relatively slow. The results are not reliable becuase the algorithm does not fully understand the logged data.

Possible solution

We could either improve current system or implement a completely new logging framework. Improvements of the current log table and api cannot resolve all existing problems. Possible long term solution may be to split the logging into several self-contained parts:

- triggering events - equivalent to current calls of add_to_log() function

- storage of information - equivalent to current hardcoded storage in log database table

- fetching of information - equivalent to reading from log table

- processing of log information - such as processing of statistics

- reporting - log and participation reports

We could solve the logging and event problems at the same time. Technically all log actions should be accompanied by event triggers. The logging and event data are similar too.

Log and event data structures

Necessary event information:

- component - the frankenstyle component that understands the action, what changed

- action - similar to areas elsewhere

- user - who is responsible, who did it, current user usually

- context - where the event happened

- data - simple stdClass object that describes the action completely

- type - create/read/update/delete/other (CRUD)

- level - logging level 1...

- time when event was triggered

- optional event data - 2.5 style event data for backwards compatibility

Optional information:

- studentid - affected student for fast filtering

- courseid - affected course

Backwards compatibility

add_to_log() calls would be ignored completely with debuggin message in the future. Old log table would be kept unchnaged, but no new data would be added.

Old event triggers would generate legacy events.

Event triggers would be gradually updated to trigger new events with more meta data, event may optionally contain legacy event data and legacy even name. The legacy events would be triggered automatically after handling new event.

The event handle definition would be fully backwards compatible, new events would be named component/action. All new events would be instant.

Cron events

The current cron even design is overcomplicated and often results in bad coding style. Cron events should be deprecated and forbidden for new events. Cron events also create performance problems.

- "Cron events are abused, stale and slow." - also the above. Please give specific examples of what you mean by this. Quiz uses cron events for a good reason (although you are welcome to suggest better ways to achieve the same results). This has never caused performance problems, and I don't recall any performance problem caused by cron events.--Tim Hunt 15:10, 17 May 2013 (WST)

Database transactions

At present there is a hack that delays events via cron if transaction in progress. Instead we could create buffer for events in memory and wait until we are notified from DML layer that transaction was committed or rolled back.

It is important here to minimise the event data size.

Auxiliary event data

At present we are sometimes sending a lot more even data because we may need things like full course records when even reference some course. This prevents repeated fetching of data dromdatabase.

This is bad for several reasons:

- we can not store this onformation in logs - it is too big and not necessary

- the data may get stale - especially in cron, but also other handlers may modify the data (event from event), the db transactions increase the chances for atle data too

The auxiliary data should be replaced by some new cache or extension of dml level - record cache. It could be prefilled automatically or manually whentriggering events.

Infinite recursion trouble

One event may modify data which results in new even which triggers the same event. We detect this somehow and stop event processing.

Catch all event handler

We need a '*' handler definition, this would allow log handlers to processes any subset of events without core modifications.

Handler definitions

At present handlers are stored in database table during installation and upgrade. That is not necessary any more and we could use MUC instead. The list of all handlers will be always relatively small and should fit into RAM.

Handler definitions should contain priority.

Implementation steps

- redesign events internals while keeping bc

- new logging API

- convert log actions and event triggers

- inplement log plugins

Event logger or (event and logging)

I agree with Petr's proposal to enhance event system, but I think event and logging should be kept separate. Mixing them will force developer to pollute event system which might lead to race-around problem.

With current proposal we are not considering logging event-less situations like sending email after forum is posted, or debug/exception or cron status. They all can be achieved by triggering an event but should be pollute event system for some use-cases like:

- Logging memory usage while executing an api (create_user, view create user page)

- Logging mail contents (for forum etc.)

- Logging debug information for replicating issue (Help admin and moodle developer to fix issues)

Also, we should consider how logforphp[1] and Monolog[2] are implemented. They use concepts of channelling log information, putting them in category and write to different logging stores. This will help us to create flexible system with can collect rich data for analysis, research and debugging. Also, we should consider logging general information in stream/file and important information in db to avoid performance issues.

We should also consider following standards like PSR-3 or RFC-5424

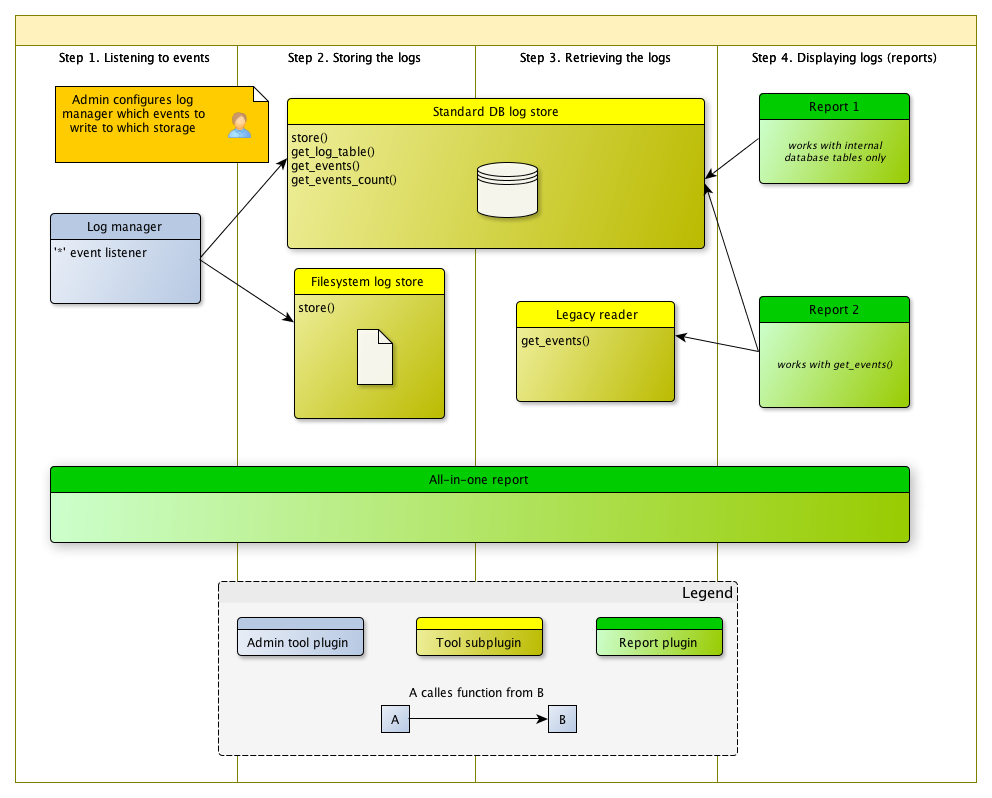

Logging plugins diagram

Configuration example:

Imagine admin created 2 instances of each "DB log storage", "Filesystem log storage" and "Log storage with built-in driver" and called them dbstorage1, dbstorage2, filestorage1, filestorage2, universalstorage1 and universalstorage2

DB log storage::log_storage_instances() will return:

- dbstorage1

- dbstorage2

Simple DB log driver::log_storage_instances() will return:

- dbstorage1 (Simple DB log driver)

- dbstorage2 (Simple DB log driver)

When Report1 is installed it will query all plugins that implement both functions: log_storage_instances() and get_log_records(), and then invoke log_storage_isntances() from each of them and return the list for admin to choose the data source from:

- filestorage1 (Records DB log driver)

- filestorage2 (Records DB log driver)

- dbstorage1 (Records DB log driver)

- dbstorage2 (Records DB log driver)

- filestorage1 (FS to DB log driver)

- filestorage2 (FS to DB log driver)

- universalstorage1

- universalstorage2

Marina Glancy 11:01, 16 May 2013 (WST)

Minor comments

The log and event data structure that Petr proposed to me initially looked good (though it does seem to be missing date / time which I am guessing is just an oversight). But after talking to Rajesh about querying the data I think that I might be more in favour of Some sort of flat system that doesn't require serialising the data. Adrian Greeve 09:32, 17 May 2013 (WST)

Fred's part

Logging

Data the logger should receive

Those are the information that should be passed on to the logging system. For performances, it's probably better that this information arrives full and that no more queries should be performed to retrieve, say, the course name. The more information we're passing, the more we can store. Once this is defined, then we will know what an event has to send when it's triggered.

Mandatory fields *

- Event specification version number*

- Event name*

- Type*

- Error

- User action

- Procedural action

- Manual action

- System log

- Datetime* (milliseconds?)

- Context ID*

- Category ID

- Category name

- Course ID*

- Course name

- Course module ID*

- Course module name

- Component*

- core

- course

- mod_assign

- mod_workshop

- Subject* (Defines the object on which the action is performed)

- user

- section

- assignment

- submission_phase

- Subject ID*

- Subject name (Human readable identifier of the object: Mark Johnson, Course ABC, ...)

- Subject URL (URL to notice the changes)

- Action* (The exact action that is performed on the object)

- created

- moved (for a course, a section, a module, a user between groups)

- submitted (for an assignment, or a message)

- ended (for a submission phase for instance)

- Actor* (user, cli, cron, ...)

- Actor ID/User ID* (ID assiacted to the actor, typically the user id)

- Real actor/user ID* (When logged in as, store the real user ID)

- Actor IP address*

- Associated object (Object associated to the subject. Ie: category of origin when a course is moved. User to whom a message is sent.)

- section

- category

- user (to whom you sent a message)

- Associated object ID

- Transaction type* (CRUD)

- create

- read

- update

- delete

- Level* (Not convinced that we should use that, because it's very subjective, except if we have guidelines and only a few levels (2, max 3))

- major

- normal

- minor

- Message (human readable log)

- Data (serialized data for more information)

- Current URL

We have to decide how extensively we want to provide information to external support. For example, when an assignment is submitted, we could provide the URL to the assignment. But that means that this event specifically has to receive the data, or the module has to provide a callback to generate the information based on the event data. Also, external systems cannot call the assignment callback.

In any case, not everyone is going to be happy. The more processing to get the data, the slower it gets. The less data we provide, the less information can be worked on...

Also, if we provide slots for information, but most of the events do not use them, the data becomes unusable as it is inconsistent except for the one event completing the fields.

Fields policy

We could define different levels of fields to be set depending on CRUD. For example, if an entry is deleted, we might want to log more than just the entry ID, but also its content, its name etc... surely the data field could contain most of it, but we still need to define policies.

Events

Important factors

- Processing has to be very quick

- Developers are lazy and so the function call should be as easy as possible

- Validating the data is very costly, we have to avoid that

- An event should not be defined, but triggered, for performance reason

An event is not defined, it is fired and that's the only moment the system knows about it. Two different components should not define the same action, for example: enrol/user:created and core/user:created. The user:created event should be triggered in the core method creating a user, once and only.

Triggering an event

Very basic and quick example of how we could now trigger the events, filling up the required data and parsing the rest to allow for the developers to quickly trigger one without having to provide extensive information.

event_trigger('mod_assign/assignment:submitted', $assignmentid, $extradata);

/**

* Trigger an event

*

* An event name should have the following form:

* $component/$subject:$action

*

* The component is the frankenstyle name of the plugin triggering the event.

* The subject is the object on which the action is performed. If the name could be

* bind the the corresponding model/tablename that would be great.

* The action is what is happening, typically created/read/updated/deleted, but

* it could also be 'moved', 'submitted', 'loggedin', ...

*

* The parameter $subjectid is ideally the ID of the subject in its own table.

*

* @param string $name of the event

* @param string $subjectid ID of the subject of the event

* @param array|stdClass of the data to pass on

* @param int $level of the event

* @return void

*/

function event_trigger($name, $subjectid, $data = null, $level = LEVEL_NORMAL) {

global $USER;

$data = (object) $data;

// For the specification 2, here are some hardcoded values.

$data->eventname = $name;

$data->version = 2;

$data->type = 'event';

// Get the component, event, action and subject.

// $name = $component/$action:$subject

// $event = $action:$subject

list($component, $event) = explode('/', $value, 2);

list($subject, $action) = explode(':', $event, 2);

$data->component = $component;

$data->subject = $subject;

$data->action = $action;

$data->subjectid = $subjectid;

$data->level = $level;

// Defaults.

if (!isset($data->time)) {

$data->time = microtime(true);

}

if (!isset($data->contextid)) {

$data->contextid = $PAGE->get_contextid();

}

if (!isset($data->courseid)) {

$data->courseid = $PAGE->get_courseid();

}

if (!isset($data->moduleid)) {

$data->courseid = $PAGE->get_coursemoduleid();

}

if (!isset($data->actor) && !isset($data->actorid)) {

$data->actor = 'user';

$data->actorid = $USER->id;

} else {

throw Exception('Actor and Actor ID must both be specified');

}

if (session_is_loggedinas()) {

$data->realuserid = session_get_realuser()->id;

}

// It would be preferrable that this data is not computed on the fly,

// but I have added that for now so that the dev doesn't have to set

// one extra parameter.

if (!isset($data->crud)) {

if ($data->action == 'created' || $data->action == 'added') {

$data->crud = 'c';

} else if ($data->action == 'read' || $data->action == 'viewed') {

$data->crud = 'r';

} else if ($data->action == 'updated' || $data->action == 'edited') {

$data->crud = 'u';

} else if ($data->action == 'deleted' || $data->action == 'removed') {

$data->crud = 'd';

} else {

throw Exception('Crud is needed!');

}

}

// Not mandatory, but possible to be guessed.

if (!isset($data->currenturl)) {

$data->currenturl = $PAGE->get_url();

}

dispatch_event($name, $data);

}

Using such a function would:

- Force the developer to send the minimal required information via parameters

- Guess the fields such as component, action or subject from the event name (this processing is really small and easy versus the overhead of asking the dev to enter those)

- Allow for any information that can be guessed by the system to be defined in the $data parameter

- Allow any extra information to be defined in the $data parameter

An alternative way would be send an event object to this method. An event object would be a class event_create() for example, in which should be set all the required variables. I didn't get in that direction because I think it's taking up a bit more memory, but also this could be more work for developers just to trigger an event.

Both ways have definitely their pros and cons.

Validation of event data

I don't think we should validate the information passed as this processing is relatively expensive. But we should be strict on the fact that extra data should be set in $data->data. A solution to prevent developers from abusing the stdClass properties, is to recreate the object based on the allowed keys, that's probably cheap. dispatch_event() could be the right place to do that.

Deprecate Cron events

An event is supposed to happen when it has been triggered, not being delayed. I think we should leave the plugins handle the events the way they want it, and schedule a cronjob is they want to delay the processing. Of course, we should still support them for a while.

Backwards compatibility

If the event naming convention changes, this could be a bit trickier to achieve. Especially to ensure that 3rd party plugins trigger and catch events defined by other 3rd party plugins.

The old method events_trigger() should:

- Be deprecated;

- Trigger the old event;

- Not trigger the new events as it's tricky to remap the data to form the new $data parameter.

The new method to trigger events should

- Trigger the new event;

- Trigger the old-style event for non-updated observers;

- Keep the old $eventdata for old-style events.

Core events triggered

git gr events_trigger\( | sed -E 's/.*events_trigger\(([^,]+),.*/\1/' | sort | uniq

Frédéric Massart 11:07, 20 May 2013 (WST)

Logging bulk actions.

Just an off thought that I had. Some (if not all) of the functions that are called from the events_trigger only handle single actions (e.g. user_updated). To save a lot of processing, can we make sure that multiple updates / deletions / creations are done as one? Adrian Greeve 09:32, 17 May 2013 (WST)

Why observer priorites?

As discussed yesterday, I propose we must have priorities associated with event observers/handlers. This leads to possiblity of events being used by plugins and core extensively in future. A simple idea could be to re-write the completion system to use the new event infrastucture instead of making its on calls to view pages etc. Here is a simple use case that shows why priorities are needed:-

Consider a student called "Derp" enrolled in a course called "Fruits" which has two activites "Bananas" and "kiwi fruit" Derp completed the activity “Bananas” which triggers a event (say 'activity_complete') with two observers (say A and B). Observer A unlocks the other activity “Kiwi fruit” if activity “Bananas” is completed and if user is enrolled in course “Veggies” Observer B enrols user in course “veggies” if activity “Bananas” is completed. Without observer priorities observer B can be notified of the change before observer A or the other way around leading to conflicting and undesirable results.

Ankit Agarwal 10:48, 17 May 2013 (WST)

Can we support Tincan Experience API via a retrieval plugin/reporting tool

Here is an example of tincan API call:-

Derp attempted 'Example Activity'

{

"id": "26e45efa-f243-419e-a603-0c69783df121",

"actor": {

"name": "Derp",

"mbox": "mailto:depr@derp.com",

"objectType": "Agent"

},

"verb": {

"id": "http://adlnet.gov/expapi/verbs/attempted",

"display": {

"en-US": "attempted"

}

},

"context": {

"contextActivities": {

"category": [

{

"id": "http://corestandards.org/ELA-Literacy/CCRA/R/2",

"objectType": "Activity"

}

]

}

},

"timestamp": "2013-05-17T04:02:18.298Z",

"stored": "2013-05-17T04:02:18.298Z",

"authority": {

"account": {

"homePage": "http://cloud.scorm.com/",

"name": "anonymous"

},

"objectType": "Agent"

},

"version": "1.0.0",

"object": {

"id": "http://www.example.com/tincan/activities/aoivGYMz",

"definition": {

"name": {

"en-US": "Example Activity"

},

"description": {

"en-US": "Example activity definition"

}

},

"objectType": "Activity"

}

}

From tincan specs, here the properties of a call:-

- UUID assigned by LRS if not set by the Activity Provider.

- actor* Who the Statement is about, as an Agent or Group Object. Represents the "I" in "I Did This".

- verb* Action of the Learner or Team Object. Represents the "Did" in "I Did This".

- object* Activity, Agent, or another statement that is the Object of the Statement.Represents the "This" in "I Did This". Note that Objects which are provided as a value for this field should include an "objectType" field. If not specified, the Object is assumed to be an Activity.

- result Result Object, further details representing a measured outcome relevant to the specified Verb.

- context Context that gives the Statement more meaning. Examples: a team the Actor is working with, altitude at which a scenario was attempted in a flight simulator.

- timestamp Timestamp (Formatted according to ISO 8601) of when the events described within this Statement occurred. If not provided, LRS should set this to the value of "stored" time.

- stored Timestamp (Formatted according to ISO 8601) of when this Statement was recorded. Set by LRS.

- authority Agent who is asserting this Statement is true. Verified by the LRS based on authentication, and set by LRS if left blank.

- version The Statement‟s associated xAPI version, formatted according to Semantic Versioning 1.0.0

- attachments Array of Headers for attachments to the Stateme attachment

Among these actor, verb and object are mandatory fields. We are recording all those in our logs. In addition the LRS (retrival plugin?) can populate rest of the fields if it wanted to. The only information that will not be stored by the default storage plugin is "stored" field.

Related links

- Official Tincan website

- List of standard verbs

- What is an LRS

- A simple tincan statment generator

- Tincan specs

Ankit Agarwal 12:20, 17 May 2013 (WST)

Performance

I would like to see this spec propose some performance criteria at that start, that can be testing during development. For example:

- How many writes / events per second should a log back-end be able to handle. (I know, it depends on the hardware.)

- On a typical Moodle page load, how much of the load time should be taken up by the add_to_log call? Can we promise that it will be less than in Moodle 2.5?

I guess that those are the two main ones, if we can find a way to quantify them. Note that performance of add_to_log on a developer machine is not an issue. The issue really comes with high load, where there might be lock contention that slows down log writes.--Tim Hunt 15:16, 17 May 2013 (WST)

Existing log reading analysis

Files that make use of get_logs*

There are core functions (get_logs, get_logs_usercourse and get_logs_userday) but other classes also contain functions with the same name which are not related to the mdl_log table. Will we will be replacing these?

Locations using or declaring the functions get_logs*

backup/util/structure/backup_nested_element.class.php

The backup process contains it's own get_logs function that does not obtain data from the Moodle log table, but it's own backup_logs table. The structure is as follows -

id bigint

backupid character varying(32)

loglevel smallint

message character varying(255)

timecreated bigint

A few things to consider. How will this integrate into the new system with the backupid? Which field would that be placed in? It also has a loglevel, is that going to be a universal log level or unique to backup? If so, which fields to store in? Are we just going to leave it as it is?

course/lib.php

This is called for the function build_logs_array which is only ever called when the user has requested to print the logs for the course via the log report (report/log). So, is not called all the time (fortunately).

lib/datalib.php

This is where the core log functions are created, but are not used here. The functions get_logs_usercourse and get_logs_userday are only ever called in the report/log/graphs.php.

mod/workshop/allocation/scheduled/lib.php

This is a stdClass unique to the workshop that is json_encoded before being saved in the database field resultlog in the table 'workshopallocation_scheduled'.

The log stdClass is as follows.

$log = new stdClass();

$log->message = $message;

$log->type = $type;

$log->indent = $indent;

report/log/graph.php

Self explanatory. This is a report based on the log records. Could potentially execute large queries but at least it is only performed when requested by user.

Files that contain usages of {log} in database queries

auth/mnet/auth.php

$mnethostlogssql = "

SELECT

mhostlogs.remoteid, mhostlogs.time, mhostlogs.userid, mhostlogs.ip,

mhostlogs.course, mhostlogs.module, mhostlogs.cmid, mhostlogs.action,

mhostlogs.url, mhostlogs.info, mhostlogs.username, c.fullname as coursename,

c.modinfo

FROM

(

SELECT

l.id as remoteid, l.time, l.userid, l.ip, l.course, l.module, l.cmid,

l.action, l.url, l.info, u.username

FROM

{user} u

INNER JOIN {log} l on l.userid = u.id

WHERE

u.mnethostid = ?

AND l.id > ?

ORDER BY remoteid ASC

LIMIT 500

) mhostlogs

INNER JOIN {course} c on c.id = mhostlogs.course

ORDER by mhostlogs.remoteid ASC";

- Function keepalive_client: A heavy SQL query is performed that joins the log table to check whether the user who logged in via mnet is still active.

lib/statslib.php

$sql = "INSERT INTO {stats_user_weekly} (stattype, timeend, courseid, userid, statsreads)

SELECT 'logins', timeend, courseid, userid, COUNT(statsreads)

FROM (

SELECT $nextstartweek AS timeend, ".SITEID." as courseid, l.userid, l.id AS statsreads

FROM {log} l

WHERE action = 'login' AND $logtimesql

) inline_view

GROUP BY timeend, courseid, userid

HAVING COUNT(statsreads) > 0";

$DB->execute($sql);

Function stats_cron_weekly: Used to gather weekly statistics. Retrieves all 'login' actions in the log table between a certain time period and inserts them into the stats_user_weekly table.

$sql = "INSERT INTO {stats_user_monthly} (stattype, timeend, courseid, userid, statsreads)

SELECT 'logins', timeend, courseid, userid, COUNT(statsreads)

FROM (

SELECT $nextstartmonth AS timeend, ".SITEID." as courseid, l.userid, l.id AS statsreads

FROM {log} l

WHERE action = 'login' AND $logtimesql

) inline_view

GROUP BY timeend, courseid, userid";

$DB->execute($sql);

Function stats_cron_monthly: Used to gather monthly statistics. Retrieves all 'login' actions in the log table between a certain time period and inserts them into the stats_user_monthly table.

if ($firstlog = $DB->get_field_sql('SELECT MIN(time) FROM {log}')) {

return $firstlog;

}

Function stats_get_start_from: Returns starting date of the statistics. If there are currently no stats the variable $CFG->statsfirstrun is checked, and if it is equal to 'all', then a simple database query is performed on the log table to retrieve the lowest time recorded.

$sql = 'INSERT INTO {temp_log1} (userid, course, action)

SELECT userid, course, action FROM {log}

WHERE time >= ? AND time < ?';

$DB->execute($sql, array($timestart, $timeend));

Function stats_temp_table_fill: Fills the temporary stats tables (temp_log1 and temp_log2) with new data by performing a single select query on the log table to retrieve data from a given time range.

lib/datalib.php

These are where the functions mentioned in the section above are used as they perform the actual query on the log table.

- Function get_logs.

- Function get_logs_usercourse.

- Function get_logs_userday.

lib/deprecatedlib.php

$sql = "SELECT u.id, u.firstname, u.lastname, MAX(l.time)

FROM {user} u, {role_assignments} ra, {log} l

WHERE l.time > ?

AND l.course = ?

AND l.module = 'course'

AND l.action = 'enrol'

AND ".$DB->sql_cast_char2int('l.info')." = u.id

AND u.id = ra.userid

AND ra.contextid ".get_related_contexts_string($context)."

GROUP BY u.id, u.firstname, u.lastname

ORDER BY MAX(l.time) ASC";

$params = array($timestart, $courseid);

return $DB->get_records_sql($sql, $params);

Function get_recent_enrolments: Performs a query with the user, role_assignments and log table to return recent enrolments.

lib/cronlib.php

$sql = "SELECT info, count(*)

FROM {log}

WHERE module = 'login' AND action = 'error'

AND time > ?

GROUP BY ip

HAVING count(*) >= ?";

Function notify_login_failures: The above query is performed twice except the group by variable changes.

- Gets all the IPs with more than notifyloginthreshold failures since lastnotifyfailure and insert them into the cache_flags temp table.

- Get all the INFOs with more than notifyloginthreshold failures since lastnotifyfailure and insert them into the cache_flags temp table.

The following is then performed -

$sql = "SELECT * FROM (

SELECT l.*, u.firstname, u.lastname

FROM {log} l

JOIN {cache_flags} cf ON l.ip = cf.name

LEFT JOIN {user} u ON l.userid = u.id

WHERE l.module = 'login' AND l.action = 'error'

AND l.time > ?

AND cf.flagtype = 'login_failure_by_ip'

UNION ALL

SELECT l.*, u.firstname, u.lastname

FROM {log} l

JOIN {cache_flags} cf ON l.info = cf.name

LEFT JOIN {user} u ON l.userid = u.id

WHERE l.module = 'login' AND l.action = 'error'

AND l.time > ?

AND cf.flagtype = 'login_failure_by_info') t

ORDER BY t.time DESC";

$params = array($CFG->lastnotifyfailure, $CFG->lastnotifyfailure);

This selects all the login errors logged belonging to the IPs and infos since lastnotifyfailure that are stored in the cache_flags table.

report/outline/index.php

if (!$logstart = $DB->get_field_sql("SELECT MIN(time) FROM {log}")) {

print_error('logfilenotavailable');

}

A simple query to return the minimum time in the log table - used to make sure there are logs to report.

$sql = "SELECT cm.id, COUNT('x') AS numviews, MAX(time) AS lasttime

FROM {course_modules} cm

JOIN {modules} m ON m.id = cm.module

JOIN {log} l ON l.cmid = cm.id

WHERE cm.course = ? AND l.action LIKE 'view%' AND m.visible = 1

GROUP BY cm.id";

$views = $DB->get_records_sql($sql, array($course->id));

Returns the number of views a module has had.

report/participation/index.php

$minlog = $DB->get_field_sql('SELECT min(time) FROM {log} WHERE course = ?', array($course->id));

A simple query to return the minimum time in the log table - used in later query.

$sql = "SELECT ra.userid, u.firstname, u.lastname, u.idnumber, l.actioncount AS count

FROM (SELECT * FROM {role_assignments} WHERE contextid $relatedcontexts AND roleid = :roleid ) ra

JOIN {user} u ON u.id = ra.userid

LEFT JOIN (

SELECT userid, COUNT(action) AS actioncount FROM {log} WHERE cmid = :instanceid AND time > :timefrom AND $actionsql GROUP BY userid

) l ON (l.userid = ra.userid)";

A more complex query used to return the number of times a user has either posted or viewed a module.

Files that make use of $DB functions to query the logs

I am ignoring the usages of log_display - based on what Petr said I assume we can just get rid of this completely

admin/handlevirus.php

$log = $DB->get_record("log", array("module"=>"upload", "info"=>$file, "action"=>"upload")

A simple check to see if the file was recorded as being uploaded.

backup/util/helper/backup_cron_helper.class.php

$logexists = $DB->record_exists_select('log', $sqlwhere, $params)

This query is performed twice in this file.

blocks/recent_activity/block_recent_activity.php

$logs = $DB->get_records_select('log',

"time > ? AND course = ? AND

module = 'course' AND

(action = 'add mod' OR action = 'update mod' OR action = 'delete mod')",

array($timestart, $course->id), "id ASC");

Returns list of recent changes in course structure in the function get_structural_changes(). Used only within this block.

course/lib.php

$params = array('userid'=>$USER->id, 'url'=>"view.php?id=$courseid", 'since'=>$since);

$select = "module = 'course' AND action = 'new' AND userid = :userid AND url = :url AND time > :since";

return $DB->record_exists_select('log', $select, $params);

This is used in the function can_delete_course() if the user does not have the capability moodle/course:delete but has the capability moodle/course:create. The above checks when the user created the course, and if it was less than a day ago they can delete it.

lib/datalib.php

if (is_siteadmin()) {

if ($count->attempts = $DB->count_records_select('log', $select, $params)) {

$count->accounts = $DB->count_records_select('log', $select, $params, 'COUNT(DISTINCT info)');

return $count;

}

} else if ($mode == 'everybody') {

if ($count->attempts = $DB->count_records_select('log', "$select AND info = :username", $params)) {

return $count;

}

}

This code is located in the function count_login_failures() and is used by renderers (both lib/outputrenderers.php and mymobile/renderers.php).

lib/deprecatedlib.php

$logs = $DB->get_records_select('log', "time > ? AND course = ? AND

module = 'course' AND

(action = 'add mod' OR action = 'update mod' OR action = 'delete mod')",

array($timestart, $course->id), "id ASC");

This is located in the function print_recent_activity().

lib/uploadlib.php

if (!$record = $DB->get_record('log', array('info'=>$oldpath, 'module'=>'upload'))) {

return false;

}

Used in the function clam_change_log(). This function does not seem to be used anywhere within Moodle.

mod/book/lib.php

$logs = $DB->get_records('log', array('userid'=>$user->id, 'module'=>'book',

'action'=>'view', 'info'=>$folder->id), 'time ASC')

- Used in the function book_user_outline().

mod/folder/lib.php

$logs = $DB->get_records('log', array('userid'=>$user->id, 'module'=>'folder',

'action'=>'view', 'info'=>$folder->id), 'time ASC')

- Used in the function folder_user_outline().

- Used in the function folder_user_complete().

mod/imscp/lib.php

$logs = $DB->get_records('log', array('userid'=>$user->id, 'module'=>'imscp',

'action'=>'view', 'info'=>$folder->id), 'time ASC')

- Used in the function imscp_user_outline().

- Used in the function imscp_user_complete().

mod/page/lib.php

$logs = $DB->get_records('log', array('userid'=>$user->id, 'module'=>'page',

'action'=>'view', 'info'=>$folder->id), 'time ASC')

- Used in the function page_user_outline().

- Used in the function page_user_complete().

mod/resource/lib.php

$logs = $DB->get_records('log', array('userid'=>$user->id, 'module'=>'resource',

'action'=>'view', 'info'=>$folder->id), 'time ASC')

- Used in the function resource_user_outline().

- Used in the function resource_user_complete().

mod/url/lib.php

$logs = $DB->get_records('log', array('userid'=>$user->id, 'module'=>'url',

'action'=>'view', 'info'=>$folder->id), 'time ASC')

- Used in the function url_user_outline().

- Used in the function url_user_complete().

Existing log writing analysis

add_to_log

- course id

- module name (forum, journal, resource, course, user etc)

- action (view, update, add, delete, possibly followed by another word to clarify)

- url (file and parameters used to see the results of the action)

- info (additional description information)

- cm (course module ID)

- user (if the user is different to $USER)

If the user is logged in as someone else than no logs are created.

If $CFG->logguests is set and false then no logs are created.

Multiple checks are done on the different fields to ensure that the data is ok for inserting.

try catch for inserting the record (insert_record_raw - for no checking, insert into the database as quickly as possible)

if there is an error a debugging message is sent. if $CFG->supportemail is true and $CFG->noemailever is not set then an email is sent with a limit of one email a day.

info section Some logs have:

- written information

- just a number

- user ID

- course ID

- question type

Action contains:

- action using get string

- Straight English

- nothing at all.

Some sections don't log anything eg bulk upload of users via csv.

See the function add_to_log for further details. lib/datalib.php

user_accesstime_log

- course id

no log created if the user isn't logged in, is logged in as someone else, or is a guest user.

updates the user table with (update_record_raw - see above) and changes $USER->lastaccess at the same time.

user ID Last ip address last access time

Checks made on user_lastaccess table and inserts / updates are made as appropriate.

See the function user_accesstime_log for further details. lib/datalib.php

Other logs

Only one insert into the config_log table (lib/adminlib.php) - lib function config_write (30 calls in 4 files) log contains:

- user ID

- time modified

- plugin

- name

- value

- old value

log_trasfer called three times

two direct inserts into the portfolio log (lib/portfolio/exporter.php) - lib functions log_transfer (3 calls in 3 files) log contains:

- user ID

- portfolio

- caller file

- caller component

- caller sha1

- caller class

- continue url

- return url

- temp data ID

- time

upgrade_log (12 calls in 1 file)

log contains:

- type

- plugin

- version

- target version

- info

- details

- backtrace

- userid

- timemodified

Adrian Greeve 09:47, 20 May 2013 (WST)

Object-oriented model of events

This is the proposal for object-oriented events:

- All events descriptions are objects extending event_base (which is defined in core)

- Object class name is unique identifier of the event

- Class name is pluginfullname_event_xxx. Core events will have prefix 'moodle'

- Plugins store each event object in plugindir/classes/event/xxx.php (Petr is considering autoloading and/or namespaces)

- event_base also implements cacheable_object

Event object has:

- the protected properties that Fred is working on, also public getter methods for each of them

- properties 'legacyname' and 'legacydata'

- Two constructors (or static methods creating an instance):

- to create event that is about to be triggered with autofilling constant and/or default data for this particular event

- to restore event object after it has been stored in log. No default attributes here, it may even be declared final

- Function to trigger event. Also static method that creates an instance and triggers event - for lazy developers.

- Static function returning human readable event name, preferably using get_sting (lang_string)

- Static function returning human readable event description

- Function returning human readable event contents (to be used on events restored from log)

- Function checking if current user is able to see this event (to be used on events restored from log)

Yes, this is slightly slower from performance point of view than passing an array/stdClass with data. But the difference is not so big and such structure is easier to understand and implement for developers and at the same time solves a lot of problems when displaying logs. Only constructor and trigger functions are used when event is actually triggered. Everything else might or might not be used when displaying the logs.

Backward compartibility:

- Function events_trigger() will create and trigger an instance of event_legacy class

- For events that already exist in Moodle 2.5 the additional legacy information should be added to the event data (in properties 'legacyname' and 'legacydata')

Event handlers:

- Event handlers can be described as it is done now in plugindir/db/events.php, this file is parsed during install/upgrade of plugin and all handlers are removed on uninstall

- It is possible to subscribe to all events (*)

- Event handlers can also have an attribute 'sortorder' (positive or negative, default 0). At the same time admin can overwrite the handlers sequence

- If event handler refers to the old (2.5) name of event, it will be used only for events that contain legacy name and data. If it refers to 2.6 event class name it will be used with full data

- Core contains class events_manager and function get_events_manager() that returns a single instance of it. This class is responsible for retrieving/updating information about event handlers, processing event triggers, managing the events queue, processing events in cron.

- events_manager has methods to dynamically register/unregister handlers but they are not recommended (same as it is with dynamic caches definitions). Ideally they should only be used in unit and/or behat tests

How to store and retrieve the list of handlers is the most vulnerable part for performance. AFAIK Petr already has ideas about it.

And I want to emphasize again (also Tim raised this point again), that performance of storing and retrieving of the events data in log tables is the responsibility of plugins and not core. We will include in core a plugin that repeats the 2.5 functionality and stores events with legacy data in table {log}. Also we will develop three plugins:

- events dispatcher that allows to configure the filter which events to store in log

- DB log storage with auto-archiving of the old data

- driver for DB log storage that given the time range and other filters returns the list of events from DB log storage.

At the same time we will rewrite all reports that use table {log} to the new engine and move the functions that use {log} table to the legacy log plugin.

abstract class event_base {

// ... constants and all properties as protected variables

protected final function __construct() {}

protected function __construct($args);

public static final function create($args) {

return new self($args);

}

public static final funciton restore($object) {

$event = new self();

// .. restore each property from $object to $event

}

public static final function create_and_trigger($args) {

$event = self::create($args);

$event->trigger();

}

public final function trigger() {

// ...

}

public static function event_name();

public static function event_description();

public function can_view($user = null);

public function event_data();

}

Assuming we have class mod_myplugin_event_something_happened extends event_base, to trigger event we may use:

mod_myplugin_event_something_happened::create_and_trigger(array('courseid' => XXX, 'userid' => YYY, 'data' => ZZZ));

or

$event = mod_myplugin_event_something_happened::create(array('courseid' => XXX, 'userid' => YYY, 'data' => ZZZ));

// ... more code that may delete or modify the entities used when creating an event object

$event->trigger();

Marina Glancy 11:08, 20 May 2013 (WST)

Public standards compliance

According to PHP-FIG (PHP Framework Interop Group), we should try implement LoggerInterface. It is not important for us to implement this, but as they follow RFC standards and supported by open source community like symphony, it will be nice to consider.

To implement standard logging protocols like RFC 5424 or RFC-3164, we should have following information in event:

- $type: The category to which this message belongs.

- $message: The message to store in the log.

- $variables: Array of variables to replace in the message on display or NULL if message is already translated or not possible to translate.

- $severity: The severity of the message; one of the following values as defined in RFC 3164:

- EMERGENCY: Emergency, system is unusable.

- ALERT: Alert, action must be taken immediately.

- CRITICAL: Critical conditions.

- ERROR: Error conditions.

- WARNING: Warning conditions.

- NOTICE: (default) Normal but significant conditions.

- INFO: Informational messages.

- DEBUG: Debug-level messages.

- $timestamp: Time when event happened.

--Rajesh Taneja 11:13, 20 May 2013 (WST)