Overview of the Moodle question engine

This page summarises how the new question engine works.

Previous section: Design

Note: This page is a work-in-progress. Feedback and suggested improvements are welcome. Please join the discussion on moodle.org or use the page comments.

What does a question engine have to do?

We are designing a question engine for online assessment. Clearly, we have questions, but we don't need to worry about them much here: they come from the question bank, and we can take them as given.

We are really interested in what happens when a student attempts some questions (for example, when they attempt a quiz). We can handle this by thinking about the student's attempt at each question separately, and then aggregating those separate question attempts into the set that makes up the student's attempt at a particular activity.

For each question attempt, we need some notion of what state it is currently in (in progress, completed correctly, ...), whether it has been graded and, if so, what mark was awarded. There may also be some state private to the question that is fixed when the attempt is started. For example, the calculated question assigns random values to each variable when the question is attempted, and the choices for a multiple choice question may be shuffled.

Given the current state of a question, we need to be able to display it in HTML. The question may need to be displayed in various ways. For example, perhaps students are not allowed to see the information about the grades awarded yet, but if a teacher reviews it they should see the grades. Teachers may also need to see other bits of interface, like edit links. There may be one or more questions displayed embedded in a particular HTML page, for example a page of a quiz attempt.

Finally, the question needs to move from one state to another. There are two ways that can happen. First, the student may have interacted with some questions that were displayed as part of an HTML page, and then submitted that page. That is, the question engine needs to process data submitted as part of an HTTP POST request directly. Second, the student may have performed some other action (for example, clicked the Start attempt or Submit all and finish buttons in the quiz), and as a result, some other part of the Moodle code may wish to cause some questions to change state.

We need to consider not only the current state of each question, but also a record of the whole history of what happened. For example, a teacher may wish to review everything the student did. Or, when we regrade an interactive question, we need to play back the entire sequence of what the student did.

To be flexible, the question engine needs to cope with any question-type plug-in. It also needs to be flexible in the ways questions behave. For example, some questions can only be graded manually by a teacher. Automatically graded questions can be configured to behave in different ways: for example interactively, with immediate feedback and multiple tries during a single question attempt, or with deferred feedback, where the questions are only graded when the student chooses Submit all and finish.

The state of a question

As noted above, a key issue is representing the state of a question that is being attempted and, indeed, the complete history of the states it has passed through. This is the responsibility of the question_attempt class. Because we need that complete history, a question_attempt largely consists of a list of question_attempt_steps.

Attempt steps

A question_attempt_step has the following fields:

- state: The state the question is in at this step. This will be a question_state like question_state::$correct.

- fraction: The score the student has got for this question so far. This starts off null, and changes later. See the note about scores below.

- timecreated: The timestamp of the action that created this state.

- userid: The user whose action created this state. During the attempt, this will be the id of the user who attempted the question. If a teacher later grades the finished question manually, it will be the id of the teacher.

In addition to these fields, each step has an associative array (that is, name => value pairs) of extra data. We noted above that the most common cause of a question moving from one state to another is an HTTP POST request. A POST request basically comprises a set of name => value pairs. For a typical step, the data array will hold those parts of the POST data that belong to this question.
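As a rough illustration, here is a minimal Python sketch (not Moodle's PHP code) of picking out the name => value pairs that belong to one question from a submitted page. The field-name prefix `q<usage id>:<slot>_`, the helper name `extract_question_data`, and the sample field names are all assumptions made for this example.

```python
def extract_question_data(post_data, usage_id, slot):
    """Return the submitted name => value pairs that belong to one question.

    Assumes (for illustration only) that every form field of a question is
    named with a per-question prefix, so several questions can share a page.
    """
    prefix = f"q{usage_id}:{slot}_"
    return {
        name[len(prefix):]: value
        for name, value in post_data.items()
        if name.startswith(prefix)
    }


# A page containing two questions from usage 7, plus an unrelated form field.
post_data = {
    "q7:1_answer": "42",
    "q7:2_answer": "frog",
    "q7:2_-submit": "1",
    "sesskey": "abc123",
}
question_two_data = extract_question_data(post_data, usage_id=7, slot=2)
```

Under these assumptions, `question_two_data` holds only the two fields submitted for the question in slot 2, with the prefix stripped. That dictionary is what would be stored as the step's data array.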

Not all state changes are caused by a POST request. Some are caused by API calls like $quba->finish_question(...). Internally, all these API calls are handled by converting them to special sets of name => value pairs. This means that every action is represented in the same way, which makes it much easier to implement processing like re-grading. Internally, every change of state is caused by a call to the $questionattempt->process_action($dataarray, ...) method. As an example, $quba->finish_question(...) gets handled by calling $questionattempt->process_action(array('!finish' => 1)).
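The benefit of routing everything through one method can be sketched in a few lines of Python. This is a toy model, not the real PHP classes: the class names `Step` and `QuestionAttempt`, the `finish` and `replay` helpers, and the sample data are assumptions, and no grading logic is modelled.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Step:
    """One entry in the history: what was submitted, by whom, and when."""
    data: dict
    userid: int
    timecreated: int


@dataclass
class QuestionAttempt:
    """Toy model: an attempt is mainly a list of steps."""
    steps: list = field(default_factory=list)

    def process_action(self, data, userid, timecreated=None):
        # Every change goes through here, whatever triggered it.
        if timecreated is None:
            timecreated = int(time.time())
        self.steps.append(Step(dict(data), userid, timecreated))

    def finish(self, userid, timecreated=None):
        # An API call is converted to a special name => value pair.
        self.process_action({"!finish": 1}, userid, timecreated)


def replay(attempt):
    """Rebuild an attempt by feeding the recorded actions through again,
    which is the basic idea behind re-grading."""
    fresh = QuestionAttempt()
    for step in attempt.steps:
        fresh.process_action(step.data, step.userid, step.timecreated)
    return fresh


qa = QuestionAttempt()
qa.process_action({"answer": "frog"}, userid=5, timecreated=1000)  # from a POST
qa.finish(userid=5, timecreated=1060)                              # from an API call
regraded = replay(qa)
```

Because the finish action is stored as ordinary step data, `replay` needs no special case for it: the rebuilt attempt has the same two steps as the original.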

A note about scores

The question engine stores scores in two different ways.

Normally, each question in, say, a quiz attempt will be worth a certain number of marks. For example, question 1 may be worth 5 marks, question 2 worth 3 marks, and so on.

However, sometimes these marks have to change. For example, perhaps question 2 is now thought to be unsound, and so we want to change the scoring of the quiz so that the question is worth zero marks. But later we may reconsider, and want to set it back to being worth 3 marks.

In order to make it easy to handle this sort of change, almost all scores are internally stored on a scale of 0 to 1. Then there is a field $questionattempt->maxmark. To get the actual mark for a question you need to compute $fraction * $questionattempt->maxmark.

In the code, things called fraction are always on the 0 to 1 scale; things called mark are always on the scale of fraction * maxmark. Marks are what is displayed to users in the UI.
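The scenario above can be worked through with a small Python sketch. The function name `mark_from_fraction` and the fraction value 0.5 are assumptions chosen for illustration; only the formula fraction * maxmark comes from the text.

```python
def mark_from_fraction(fraction, maxmark):
    """Convert a stored fraction into the mark shown to users.

    A fraction of None means the question has not been graded yet.
    """
    if fraction is None:
        return None
    return fraction * maxmark


fraction = 0.5                                 # stored once, never rescaled
original = mark_from_fraction(fraction, 3.0)   # question 2 is worth 3 marks
zeroed = mark_from_fraction(fraction, 0.0)     # question now worth nothing
restored = mark_from_fraction(fraction, 3.0)   # decision reversed
```

Here the student's mark goes from 1.5 to 0 and back to 1.5 without the stored fraction ever being touched, which is the point of keeping the two values separate.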

More about question attempts

As mentioned before, a question_attempt is mainly a list of question_attempt_steps. However, it also stores some other important information.

First, we need to know what this attempt is, so there are some links to other objects. There is a link to the question_definition of the question being attempted. There is a link to the question_behaviour that controls how the question behaves. Also, the question_attempt will be part of some student's work for some activity. For example, it may be part of a quiz attempt. The collection of all question_attempts that make up that quiz attempt (or whatever) is represented by a class called question_usage_by_activity, of which more in a moment. We store the id of this $quba, and the index number of this question within the usage.

Second, there is some metadata, stored in the following fields:

- maxmark: How many marks this question is worth. All scores that are stored as fractions need to be multiplied by this before being displayed.

- minfraction: I said above that fraction scores were stored on a scale of 0 to 1. That was a lie. Some questions can return negative marks. For example, a multiple choice question with 5 choices may return +1 for right, and -0.25 for wrong, so that the average score for guessing is 0. Or, if certainty based marking is used, and the student is confident but wrong, they may be awarded a negative mark. The correct statement is that fraction scores are on a scale of minfraction to 1.

- flagged: Questions can be flagged. This is an on/off boolean that can be toggled by the student, and its current value is stored here. Think of it as a simple form of bookmarking. (The history of changes to the flagged state is not considered important, which is why toggling the flag does not create a new step.)
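The multiple choice example given for minfraction can be checked with a short calculation. This Python sketch uses exact fractions; the value of `maxmark` is hypothetical, and the question is assumed to have exactly one right choice picked uniformly at random.

```python
from fractions import Fraction

choices = 5
right = Fraction(1)        # fraction awarded for the one right choice
wrong = Fraction(-1, 4)    # fraction awarded for each wrong choice

# Expected fraction when one choice is picked uniformly at random.
expected = (right + (choices - 1) * wrong) / choices

minfraction = wrong        # lowest fraction this question can award
maxmark = 4                # hypothetical value for this example
lowest_mark = minfraction * maxmark
highest_mark = Fraction(1) * maxmark
```

With these numbers the expected fraction for guessing is exactly 0, and the marks a student can receive range from -1 to 4, that is, from minfraction * maxmark to maxmark.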

Third, and finally, some information is recorded to make it easier to run reports efficiently.

- questionsummary: A plain-text summary of the question the student was asked. (This makes most sense if you think of a question with randomisation, like calculated questions. This field summarises the specific question the student was asked.)

- responsesummary: A plain-text summary of the response the student gave.

- rightanswer: A plain-text summary of what the right answer is.

Question usages

The question_usage_by_activity class, in terms of data storage, is really little more than a list of question attempts.

The little extra comprises:

- owningplugin: The part of Moodle that this usage belongs to. For example 'mod_quiz' or 'core_question_preview'.

- context: The context that the usage belongs to. For a quiz attempt, this will be the quiz context.

- preferredbehaviour: The behaviour that should be used, where possible, when a question is added to this usage.
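Put together, the shape of a usage might be sketched like this in Python. It is a toy model rather than the real class: the `add_question` method, the `contextid` field, the slot numbering from 1, and the behaviour names used as sample values are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class QuestionAttempt:
    """Cut-down stand-in for a question attempt."""
    questionid: int
    behaviour: str
    maxmark: float
    steps: list = field(default_factory=list)


@dataclass
class QuestionUsage:
    """Toy model of a usage: a few fields plus a list of question attempts."""
    owningplugin: str
    contextid: int
    preferredbehaviour: str
    attempts: dict = field(default_factory=dict)  # slot number -> attempt

    def add_question(self, questionid, maxmark, behaviour=None):
        # Use the preferred behaviour unless the caller needs another one,
        # for example because the question can only be graded manually.
        slot = len(self.attempts) + 1
        self.attempts[slot] = QuestionAttempt(
            questionid, behaviour or self.preferredbehaviour, maxmark)
        return slot


quba = QuestionUsage("mod_quiz", contextid=42, preferredbehaviour="deferredfeedback")
first = quba.add_question(questionid=101, maxmark=5.0)
second = quba.add_question(questionid=102, maxmark=3.0, behaviour="manualgraded")
```

The second call shows the "where possible" part of preferredbehaviour: the usage supplies a default, but an individual question attempt can end up with a different behaviour.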

Question behaviours

TODO

More on question states

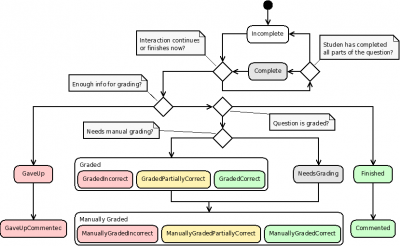

As noted above, the state field of a question_attempt_step is one of the question_state constants. There are quite a lot of these states (about 16), and the state diagram here may help you to understand them.

However, please be aware that although this diagram only shows particular state transitions, it is really up to the behaviour to decide how the question attempt moves from one state to another. Anything is possible, at least in theory. The diagram tries to show what might be sensible.

Database tables

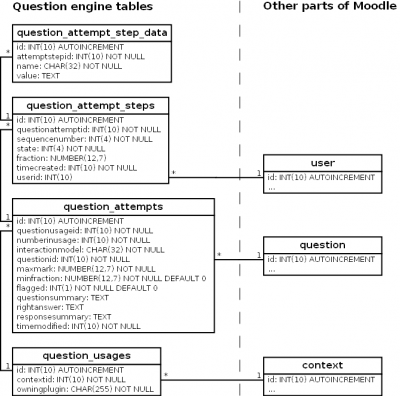

As explained above, all the state is represented by the class question_usage_by_activity, which is a list of question_attempts, each of which is a list of question_attempt_steps. This is naturally stored in three linked database tables, except that because each step can contain an arbitrary list of name => value pairs, we need a fourth table, question_attempt_step_data. This is shown in the diagram.
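To see how the four tables link together, here is a small self-contained Python sketch using an in-memory SQLite database. The schema is heavily simplified: only some columns are included, the column types and the sample rows (including the state names) are assumptions, and the table names omit the mdl_ prefix.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE question_usages (
        id INTEGER PRIMARY KEY, preferredbehaviour TEXT);
    CREATE TABLE question_attempts (
        id INTEGER PRIMARY KEY, questionusageid INTEGER, slot INTEGER,
        behaviour TEXT, questionid INTEGER, maxmark REAL);
    CREATE TABLE question_attempt_steps (
        id INTEGER PRIMARY KEY, questionattemptid INTEGER,
        sequencenumber INTEGER, state TEXT, fraction REAL,
        timecreated INTEGER, userid INTEGER);
    CREATE TABLE question_attempt_step_data (
        id INTEGER PRIMARY KEY, attemptstepid INTEGER, name TEXT, value TEXT);

    INSERT INTO question_usages VALUES (1, 'deferredfeedback');
    INSERT INTO question_attempts VALUES (10, 1, 1, 'deferredfeedback', 101, 5.0);
    INSERT INTO question_attempt_steps VALUES (100, 10, 0, 'todo', NULL, 1000, 5);
    INSERT INTO question_attempt_steps VALUES (101, 10, 1, 'complete', NULL, 1030, 5);
    INSERT INTO question_attempt_step_data VALUES (1, 101, 'answer', 'frog');
""")

# Walk from the usage down to the step data. The LEFT JOIN keeps steps
# that have no name => value pairs attached.
rows = conn.execute("""
    SELECT qa.slot, qas.sequencenumber, qas.state, qasd.name, qasd.value
      FROM question_usages qu
      JOIN question_attempts qa ON qa.questionusageid = qu.id
      JOIN question_attempt_steps qas ON qas.questionattemptid = qa.id
      LEFT JOIN question_attempt_step_data qasd ON qasd.attemptstepid = qas.id
     WHERE qu.id = 1
  ORDER BY qa.slot, qas.sequencenumber, qasd.name
""").fetchall()
```

Each row of the result is one name => value pair of one step, so a step with several pieces of data appears several times, and a step with none appears once with empty name and value columns.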

Detailed data about an attempt

The following query, written for PostgreSQL, lists every step, and every item of step data, for one quiz attempt:

    -- Replace 675767 with the id of the quiz attempt to inspect.
    SELECT
        quiza.userid,
        quiza.quiz,
        quiza.attempt,
        quiza.sumgrades,
        qu.preferredbehaviour,
        qa.slot,
        qa.behaviour,
        qa.questionid,
        qa.maxmark,
        qa.minfraction,
        qa.flagged,
        qas.sequencenumber,
        qas.state,
        qas.fraction,
        -- Convert the Unix timestamp to a readable date and time.
        timestamptz 'epoch' + qas.timecreated * interval '1 second' AS stepcreated,
        qas.userid,
        qasd.name,
        qasd.value,
        qa.questionsummary,
        qa.rightanswer,
        qa.responsesummary
    FROM mdl_quiz_attempts quiza
    JOIN mdl_question_usages qu ON qu.id = quiza.uniqueid
    JOIN mdl_question_attempts qa ON qa.questionusageid = qu.id
    JOIN mdl_question_attempt_steps qas ON qas.questionattemptid = qa.id
    -- LEFT JOIN so that steps with no data are still listed.
    LEFT JOIN mdl_question_attempt_step_data qasd ON qasd.attemptstepid = qas.id
    WHERE quiza.id = 675767
    ORDER BY qas.timecreated, qu.id, qa.slot, qas.sequencenumber, qasd.name

Key processes

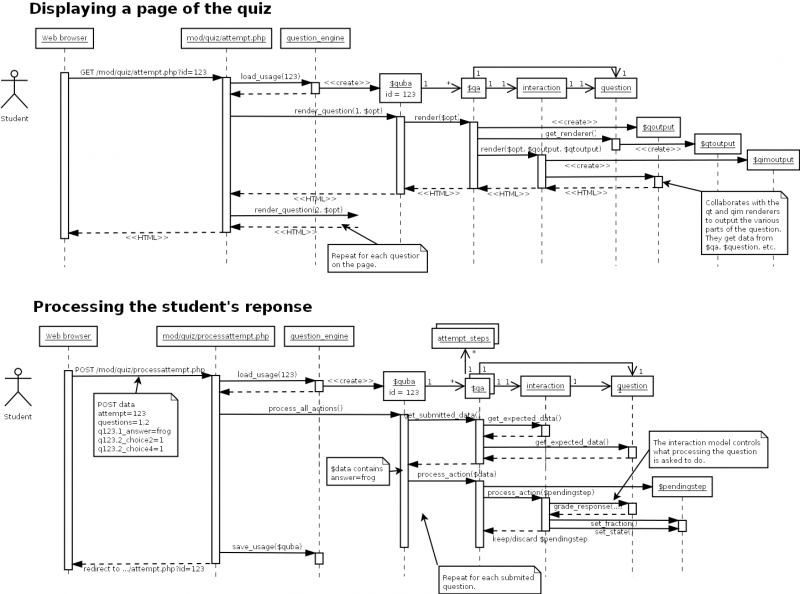

These two sequence diagrams summarise what happens during the two key operations: displaying a page of the quiz, and processing the student's responses.

See also

In the next section, Developing a Question Behaviour, I describe what a developer needs to do to create a question behaviour plugin for the new system.
