Analytics API

Summary

The Analytics API allows Moodle site managers to define prediction models that combine indicators and a target. The target is what we want to predict; the indicators are what we think will lead to an accurate prediction. Moodle is able to evaluate these models and, if the prediction accuracy is good enough, Moodle internally trains a machine learning algorithm by calculating the defined indicators with the site data. Once new data that matches the criteria defined by the model is available, Moodle starts predicting what is most likely to happen. Targets are free to define what actions will be performed for each prediction, from sending messages or feeding reports to building new adaptive learning activities.

An obvious example of a model you may be interested in is the prevention of students at risk of dropping out: lack of participation or bad grades in previous activities could be indicators, and the target could be whether the student is able to complete the course or not. Moodle calculates these indicators and the target for each student in a finished course and predicts which students are at risk of dropping out in ongoing courses.

API components

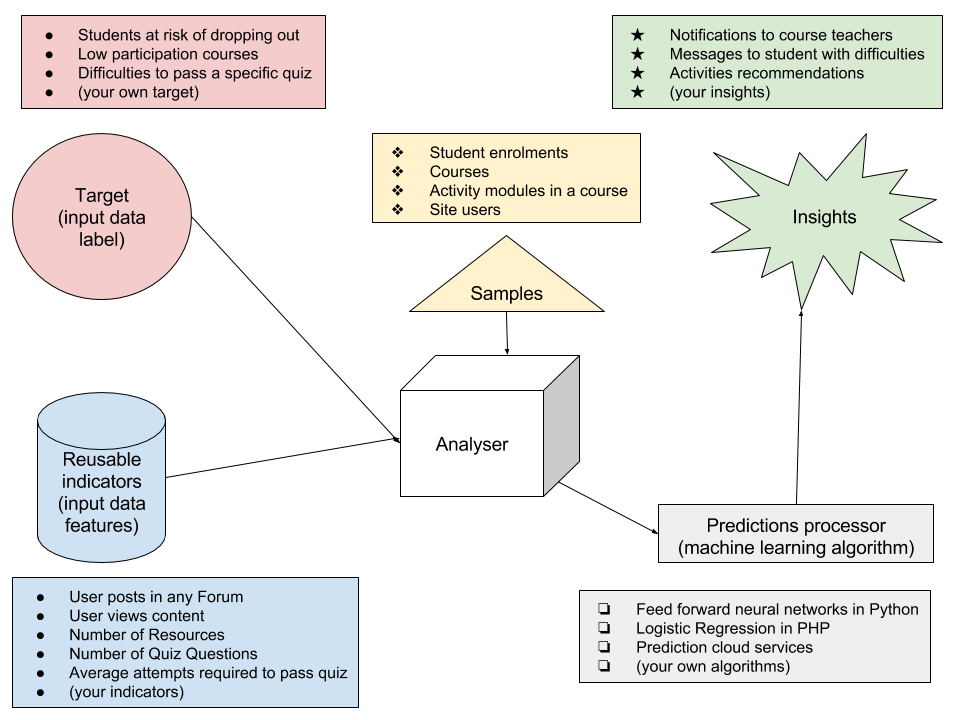

This diagram shows how the different components of the analytics API interact with each other.

Data flow

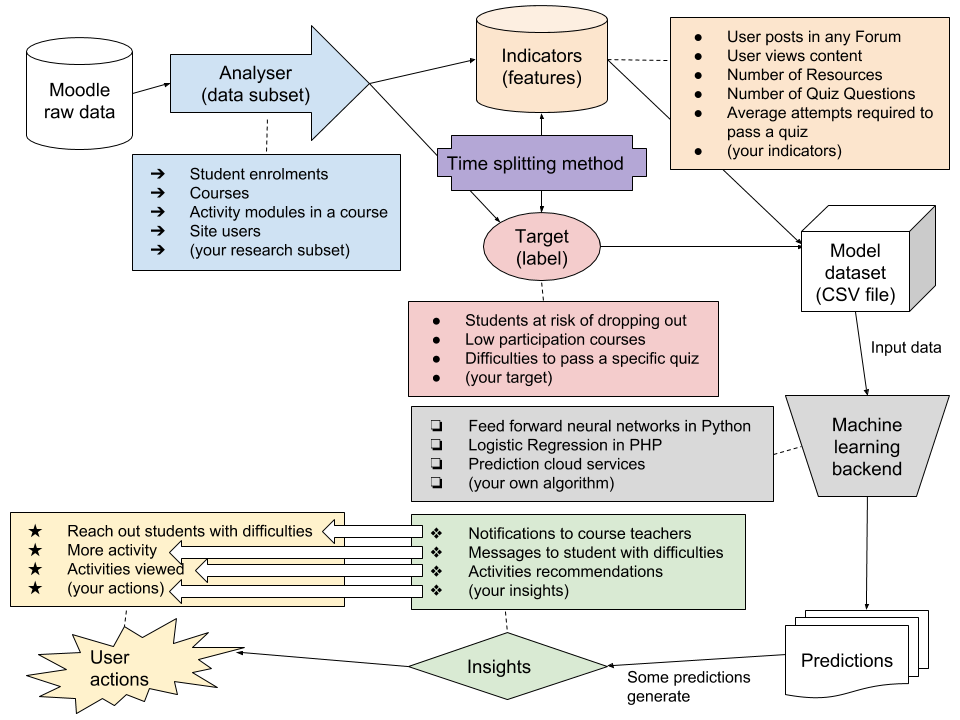

The diagram above shows the different stages data goes through, from the data any Moodle site contains to actionable insights.

Built-in models

People use Moodle in very different ways, and even courses on the same site can vary significantly. Moodle should only be shipped with models that have been proven to be good at predicting in a wide range of sites and courses.

To diversify the samples and cover a wider range of cases, the Moodle HQ research team is collecting anonymised Moodle site datasets from collaborating institutions and partners to train the machine learning algorithms with them. The models that Moodle is shipped with are obviously better at predicting on these institutions' sites, although some other datasets are used as test data for the machine learning algorithm to ensure that the models are good enough to predict accurately in any Moodle site. If you are interested in collaborating, please contact Elizabeth Dalton at elizabeth@moodle.com to get information about the process.

Even if the models Moodle is shipped with are already trained by Moodle HQ, each site will continue training its own machine learning algorithms with its own data, which should lead to better prediction accuracy over time.

Concepts

Training

Definition for people not familiar with machine learning concepts: training is a process we need to run before being able to predict anything. We record what already happened so we can later predict what is likely to happen under the same circumstances. What we train are machine learning algorithms.

Prediction model

As explained above, a prediction model is a combination of indicators and a target.

The relation between indicators and targets is stored in the analytics_models database table.

The class \core_analytics\model manages all model-related actions. Its evaluate(), train() and predict() methods forward the calculated indicators to the machine learning backends. \core_analytics\model delegates all heavy processing to analysers and machine learning backends. It also manages prediction model evaluation logs.

The \core_analytics\model class is not expected to be extended.
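The following minimal Python sketch illustrates this division of labour. Moodle's implementation is PHP, and every class and method name below is hypothetical. The model asks an analyser for calculated rows and forwards them to a backend, which does the learning. The backend is a deliberately trivial majority-class predictor that stands in for a real machine learning algorithm.

```python
# Hypothetical sketch; none of these names belong to Moodle's PHP API.
from collections import Counter
from typing import Dict, List, Optional, Tuple

# Hypothetical row format: (sample_id, indicator values, target label or None if unknown)
Row = Tuple[int, List[float], Optional[int]]


class MajorityBackend:
    """Toy stand-in for a machine learning backend: always predicts the most common label."""

    def __init__(self) -> None:
        self.label = 0

    def train(self, rows: List[Row]) -> None:
        counts = Counter(label for _, _, label in rows)
        self.label = counts.most_common(1)[0][0]

    def predict(self, rows: List[Row]) -> Dict[int, int]:
        return {sample_id: self.label for sample_id, _, _ in rows}


class ListAnalyser:
    """Toy analyser holding precomputed rows for finished and ongoing samples."""

    def __init__(self, finished: List[Row], ongoing: List[Row]) -> None:
        self.finished = finished
        self.ongoing = ongoing


class Model:
    """Hypothetical model: no heavy processing of its own, it only forwards data."""

    def __init__(self, analyser: ListAnalyser, backend: MajorityBackend) -> None:
        self.analyser = analyser
        self.backend = backend

    def train(self) -> None:
        # Samples whose target is already known (e.g. finished courses) train the backend.
        self.backend.train(self.analyser.finished)

    def predict(self) -> Dict[int, int]:
        # Samples whose target is still unknown (e.g. ongoing courses) get predictions.
        return self.backend.predict(self.analyser.ongoing)
```

For example, after training on finished samples labelled 1, 1 and 0, predicting an ongoing sample assigns it label 1. Because the model stays this thin, analysers and backends can be swapped independently.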

Static models

Some prediction models do not need a powerful machine learning algorithm behind them, processing tons of data to make more or less accurate predictions. There are obvious things different stakeholders may be interested in knowing that we can easily calculate. The predictions of these *static models* are directly based on indicator calculations. They rely on the assumptions defined in the target, but they should still be based on indicators so that all these indicators can be reused across different prediction models.

Some examples of static models:

- Courses without teachers

- Courses without activities

- Courses with student submissions requiring attention and no teachers accessing the course

- Courses that started 1 month ago and have never been accessed by anyone

- Students that have never logged into the system

- ....

These cases are nothing new, and we could already be generating notifications for the examples above, but there are some benefits to doing it with the Moodle analytics API:

- Everything is easier and faster to code from a developer's point of view, as the analytics subsystem provides APIs for everything

- New indicators will be part of the core indicators pool that researchers (and 3rd party developers in general) can reuse in their own models

- Existing core indicators can be reused as well (the same indicators used for insights that depend on machine learning backends)

- Notifications are displayed using the core insights system, which is also responsible for sending the notifications and everything related to them.

- The analytics API tracks user actions after users view the predictions, so we can know whether insights lead to actions, which insights are not useful, and so on.
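A static model needs no training: the prediction follows directly from an indicator value plus the assumption encoded in the target. The Python sketch below flags "courses without teachers". Its names and data are hypothetical and it is not Moodle's PHP API. The indicator uses the -1 to 1 range that indicators are expected to return.

```python
# Hypothetical sketch of a static model; not Moodle's PHP API.
from typing import Dict, List


def no_teacher_indicator(teacher_count: int) -> float:
    """Hypothetical indicator: -1 when a course has no teachers, 1 otherwise."""
    return -1.0 if teacher_count == 0 else 1.0


def courses_without_teachers(teachers_per_course: Dict[int, int]) -> List[int]:
    """Static target: the rule 'indicator == -1 needs attention' replaces a trained algorithm."""
    return sorted(
        course_id
        for course_id, count in teachers_per_course.items()
        if no_teacher_indicator(count) == -1.0
    )
```

The indicator stays reusable on its own, while the target adds only the rule that turns its value into an insight.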

Analyser

Analyser classes are responsible for creating the dataset files that will be sent to the machine learning processors.

The base class \core_analytics\local\analyser\base does most of the work. It contains one key abstract method, though: get_all_samples(). This method defines what a sample is. A sample can be any Moodle entity: a course, a user, an enrolment, a quiz attempt... Samples are nothing by themselves, just a list of ids; they make sense once they are combined with the target and the indicator classes.

Other responsibilities of analyser classes:

- Define the context of the predictions

- Discard invalid data

- Filter out already trained samples

- Include the time factor (time range processors, explained below)

- Forward calculations to indicators and target classes

- Record all calculations in a file

- Record all analysed sample ids in the database
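Some of these responsibilities are sketched below in Python. Everything here is hypothetical and simplified. Real analysers are PHP classes extending \core_analytics\local\analyser\base, and contexts and time ranges are left out of this sketch. Samples are plain ids, and the indicators and the target are callables. Invalid samples are discarded and already-trained samples are skipped. Each remaining sample becomes one CSV row, a file format assumed here for illustration.

```python
# Hypothetical sketch of an analyser's dataset-building loop; not Moodle's PHP API.
import csv
import io
from typing import Callable, List, Optional, Set, TextIO

Indicator = Callable[[int], Optional[float]]
Target = Callable[[int], Optional[int]]


def build_dataset(
    sample_ids: List[int],
    indicators: List[Indicator],
    target: Target,
    already_trained: Set[int],
    out: TextIO,
) -> List[int]:
    """Write one CSV row per usable sample and return the ids that were analysed."""
    writer = csv.writer(out)
    analysed: List[int] = []
    for sample_id in sample_ids:
        if sample_id in already_trained:
            continue  # Filter out samples that were already used for training.
        values = [indicator(sample_id) for indicator in indicators]
        label = target(sample_id)
        if label is None or any(value is None for value in values):
            continue  # Discard samples with invalid or missing data.
        writer.writerow([sample_id, *values, label])
        analysed.append(sample_id)
    # A real analyser would also record these ids in the database.
    return analysed


if __name__ == "__main__":
    buffer = io.StringIO()
    ids = build_dataset([1, 2, 3], [lambda s: 0.5], lambda s: s % 2, {1}, buffer)
    print(ids, buffer.getvalue())
```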

If you are introducing a new analyser, there is an important non-obvious fact you should know about: for scalability reasons, all calculations at course level are executed on a per-course basis, and the resulting datasets are merged together once the analysis of all site courses is complete. We do it this way because, depending on the site's size, analysing the whole site could take hours, and this is a good way to break the process up into pieces. When coding a new analyser, you need to decide whether to extend \core_analytics\local\analyser\by_course (your analyser will process a list of courses) or \core_analytics\local\analyser\sitewide (your analyser will receive just one analysable element, the site).
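The per-course strategy can be pictured with the Python sketch below. The function names are hypothetical and are not Moodle's PHP API. Each run analyses at most a fixed number of pending courses, so a long site-wide analysis can be spread over several executions. The per-course datasets are merged only once no course is left.

```python
# Hypothetical sketch of per-course analysis with a final merge; not Moodle's PHP API.
from typing import Callable, Dict, List

Row = List[float]


def run_batch(
    pending: List[int],
    done: Dict[int, List[Row]],
    analyse_course: Callable[[int], List[Row]],
    max_courses: int,
) -> bool:
    """Analyse up to max_courses pending courses; return True once none are left."""
    for _ in range(min(max_courses, len(pending))):
        course_id = pending.pop(0)
        done[course_id] = analyse_course(course_id)
    return not pending


def merge(done: Dict[int, List[Row]]) -> List[Row]:
    """Concatenate the per-course datasets, ordered by course id."""
    merged: List[Row] = []
    for course_id in sorted(done):
        merged.extend(done[course_id])
    return merged
```

A scheduled task could call `run_batch` repeatedly and call `merge` only after it returns True.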

Target

Target classes define what we want to predict and calculate it across the site. They also define the actions to perform depending on the received predictions.

Targets depend on analysers because analysers provide them with the samples they need. Analysers are separate entities from targets because analysers can be reused across different targets. Each target needs to specify which analyser it is using. A few examples, in case the difference between analysers, samples and targets is not clear:

- Target: 'students at risk of dropping out'. Analyser provides: 'course student'

- Target: 'spammer'. Analyser provides: 'site users'

- Target: 'ineffective course'. Analyser provides: 'courses'

- Target: 'difficulties to pass a specific quiz'. Analyser provides: 'quiz attempts in a specific quiz'

A callback defined by the target will be executed once new predictions start coming in, so each target has control over the prediction results.

Initially only binary classification is supported, although the API will be extended in the future to support discrete values (multiclass classification) and continuous values (linear regression). See MDL-59044 (https://tracker.moodle.org/browse/MDL-59044) for more info.
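A minimal Python sketch of a binary target follows. The class, attribute and method names are hypothetical and do not reproduce the PHP signatures of \core_analytics\local\target\base. The target names the analyser it relies on and labels each training sample with 0 or 1. It also receives a callback with new predictions, so it decides what happens next.

```python
# Hypothetical sketch of a binary target; not the PHP API of \core_analytics\local\target\base.
from typing import Dict, List


class DropoutTarget:
    """Hypothetical binary target: 1 = at risk of dropping out, 0 = completed the course."""

    # Name of the analyser whose samples this target expects (illustrative only).
    analyser = "course_student"

    def __init__(self) -> None:
        self.flagged: List[int] = []

    def label_sample(self, completed_course: bool) -> int:
        # Binary classification: every sample gets exactly one of two labels.
        return 0 if completed_course else 1

    def on_predictions(self, predictions: Dict[int, int]) -> None:
        # Callback run when new predictions arrive; this one only records at-risk samples.
        self.flagged.extend(sorted(s for s, label in predictions.items() if label == 1))
```

A real target would trigger actions, such as notifications, from its callback instead of just storing ids.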

Insights

Another aspect controlled by targets is insight generation. Analyser samples always have a context; the context level (activity module, course, user...) depends on the sample. This context is used to notify users with the moodle/analytics:listinsights capability (teacher role by default) that new insights are available. These users will receive a notification with a link to the predictions page, where all predictions for that context are listed.

A set of suggested actions will be available for each prediction. In cases like Students at risk of dropping out, the actions can be things like sending a message to the student or viewing their course activity report...
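The Python sketch below shows one way new predictions could be turned into notifications. The data structures are hypothetical stand-ins for Moodle contexts, capability checks and messaging. The logic only mirrors the description above: predictions are grouped by context, and each user holding moodle/analytics:listinsights in a context is notified once per context.

```python
# Hypothetical sketch of insight notification targeting; not Moodle's PHP API.
from collections import defaultdict
from typing import Dict, List, Set, Tuple

CAPABILITY = "moodle/analytics:listinsights"


def insight_notifications(
    predictions: List[Tuple[int, int]],  # (sample_id, context_id)
    capabilities: Dict[int, Dict[int, Set[str]]],  # context_id -> user_id -> capabilities
) -> Dict[int, List[int]]:
    """Return user_id -> ids of the contexts whose predictions page they should be sent."""
    contexts = {context_id for _, context_id in predictions}
    notify: Dict[int, List[int]] = defaultdict(list)
    for context_id in sorted(contexts):
        for user_id, caps in capabilities.get(context_id, {}).items():
            if CAPABILITY in caps:
                notify[user_id].append(context_id)
    return dict(notify)
```

Several predictions in the same context produce a single link, because the predictions page lists them all.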

Indicator

Indicators are also defined as PHP classes. Their responsibility is quite simple: to calculate the indicator using the provided sample.

Indicators are not limited to one single analyser like targets are, which makes indicators easier to reuse in different models. Indicators specify a minimum set of data they need to perform the calculation. The indicator developer should also make an effort to imagine how the indicator will work when different analysers are used. For example, an indicator named Posts in any forum could be initially coded for a Shy students in a course target. This target would use the course enrolments analyser, so the indicator developer knows that a course and a user will be provided by that analyser. However, the indicator can easily be coded so that other analysers, like courses or users, can reuse it. In this case the developer can choose to require a course or a user, and the name of the indicator would change accordingly: User posts in any forum, which could be used in models like Inactive users, or Posts in any of the course forums, which could be used in models like Low participation courses.

The calculated value can range from -1 (minimum) to 1 (maximum). This guarantees that we will not have indicators like the absolute number of write actions, because we are forced to map the calculation onto a limited range, e.g. -1 = 0 actions, -0.33 = some basic activity, 0.33 = activity, 1 = plenty of activity.
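The following Python sketch makes the "write actions" example concrete by mapping an absolute count onto the four levels listed above. The function name and the thresholds (0, 1 to 4, 5 to 19, 20 or more) are invented for illustration and are not values Moodle uses.

```python
# Hypothetical indicator sketch; the thresholds are illustrative, not Moodle's.
def write_actions_indicator(count: int) -> float:
    """Map an absolute number of write actions onto the -1..1 indicator scale."""
    if count < 0:
        raise ValueError("count cannot be negative")
    if count == 0:
        return -1.0  # no actions
    if count < 5:
        return -0.33  # some basic activity
    if count < 20:
        return 0.33  # activity
    return 1.0  # plenty of activity
```

Whatever a site's absolute activity levels are, the output stays within -1..1. This is what allows the same indicator to be combined with others in any model.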

Time splitting methods

In some cases the time factor is not important and we just want to classify a sample. That is fine, but things get more complicated when we want to predict what will happen in the future. For example, predictions about students at risk of dropping out are not useful once the course is over or when it is too late for any intervention.

Calculations in time ranges can be a challenging aspect of some prediction models. Indicators need to be designed with this in mind. We also need to add time-dependent indicators to the calculated indicators. This keeps machine learning algorithms from mixing calculations that belong to the beginning of the course with calculations that belong to the end of the course.

There are many different ways to split up a course into time ranges: weeks, quarters, 8 parts, ranges with longer periods at the beginning and shorter periods at the end... The ranges can also be cumulative (measured from the beginning of the course) or cover only their own period (measured from the start of the time range).
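The Python sketch below implements one of these strategies, splitting a course into equal parts, in both its cumulative and non-cumulative variants. The function and its parameters are hypothetical and do not correspond to any of Moodle's time-splitting classes. Times are plain Unix timestamps.

```python
# Hypothetical time-splitting sketch; not one of Moodle's time-splitting classes.
from typing import List, Tuple


def split_course(start: int, end: int, parts: int, cumulative: bool) -> List[Tuple[int, int]]:
    """Split the period [start, end) into `parts` equal time ranges."""
    if end <= start or parts < 1:
        raise ValueError("need end > start and at least one part")
    step = (end - start) / parts
    ranges = []
    for i in range(parts):
        # Cumulative ranges always begin at the course start; others begin at their own slot.
        range_start = start if cumulative else start + round(i * step)
        range_end = start + round((i + 1) * step)
        ranges.append((range_start, range_end))
    return ranges
```

With `parts=4` this gives quarters: `split_course(0, 100, 4, cumulative=True)` returns `[(0, 25), (0, 50), (0, 75), (0, 100)]`.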

Which time-splitting method predicts best depends on the prediction model. The model evaluation process iterates through all enabled time-splitting methods in the system and returns the prediction accuracy of each of them.
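The evaluation loop can be pictured as the Python sketch below, in which each enabled time-splitting method gets its own score. The method names and the scoring callable are placeholders. In Moodle, the accuracy figures come from the machine learning backend's evaluation of each method.

```python
# Hypothetical sketch of evaluating a model once per time-splitting method.
from typing import Callable, Dict, List


def evaluate_time_splittings(
    methods: List[str],
    score: Callable[[str], float],
) -> Dict[str, float]:
    """Return the prediction accuracy obtained with each time-splitting method."""
    return {method: score(method) for method in methods}


def best_method(results: Dict[str, float]) -> str:
    """Pick the method with the highest accuracy (ties go to the first one listed)."""
    return max(results, key=lambda method: results[method])
```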

Machine learning backends

Machine learning backends process the datasets generated from the calculated indicators and targets. They are a new plugin type with a common interface:

- Evaluate a provided prediction model

- Train a machine learning algorithm with the existing site data

- Predict targets based on previously trained algorithms

The communication between prediction processors and Moodle happens through files, because the code that processes the dataset can be written in PHP, in Python or in other languages, or can even use cloud services. This needs to be scalable, so backends are expected to handle big files and, if necessary, to train algorithms by reading input files in batches.
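Because the exchange happens through files, a backend written in any language only needs to be able to read the dataset. The Python sketch below reads a CSV dataset in fixed-size batches, so memory use stays bounded. The row layout (sample id, indicator values, target label) is an assumption made for illustration and is not Moodle's exact dataset format.

```python
# Hypothetical batch reader; the dataset layout is assumed, not Moodle's exact format.
import csv
from itertools import islice
from typing import Iterable, Iterator, List, Tuple

Row = Tuple[str, List[float], int]


def read_batches(lines: Iterable[str], batch_size: int) -> Iterator[List[Row]]:
    """Yield (sample_id, indicators, label) rows in batches of at most batch_size."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    reader = csv.reader(lines)
    while True:
        chunk = list(islice(reader, batch_size))
        if not chunk:
            return
        # Assumed layout: first column is the sample id, last column the label.
        yield [(row[0], [float(v) for v in row[1:-1]], int(row[-1])) for row in chunk]
```

Since `csv.reader` accepts any iterable of strings, an open file handle can be passed as `lines`, and the whole file is never loaded at once.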

Design

The system is designed as a Moodle subsystem and API, and it lives in analytics/. Machine learning backends belong to lib/mlbackend. Uses of the analytics API are located in different Moodle components, with core (lib/classes/analytics) being the component that hosts general-purpose uses of the API.

Extendable classes

Moodle components (core subsystems, core plugins and 3rd party plugins) will be able to add and/or redefine any of the entities involved in the whole data modeling process.

Some of the base classes to extend or follow as example:

- \core_analytics\local\analyser\base

- \core_analytics\local\time_splitting\base

- \core_analytics\local\indicator\base

- \core_analytics\local\target\base

Read more in the Extensibility section below.

Interfaces

Predictor

Interface to be implemented by machine learning backends. It is a pretty basic interface, with just methods to train, predict and evaluate datasets.
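Expressed as a Python abstract base class, an interface of this shape might look like the sketch below. The method names and the use of a dataset file path as the argument are assumptions, since the real interface is defined in PHP. The point is that Moodle only needs to rely on train, predict and evaluate, so any backend implementing them could be plugged in.

```python
# Hypothetical backend interface sketch; the real Moodle interface is PHP.
from abc import ABC, abstractmethod
from typing import Dict


class Predictor(ABC):
    """Hypothetical backend interface: every method works on a dataset file."""

    @abstractmethod
    def train(self, dataset_path: str) -> None:
        """Train the algorithm with a labelled dataset."""

    @abstractmethod
    def predict(self, dataset_path: str) -> Dict[str, int]:
        """Return a predicted label for each sample id in an unlabelled dataset."""

    @abstractmethod
    def evaluate(self, dataset_path: str) -> float:
        """Return an accuracy score between 0 and 1 for a labelled dataset."""


class NullPredictor(Predictor):
    """Trivial implementation used only to show the interface being satisfied."""

    def train(self, dataset_path: str) -> None:
        pass

    def predict(self, dataset_path: str) -> Dict[str, int]:
        return {}

    def evaluate(self, dataset_path: str) -> float:
        return 0.0
```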

Analysable

Analysable items are analysed by analysers :P In most cases an analysable will be a course, although it can also be the site or any other element.

We will include two analysables, \core_analytics\course and \core_analytics\site, which should be enough for most of the things you might want to analyse. Analysables need to provide an id and a \context. They also need get_start() and get_end() methods if you expect them to be used in models that require time splitting. Read the related comments in the Analyser section above.

A possible extension point of \core_analytics\site would be to redefine get_start() and get_end().
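The Python sketch below shows what an analysable has to expose: an id, a context and, for models that use time splitting, start and end times. The classes and fields are hypothetical stand-ins for \core_analytics\course and \core_analytics\site, with the context reduced to a plain id. The subclass illustrates the kind of get_start()/get_end() redefinition mentioned above. Here it narrows the site to one academic year, chosen purely for illustration.

```python
# Hypothetical analysable sketch; not the PHP classes \core_analytics\course or \core_analytics\site.
from dataclasses import dataclass


@dataclass
class Analysable:
    """Hypothetical analysable: an id, a context id and a time window."""

    id: int
    context_id: int
    start: int  # Unix timestamp
    end: int  # Unix timestamp

    def get_start(self) -> int:
        return self.start

    def get_end(self) -> int:
        return self.end


@dataclass
class AcademicYearSite(Analysable):
    """Site analysable that redefines get_start()/get_end() to cover one academic year."""

    year_start: int = 0
    year_end: int = 0

    def get_start(self) -> int:
        # Ignore activity before the chosen year.
        return max(self.start, self.year_start)

    def get_end(self) -> int:
        # Ignore activity after the chosen year.
        return min(self.end, self.year_end)
```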

Calculable

Indicators and targets must implement this interface.

It is already implemented by \core_analytics\local\indicator\base and \core_analytics\local\target\base, but you can still code targets or indicators directly against the \core_analytics\calculable interface if you need more control.
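The split between the interface and the base classes can be sketched with Python abstract base classes. All names are hypothetical, since the real interface and base classes are PHP. Calculable is the shared contract, and IndicatorBase implements the common loop plus a -1..1 range check. A concrete indicator only provides its name and a per-sample calculation.

```python
# Hypothetical sketch of an interface plus base class; the real ones are PHP.
from abc import ABC, abstractmethod
from typing import Dict, List, Optional


class Calculable(ABC):
    """Hypothetical shared contract for indicators and targets."""

    @abstractmethod
    def get_name(self) -> str: ...

    @abstractmethod
    def calculate(self, sample_ids: List[int]) -> Dict[int, Optional[float]]: ...


class IndicatorBase(Calculable):
    """Base class doing the common work; subclasses only calculate one sample."""

    def calculate(self, sample_ids: List[int]) -> Dict[int, Optional[float]]:
        results: Dict[int, Optional[float]] = {}
        for sample_id in sample_ids:
            value = self.calculate_one(sample_id)
            # Enforce the -1..1 range that indicator values must respect.
            if value is not None and not -1.0 <= value <= 1.0:
                raise ValueError(f"{self.get_name()} returned {value} outside -1..1")
            results[sample_id] = value
        return results

    @abstractmethod
    def calculate_one(self, sample_id: int) -> Optional[float]: ...


class EvenSampleIndicator(IndicatorBase):
    """Toy indicator: 1 for even sample ids, -1 for odd ones."""

    def get_name(self) -> str:
        return "even_sample"

    def calculate_one(self, sample_id: int) -> Optional[float]:
        return 1.0 if sample_id % 2 == 0 else -1.0
```

Coding directly against Calculable would mean writing `calculate` yourself, which is the extra control mentioned above.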

Extensibility

This project aims to be as extendable as possible. Any Moodle component, including third party plugins, will be able to define indicators, targets, analysers and time-splitting processors.

An example of a possible extension would be a plugin with indicators that fetch student academic records from a university's student information system (SIS). The site admin could then build a new model on top of the built-in 'students at risk of dropping out' detection model, adding the SIS indicators to improve the model accuracy or for research purposes.