Analytics settings

The Moodle learning analytics system requires some initial configuration before it can be used. You can access the analytics settings from Site administration > Analytics > Analytics settings.

Site information

New feature in Moodle 3.7!

Site information is used to help learning analytics models take the characteristics of the institution into account. This information is also reported as part of the site data collection when the site is registered. This allows Moodle HQ to understand which areas of learning analytics are used most and to prioritise development resources accordingly.

Configuring learning analytics settings

New feature in Moodle 3.8!

Analytics can be disabled from Site administration / Advanced features.

Analytics can then be configured from Site administration / Analytics.

Predictions processor

Predictions processors are the machine learning backends that process the datasets generated from the calculated indicators and targets and return predictions. Moodle core includes 2 predictions processors:

PHP predictions processor

The PHP processor is the default. There are no other system requirements to use this processor.

Python predictions processor

The Python processor is more powerful and generates graphs that explain the model's performance. It requires additional tools to be set up: Python itself (https://wiki.python.org/moin/BeginnersGuide/Download) and the moodlemlbackend Python package. The package can be installed on the web server (on all the nodes in a clustered environment) or on a separate server.

Installed in the web server

The latest version of the package for Moodle 3.8 is compatible with Python 3.4, 3.5, 3.6 and 3.7. Note that the package should be available to both the command line interface (CLI) user and the user that runs the web server (for example, www-data).

- If necessary, install Python 3 (and pip for Python 3)

- Ensure that you use Python 3 to install the moodlemlbackend package:

sudo -H python3 -m pip install "moodlemlbackend==2.3.*"

- You must also enter the path to the Python 3 executable in Site administration -> Server -> System paths.
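As a quick sanity check after the steps above, the following sketch reports which interpreter is running and whether it can see the moodlemlbackend package. It uses only the Python standard library; the helper names `is_installed` and `describe_interpreter` are made up for this illustration and are not part of Moodle or of the package.

```python
import importlib.util
import sys


def is_installed(package_name):
    """Return True if this interpreter can locate the named top-level package."""
    return importlib.util.find_spec(package_name) is not None


def describe_interpreter():
    """Summarise the running interpreter and whether moodlemlbackend is visible to it."""
    return {
        # Path of the running interpreter; a candidate value for the System paths setting.
        "executable": sys.executable,
        "python_version": "%d.%d" % sys.version_info[:2],
        "moodlemlbackend_found": is_installed("moodlemlbackend"),
    }


if __name__ == "__main__":
    for key, value in describe_interpreter().items():
        print("%s: %s" % (key, value))
```

Running the script once as the CLI user and once as the web server user (for example, through `sudo -u www-data python3`) should show the package as found in both cases.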

Installed in a separate server

Installing the Python package on a separate server, rather than on the web server(s), has some advantages:

- It keeps the Python ML backend as an external service

- It keeps the resources the web server(s) dedicate to serving Moodle under separate control from the resources dedicated to the Python ML backend

- You can reuse the same ML server for multiple Moodle sites. This is easier to set up and maintain than installing or upgrading the Python package on every node in the cluster

- You can install the package as a new docker container in your dockerized environment

- You can serve the ML backend from AWS through the API gateway and AWS lambda, storing the trained model files in S3

On the other hand, connecting to a separate Python ML backend server is likely to add some latency.

The Python backend is exposed as a Flask application. The Flask application is part of the official 'moodlemlbackend' Python package and its FLASK_APP script is 'webapp', in the root of the package. You are free to use whichever setup best suits your existing infrastructure.

New server in your infrastructure

The Python ML backend is exposed as a Flask application, which needs a WSGI server (https://wsgi.readthedocs.io/en/latest/what.html) to be served over the web. The official documentation on how to deploy a Flask app can be found at https://flask.palletsprojects.com/en/1.0.x/tutorial/deploy/.

- Use the MOODLE_MLBACKEND_PYTHON_USERS environment variable to set a comma-separated list of users and passwords. For example, the value 'default:sshhhh' sets the user default with the password sshhhh.

- Set MOODLE_MLBACKEND_PYTHON_DIR to the path you want to use to store the data generated by the package
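To make the format of MOODLE_MLBACKEND_PYTHON_USERS concrete, here is a minimal sketch that splits such a value into usernames and passwords. It assumes the format is a comma-separated list of `username:password` entries, as the 'default:sshhhh' example suggests; `parse_users` is a hypothetical helper written for this page and is not the package's own parsing code.

```python
import os


def parse_users(value):
    """Parse 'user1:pass1,user2:pass2' into a {username: password} dict."""
    users = {}
    for entry in value.split(","):
        entry = entry.strip()
        if not entry:
            continue  # tolerate empty input and trailing commas
        # Split on the first colon only, so passwords may contain colons.
        username, separator, password = entry.partition(":")
        if not separator or not username:
            raise ValueError("expected 'username:password', got %r" % entry)
        users[username] = password
    return users


if __name__ == "__main__":
    raw = os.environ.get("MOODLE_MLBACKEND_PYTHON_USERS", "")
    # Print usernames only, never the passwords.
    print("Configured users:", sorted(parse_users(raw)))
```

A check like this can catch a malformed value before the backend is started.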

Docker

https://hub.docker.com/r/moodlehq/moodle-mlbackend-python is the official moodle-mlbackend-python Docker image. We use it at Moodle HQ for internal testing and you can use it as well. If you want more control over the image, https://github.com/moodlehq/moodle-docker-mlbackend-python/blob/master/Dockerfile can serve as an example of what is needed to get the Python moodlemlbackend package working.

- Use the MOODLE_MLBACKEND_PYTHON_USERS environment variable to set a comma-separated list of users and passwords. For example, the value 'default:sshhhh' sets the user default with the password sshhhh.

To run the docker container locally you can execute:

docker pull moodlehq/moodle-mlbackend-python:2.4.0-python3.7.5

- Note that you need to add --network=moodledocker_default if you are using moodle-docker and you want this container to be visible from the web server.

docker run -d -p 5000:5000 --name=mlbackendpython --rm --add-host=mlbackendpython:0.0.0.0 moodlehq/moodle-mlbackend-python:2.4.0-python3.7.5

Then add this to your config.php file:

$CFG->pathtopython = 'python';

define('TEST_MLBACKEND_PYTHON_HOST', 'localhost'); // Change to "mlbackendpython" if you are using moodle-docker.

define('TEST_MLBACKEND_PYTHON_PORT', 5000);

define('TEST_MLBACKEND_PYTHON_USERNAME', 'default');

define('TEST_MLBACKEND_PYTHON_PASSWORD', 'sshhhh');

To check that it works, run the following command; it should not skip any tests:

vendor/bin/phpunit analytics/tests/prediction_test.php --verbose
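If tests are skipped, one thing worth ruling out is basic connectivity. The sketch below only checks that something accepts TCP connections on the configured host and port; it does not call any endpoint of the backend, and `is_port_open` is a hypothetical helper name. The host and port in the example match the config.php values shown above.

```python
import socket


def is_port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution failures.
        return False


if __name__ == "__main__":
    # Use "mlbackendpython" as the host when running inside moodle-docker.
    print("reachable" if is_port_open("localhost", 5000) else "not reachable")
```

A successful connection only shows that the port is open; the username and password are still verified by the PHPUnit run itself.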

AWS serverless service

You can serve the Flask application as a serverless application using the AWS API gateway and AWS Lambda. The easiest way to do this is to use Zappa (https://github.com/Miserlou/Zappa) to deploy the Flask application contained in the Python ML package.

- Use the MOODLE_MLBACKEND_PYTHON_USERS environment variable to set a comma-separated list of users and passwords. For example, the value 'default:sshhhh' sets the user default with the password sshhhh.

- Set MOODLE_MLBACKEND_PYTHON_DIR to the path you want to use to store the data generated by the package

- Set the following environment variables to set up S3 access:

  - MOODLE_MLBACKEND_PYTHON_S3_BUCKET_NAME to the bucket name

  - AWS_ACCESS_KEY_ID as usual

  - AWS_SECRET_ACCESS_KEY as usual
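Before deploying, it may help to confirm that none of the variables listed above has been forgotten. The following is a small sketch under that assumption: the variable names come from the list above, while `REQUIRED_VARS` and `missing_vars` are hypothetical names used only for this illustration.

```python
import os

# Variable names taken from the list above.
REQUIRED_VARS = (
    "MOODLE_MLBACKEND_PYTHON_USERS",
    "MOODLE_MLBACKEND_PYTHON_DIR",
    "MOODLE_MLBACKEND_PYTHON_S3_BUCKET_NAME",
    "AWS_ACCESS_KEY_ID",
    "AWS_SECRET_ACCESS_KEY",
)


def missing_vars(environ, required=REQUIRED_VARS):
    """Return the required variable names that are unset or empty in environ."""
    return [name for name in required if not environ.get(name)]


if __name__ == "__main__":
    missing = missing_vars(os.environ)
    print("All set" if not missing else "Missing: " + ", ".join(missing))
```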

Once this has been done, you can select the Python prediction processor as the default or for an individual model.

Log store

From Moodle version 2.7 and up, the “Standard logstore” is the default. If for some reason you also have data in the older “legacy logs,” you can enable the Moodle Learning Analytics system to access them instead.

Analysis intervals

Analysis intervals determine how often insights will be generated, and how much information to use for each calculation. Using proportional analysis intervals allows courses of different lengths to be used to train a single model.

Several analysis intervals are available for models in the system. This setting defines which analysis intervals are used when evaluating models, so that, for example, the best analysis interval identified by the evaluation process can be selected for the model. This setting does not restrict the analysis intervals that can be used for specific models.

Each analysis interval divides the course duration into segments. At the end of each defined segment, the predictions engine will run and generate insights. It is recommended that you only enable the analysis intervals you are interested in using; the evaluation process will iterate through all enabled analysis intervals, so the more analysis intervals enabled, the slower the evaluation process will be.

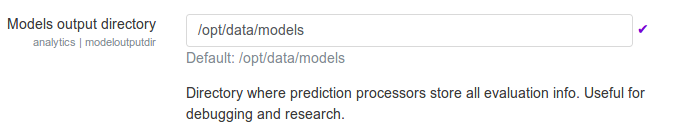
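To illustrate how a proportional analysis interval divides a course into segments, the sketch below splits a course into equal parts and lists the moment each part ends, which is when predictions would be generated. This is an illustration of the idea only, not Moodle's implementation; `segment_ends` and the example dates are assumptions.

```python
from datetime import datetime, timedelta


def segment_ends(course_start, course_end, segments):
    """Split the course duration into equal segments and return each segment's end time."""
    if segments < 1:
        raise ValueError("segments must be at least 1")
    if course_end <= course_start:
        raise ValueError("course_end must be after course_start")
    step = (course_end - course_start) / segments
    return [course_start + step * i for i in range(1, segments + 1)]


if __name__ == "__main__":
    start = datetime(2024, 1, 1)
    # Courses of different lengths are divided into the same number of segments.
    for weeks in (8, 16):
        ends = segment_ends(start, start + timedelta(weeks=weeks), 4)
        print(weeks, "week course:", [end.date().isoformat() for end in ends])
```

Because the segments are proportional to the course length, an 8-week course and a 16-week course both produce four prediction points, which is what lets them train the same model.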

Models output directory

This setting allows you to define a directory where machine learning backend data is stored. Make sure this directory exists and is writable by the web server. Moodle sites with multiple frontend nodes (a cluster) can use this setting to specify a directory shared across nodes. Machine learning backends can use this directory to store trained algorithms (their internal variables, weights and so on) and reuse them later to get predictions. The Moodle cron lock prevents multiple simultaneous executions of the analytics tasks that train machine learning algorithms and get predictions from them.
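The "exists and is writable" requirement can be checked with a few lines of standard-library Python. This is a sketch only: `is_usable_output_dir` is a hypothetical helper, the path in the example is invented, and the result reflects the permissions of whichever user runs the script, so it should be run as the web server user.

```python
import os


def is_usable_output_dir(path):
    """Return True if path is an existing directory the current user can write to."""
    # W_OK allows creating files; X_OK allows entering the directory.
    return os.path.isdir(path) and os.access(path, os.W_OK | os.X_OK)


if __name__ == "__main__":
    # Hypothetical path, used only for illustration.
    candidate = "/var/moodledata/models"
    print(candidate, "usable" if is_usable_output_dir(candidate) else "not usable")
```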

Scheduled tasks

Most analytics API processes are executed through scheduled tasks. These processes usually read the activity log table and can take some time to finish. You can find the Train models and Predict models scheduled tasks listed in Administration > Site administration > Server > Scheduled tasks. It is recommended that you edit the task schedules so they run nightly.

Defining roles

Moodle learning analytics makes use of a number of capabilities. These can be added or removed from roles at the site level or within certain contexts to customise who can view insights.

To receive notifications and view insights, a user must have the analytics:listinsights capability within the context used as the "Analysable" for the model. For example, the Students at risk of dropping out model operates within the context of a course. Insights will be generated for each enrolment within any course matching the criteria of the model (courses with a start date in the past and an end date in the future, with at least one teacher and student), and these insights will be sent to anyone with the listinsights capability in that course. By default, the roles of Teacher, Non-editing teacher, and Manager have this capability.
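The course criteria quoted above can be written out as a simple predicate. This is only a restatement of those criteria as a sketch, not the model's actual code; `course_is_eligible` and its parameters are hypothetical.

```python
from datetime import datetime


def course_is_eligible(start, end, teachers, students, now):
    """Check the stated criteria: started, not yet ended, at least one teacher and student."""
    return start < now < end and teachers >= 1 and students >= 1


if __name__ == "__main__":
    now = datetime(2024, 3, 1)
    running = course_is_eligible(datetime(2024, 1, 1), datetime(2024, 6, 1), 1, 20, now)
    finished = course_is_eligible(datetime(2023, 1, 1), datetime(2023, 6, 1), 1, 20, now)
    print("running course eligible:", running)
    print("finished course eligible:", finished)
```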

Some models (e.g. the No teaching model) generate insights at the site level. To receive insights from these models, the user must have a role assignment at the system level which includes the listinsights capability. By default, this is included in the Manager role if assigned at the site level.

Note: Site administrators do not automatically receive insight notifications, though they can choose to view details of any insight notifications on the system. To enable site administrators to receive notifications of insights, assign an additional role that includes the listinsights capability to the site administrator at the system level (e.g. the Manager role).