Workshop grading strategies: Difference between revisions

Edited by Mary Cooch (minor edit: changing school demo link)

Revision as of 10:44, 2 September 2019

Simply put, the selected grading strategy determines what the assessment form looks like and how the grade that a given assessment assigns to a submission is calculated from that form. Workshop ships with four standard grading strategies. More strategies can be developed as pluggable extensions.

Accumulative grading strategy

In this case, the assessment form consists of a set of criteria. Each criterion is graded separately using either a number grade (e.g. out of 100) or a scale (either a site-wide scale or a scale defined in the course). Each criterion can have its own weight. Reviewers can add comments to every assessed criterion.

When calculating the total grade for the submission, the grades for the individual criteria are first normalized to a range from 0% to 100%. The total grade given by an assessment is then calculated as the weighted mean of the normalized grades. Scales are treated as grades from 0 to M-1, where M is the number of scale items.

$$G_s = \frac{\sum_{i=1}^{N} \frac{g_i}{max_i} w_i}{\sum_{i=1}^{N} w_i}$$

where $g_i$ is the grade given to the i-th criterion, $max_i$ is the maximal possible grade of the i-th criterion, $w_i$ is the weight of the i-th criterion and $N$ is the number of criteria in the assessment form.

It is important to realize that the influence of a particular criterion is determined by its weight only, not by the grade type or range used. Suppose the form has three criteria: the first uses a 0-100 grade, the second a 0-20 grade and the third a three-item scale. If they all have the same weight, then giving grade 50 for the first criterion has the same impact as giving grade 10 for the second criterion, because both normalize to 50%.

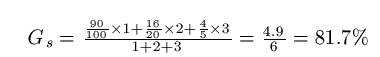

Take the case above as an example, but suppose that the third criterion uses a scale with 6 levels. An assessor gives grade 90 for the first criterion (or aspect 1), grade 16 for the second criterion and the scale item "9 very good" for the last criterion. The weights of the three criteria are 1, 2 and 3, respectively. The scale of the third criterion has 6 items, and "9 very good" is the second item when the items are ordered from highest to lowest, so it is mapped to 4. That is, in the formula above, $g_3 = 4$ and $max_3 = 5$. With $max_1 = 100$ and $max_2 = 20$, the final grade given by this assessment will be:

$$G_s = \frac{\frac{90}{100} \cdot 1 + \frac{16}{20} \cdot 2 + \frac{4}{5} \cdot 3}{1 + 2 + 3} = \frac{4.9}{6} \approx 82\%$$
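The weighted-mean calculation can also be sketched in a few lines of Python. This is only an illustration of the arithmetic described above, not Workshop's actual code; the names `Criterion`, `scale_item_to_grade` and `accumulative_grade` are hypothetical, and the 0-100 and 0-20 ranges for the first two criteria are taken from the earlier example.

```python
from typing import NamedTuple, Sequence


class Criterion(NamedTuple):
    grade: float      # g_i, the grade given by the reviewer
    max_grade: float  # max_i, the highest possible grade for the criterion
    weight: float     # w_i, the weight of the criterion


def scale_item_to_grade(rank_from_top: int, item_count: int) -> int:
    """Map a scale item to a grade in 0..item_count-1 (top item gets item_count-1)."""
    return item_count - rank_from_top


def accumulative_grade(criteria: Sequence[Criterion]) -> float:
    """Return the weighted mean of the normalized grades as a fraction in 0..1."""
    total_weight = sum(c.weight for c in criteria)
    if total_weight == 0:
        raise ValueError("at least one criterion needs a non-zero weight")
    weighted = sum(c.grade / c.max_grade * c.weight for c in criteria)
    return weighted / total_weight


# Worked example: the third criterion uses a 6-item scale, second item from the top.
g3 = scale_item_to_grade(rank_from_top=2, item_count=6)
example = [
    Criterion(grade=90, max_grade=100, weight=1),
    Criterion(grade=16, max_grade=20, weight=2),
    Criterion(grade=g3, max_grade=6 - 1, weight=3),
]
print(f"{accumulative_grade(example):.2%}")  # 81.67%
```

Because each grade is divided by its own maximum before weighting, only the weight controls how much a criterion contributes, regardless of whether it uses a 0-100 grade, a 0-20 grade or a scale.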

Comments

The assessment form is similar to the one used in the accumulative grading strategy, but no grades can be given, just comments. The total grade for the assessed submission is always set to 100%. This strategy can be effective in repetitive workflows in which reviewers first only comment on the submissions to provide initial feedback to the authors. Workshop is then switched back to the submission phase so that the authors can improve their submissions according to the comments. After that, the grading strategy can be changed to one that uses proper grading, and the submissions are assessed again using a different assessment form.

Number of errors

In Moodle 1.x, this was called the Error banded strategy. The assessment form consists of several assertions, each of which can be marked as passed or failed by the reviewer. Various words can be set to express the pass or failure state, e.g. Yes/No, Present/Missing, Good/Poor, etc.

The grade given by a particular assessment is calculated from the weighted count of negative assessment responses (failed assertions). Here, the weighted count means that a response with weight $w_i$ is counted $w_i$ times. Course facilitators define a mapping table that converts the number of failed assertions to a percent grade for the given submission. Zero failed assertions is always mapped to a 100% grade.

This strategy may be used to make sure that certain criteria were addressed in the submission. Examples of such assessment assertions are: Has fewer than 3 spelling errors, Has no formatting issues, Has creative ideas, Meets length requirements, etc. This assessment method is considered easier for reviewers to understand and deal with. It is therefore suitable even for younger participants or those just starting with peer assessment, while still producing quite objective results.

You can edit the original assessment form by following the steps below:

- Write the description of each assertion in the corresponding blank field. Then fill in the word for the error and the word for the success, and set the weight of each assertion. At this point, the grade mapping table is still blank.

- Click the ‘save and close’ button at the bottom of the page.

- Click the ‘Edit assessment form’ link in the shaded area titled setup phase in the upper part of the page and view the assessment form again. This time, the grade mapping table is available. (Note: Initially all of its fields are blank. You need to choose the right value from each list to make this grading strategy work properly.)

For example, if an assessment form contains three assertions:

| Assertion No. | Content | Words for pass/failure | Weight |
|---|---|---|---|
| 1 | Has the suitable title | Yes/No | 1 |
| 2 | Has creative ideas | Present/Miss | 2 |
| 3 | The abstract is well-written | Yes/No | 3 |

In the example above, suppose that a reviewer assesses a piece of work as Yes/Miss/Yes. The submission fails only the second assertion, whose weight is 2, so the weighted number of errors is 2. The grade mapping table then converts this count to a percent grade; in this example, 2 errors are mapped to 66%, so the grade for the submission given by this assessment is 66%. If the maximum grade for submission set in the grade settings is 100, the final grade for this submission given by this assessment is 66.
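The counting and lookup steps can be sketched as follows. This is a minimal illustration, not Workshop's implementation: the function names are hypothetical, and every entry in `mapping_table` other than the 2-errors-to-66% entry from the example is an invented placeholder, since the real table is defined by the course facilitator.

```python
from typing import Mapping, Sequence, Tuple


def weighted_error_count(responses: Sequence[Tuple[bool, int]]) -> int:
    """Sum the weights of failed assertions; each item is (passed, weight)."""
    return sum(weight for passed, weight in responses if not passed)


def errors_to_grade(errors: int, mapping: Mapping[int, float]) -> float:
    """Convert a weighted error count to a percent grade via the mapping table."""
    if errors == 0:
        return 100.0  # zero failed assertions is always mapped to 100%
    return mapping[errors]


# Hypothetical mapping table; only the entry for 2 errors comes from the example.
mapping_table = {1: 83.0, 2: 66.0, 3: 50.0, 4: 33.0, 5: 16.0, 6: 0.0}

# Assessment Yes/Miss/Yes for assertions with weights 1, 2 and 3.
responses = [(True, 1), (False, 2), (True, 3)]
errors = weighted_error_count(responses)
print(errors, errors_to_grade(errors, mapping_table))  # 2 66.0
```

Note that the table is indexed by the weighted count, so with weights 1, 2 and 3 it needs entries for up to 6 errors even though the form has only three assertions.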

Rubric

See the description of this scoring tool at Wikipedia. The rubric assessment form consists of a set of criteria. For each criterion, several ordered descriptive levels are provided. A number grade is assigned to each of these levels. The reviewer chooses which level answers/describes the given criterion best.

The final grade is aggregated as

$$G_s = \frac{\sum_{i=1}^{N} (g_i - min_i)}{\sum_{i=1}^{N} (max_i - min_i)}$$

where $g_i$ is the grade given to the i-th criterion, $min_i$ is the minimal possible grade of the i-th criterion, $max_i$ is the maximal possible grade of the i-th criterion and $N$ is the number of criteria in the rubric.

An example of a single criterion is: Overall quality of the paper, with the levels 5 - An excellent paper, 3 - A mediocre paper, 0 - A weak paper (the numbers represent the grades).

The assessment form can be rendered in two modes: either as a common grid or as a list. It is safe to switch the representation of the rubric at any time.

Example of calculation: consider a rubric with two criteria, each of which has four levels with the grades 1, 2, 3 and 4. The reviewer chooses the level with the grade 2 for the first criterion and the level with the grade 3 for the second criterion. Then the final grade is:

$$G_s = \frac{(2 - 1) + (3 - 1)}{(4 - 1) + (4 - 1)} = \frac{3}{6} = 50\%$$

Note that this calculation may differ from how you intuitively use a rubric. For example, if the reviewer in the previous example chose the level with the grade 1 for both criteria, the plain sum would be 2 points. But that is actually the lowest possible score, so it maps to the grade 0. To avoid confusion, it is recommended to always include a level with the grade 0 in the rubric definition.
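The effect of subtracting the minimum grade can be checked with a short sketch. The function name `rubric_grade` and the tuple layout are assumptions made for illustration only; the arithmetic follows the rubric aggregation described above.

```python
from typing import Sequence, Tuple


def rubric_grade(criteria: Sequence[Tuple[float, float, float]]) -> float:
    """Each item is (grade, min_grade, max_grade); returns a fraction in 0..1."""
    earned = sum(grade - lowest for grade, lowest, _ in criteria)
    span = sum(highest - lowest for _, lowest, highest in criteria)
    if span == 0:
        raise ValueError("every criterion has identical minimum and maximum grades")
    return earned / span


# Two criteria with levels 1..4; the reviewer picks grades 2 and 3.
print(rubric_grade([(2, 1, 4), (3, 1, 4)]))  # 0.5

# Choosing the lowest level everywhere gives 0, although the plain sum is 2 points.
print(rubric_grade([(1, 1, 4), (1, 1, 4)]))  # 0.0
```

When every criterion includes a level with the grade 0, the minimum terms vanish and the result equals the plain sum divided by the maximum sum, which matches the intuitive reading.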

Note on backwards compatibility: This strategy merges the legacy Rubric and Criterion strategies from Moodle 1.x into a single one. Conceptually, the legacy Criterion was just one dimension of Rubric. In Workshop 1.x, Rubric could have several criteria (categories) but was limited to a fixed scale with 0-4 points. On the other hand, the Criterion strategy in Workshop 1.9 could use a custom scale, but was limited to a single aspect of assessment. The new Rubric strategy combines the two. To mimic the legacy behaviour, old Workshop instances are automatically upgraded so that:

- The Criterion strategy from 1.9 is replaced with Rubric 2.0 using just one dimension.

- Rubric from 1.9 is replaced by Rubric 2.0 using a 0-4 point scale for every criterion.

In Moodle 1.9, a reviewer could suggest an optional adjustment to the final grade. This is no longer supported. It may be supported again in future versions as a standard feature for all grading strategies, not only rubric.

Experiencing Real Workshop

If you would like to try out a real workshop, please log in to the School demo Moodle (http://school.moodledemo.net/) with the username teacher and password moodle. There you can explore the different phases and the grading of a completed workshop with data (http://school.moodledemo.net/mod/workshop/view.php?id=651) on My home country.