Using analytics


Helen Foster (Analytics template)


{{Analytics}}

== Overview ==

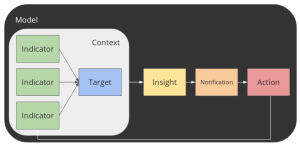

The Moodle Learning Analytics API is an open system that can become the basis for a very wide variety of models. Models can contain indicators (a.k.a. predictors), targets (the outcome we are trying to predict), insights (the predictions themselves), notifications (messages sent as a result of insights), and actions (offered to recipients of messages, which can become indicators in turn).


Moodle can support multiple prediction models at once, even within the same course. This can be used for A/B testing to compare the performance and accuracy of multiple models.

Moodle learning analytics supports two types of models.

* '''Machine-learning''' based models, including predictive models, make use of AI models trained using site history to detect or predict hidden aspects of the learning process.

* "'''Static'''" models use a simpler, rule-based system of detecting circumstances on the Moodle site and notifying selected users.

Moodle core ships with three models. Additional prediction models can be created by using the [[dev:Analytics API| Analytics API]] or by using the new web UI. Each model is based on the prediction of a single, specific "target," or outcome (whether desirable or undesirable), based on a number of selected indicators.

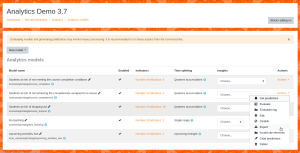

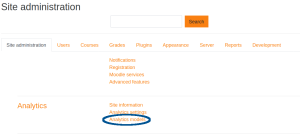

You can view and manage your system models from ''Site Administration > Analytics > Analytics models''.


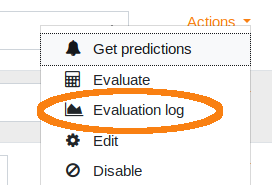

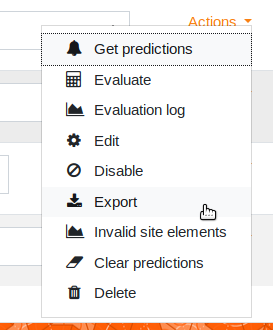

* '''Get predictions''' Train machine learning algorithms with the new data available on the system and get predictions for ongoing courses. ''Predictions are not limited to ongoing courses; this depends on the model.''

* '''View Insights''' Once you have trained a machine learning algorithm with the data available on the system, you will see insights (predictions) here for each "analysable." In the included model [[Students at risk of dropping out]], insights may be selected per course. ''Predictions are not limited to ongoing courses; this depends on the model.''

* '''Evaluate''' This is a resource-intensive process, and will not be visible when sites have the "onlycli" setting checked (default). See [[Using analytics#Evaluating models|Evaluating models]] for more information.

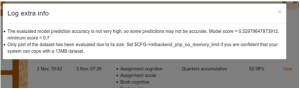

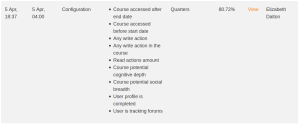

* '''Log''' View previous evaluation logs, including the model accuracy as well as other technical information generated by the machine learning backends, such as ROC curves, learning curve graphs, the TensorBoard log directory or the model's Matthews correlation coefficient. The information available will depend on the machine learning backend in use. [[File:log_info.png|thumb]]


* '''Edit''' You can edit the models by modifying the list of indicators or the time-splitting method. All previous predictions will be deleted when a model is modified. Models based on assumptions (static models) cannot be edited.

* '''Enable / Disable''' This allows the model to run training and prediction processes. The scheduled task that trains machine learning algorithms with the new data available on the system and gets predictions for ongoing courses skips disabled models. Previous predictions generated by disabled models are not available until the model is enabled again.

* '''Export''' Export your site training data to share it with your partner institutions or to use it on a new site. The Export action for models allows you to generate a CSV file containing model data about indicators and weights, without exposing any of your site-specific data. We will be asking for submissions of these model files to help evaluate the value of models on different kinds of sites.


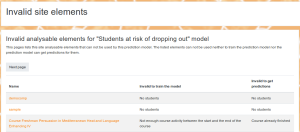

* '''Invalid site elements''' Reports on what elements in your site cannot be analysed by this model.


==== Students at risk of dropping out ====

The model [[Students at risk of dropping out]] predicts students who are at risk of non-completion (dropping out) of a Moodle course, based on low student engagement. In this model, the definition of "dropping out" is "no student activity in the final quarter of the course." The prediction model uses the [https://en.wikipedia.org/wiki/Community_of_inquiry Community of Inquiry] model of student engagement, consisting of three parts:

* [[Students at risk of dropping out#Cognitive depth|Cognitive presence]]


This prediction model is able to analyse and draw conclusions from a wide variety of courses, and apply those conclusions to make predictions about new courses. The model is not limited to making predictions about student success in exact duplicates of courses offered in the past. However, there are some limitations:

# This model requires a certain amount of in-Moodle data with which to make predictions. At the present time, only core Moodle activities are included in the indicator set (see below). Courses which do not include several core Moodle activities per “time slice” (depending on the time splitting method) will have poor predictive support in this model. This prediction model will be most effective with fully online, “hybrid” or “blended” courses with substantial online components.

# This prediction model assumes that courses have fixed start and end dates, and is not designed to be used with rolling enrollment courses. Models that support a wider range of course types will be included in future versions of Moodle. Because of this model design assumption, it is very important to set the start and end dates of each course properly to use this model. If the start and end dates of past and ongoing courses are not set properly, predictions cannot be accurate. Because the course end date field was only introduced in Moodle 3.2 and some courses may not have set a course start date in the past, we include a command line interface script:


==== Upcoming activities due ====

The static “upcoming activities due” model checks for activities with upcoming due dates and outputs to the user’s calendar page.


== Creating and editing models ==

New machine learning models can be created by using the Analytics API, by importing an exported model from another site, or by using the new web UI. [[File:new_model.png|thumb]] If you delete a "default" model (shipped with Moodle core) you can restore it from the Create menu. (Note: "static" models cannot be created using the web UI at this time.)

There are four components of a model that can be defined through the web UI:

=== Target ===

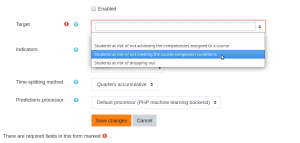

[[File:create_model_2.png|thumb]]Targets represent a “known good”: something about which we have very strong evidence of value. Targets must be designed carefully to align with the [[Curriculum theory|curriculum priorities]] of the institution. Each model has a single target. The “Analyser” (context in which targets will be evaluated) is automatically controlled by the Target selection. See [[Learning analytics targets]] for more information.

=== Indicators ===


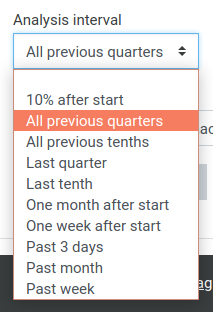

[[File:analysis_intervals.png|thumb]]Analysis intervals control how often the model will run to generate insights, and how much information will be included in each generation cycle. The two analysis intervals enabled by default for selection are “Quarters” and “Quarters accumulative.” Both options will cause models to execute four times: after the first, second, third and fourth quarters of the course (the final execution is used to evaluate the accuracy of the predictions against the actual outcome). The difference lies in how much information will be included. “Quarters” will only include information from the most recent quarter of the course in its predictions. “Quarters accumulative” will include the most recent quarter and all previous quarters, and tends to generate more accurate predictions (though it can take more time and memory to execute).
Moodle Learning Analytics also includes “Tenths” and “Tenths accumulative” options in core, which you can enable from the Analytics settings panel. These generate predictions more frequently.

* '''Single range''' indicates that predictions will be made once, but will take into account a range of time, e.g. one prediction at the end of a course. The prediction is made at the end of the range.

* '''Upcoming...''' indicates that the model generates an insight based on a snapshot of data at a given moment, e.g. the "no teaching" model looks to see if there are currently any teachers or students assigned to a course one week before the start of the term, and issues one insight warning the site administrator that no teaching is likely to occur in that empty course.

* '''All previous...''' (formerly "accumulative") and '''Last...''' methods differ in how much data is included in the prediction. Both "All previous quarters" and "Last quarter" predictions are made at the end of each quarter of a time span (e.g. a course), but in "Last quarter," only the information from the most recent quarter is included in the prediction, whereas in "All previous quarters" all information up to the present is included in the prediction.

'''Single range''' and '''No time splitting''' methods are not tied to fixed points in time such as the end of each quarter: they run during the next scheduled task execution, although models apply different restrictions (e.g. a course must be finished before it can be used for training, and must contain some data and students before it can be used to get predictions). 'Single range' and 'No splitting' are not appropriate for the Students at risk of dropping out model. They are intended to be used in models like 'No teaching' or 'Spammer user' that are designed to make only one prediction per eligible sample. For example, the 'No teaching' model uses the 'Single range' analysis interval, and its target class (the main PHP class of a model) only accepts courses that will start during the next week. Once a 'No teaching' insight has been provided for a course, no further 'No teaching' insights are provided for that course.

The difference between 'Single range' and 'No splitting' is that models analysed using 'Single range' are limited to the start and end dates of the analysable element (the course, in the Students at risk model), while models using 'No splitting' have no time constraints and use all data available in the system to calculate the indicators.


Each prediction processor may support multiple algorithms in the future.

=== Changing the model name ===

The model name is used to identify insights generated by the model and, by default, is the same as the target name. You can edit it by clicking the "pencil" icon next to the model name in the list of models:

[[File:edit_model_name.png|thumb]]

== Training models ==


At the present time, Moodle Learning Analytics only supports supervised models.

While we hope to include pre-trained models with the Moodle core installation in the future, at the current time we do not have large enough data sets to train a model for external use.

=== Training data ===


The training set is defined in the PHP code for the Target. Models can only be trained if a site contains enough data matching the training criteria. Most models will require Moodle log data for the time period covering the events being analysed. For example, the [[Students at risk of dropping out]] model can only be trained if there is log data covering student activity in the courses that meet the training criteria. It is possible to train a model on an "archive" system and then use the model on a production system.

== Evaluating models ==

[[File:model_menu_evaluate.png|thumb]]This is a '''manual''', resource-intensive process, and will not be visible from the Web UI when sites have the "onlycli" setting checked (default).

This process can be executed independently of enabling or training the model. It causes Moodle to assemble the training data available on the site, calculate all the indicators and the target, and pass the resulting dataset to the machine learning backend. The evaluation process then splits the dataset into training data and testing data and calculates the model's accuracy. Note that the evaluation process uses all information available on the site, even if it is very old. Because of this, the accuracy returned by the evaluation process may be lower than the real model accuracy: the site state changes over time, so indicators are calculated most reliably right after the training data becomes available. The metric used to describe accuracy is a weighted ''[https://en.wikipedia.org/wiki/F1_score F1 score]''.
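As an illustration of the metric, the Python sketch below computes a weighted F1 score using the common definition: an F1 score per class, averaged with each class weighted by its number of true samples (the same convention as scikit-learn's <code>average="weighted"</code> option). It is not taken from any Moodle machine learning backend; the function name and the labels 1 = "at risk", 0 = "not at risk" are assumptions for the example, and the predictions stand in for those made on the testing part of the dataset.

```python
from collections import Counter
from typing import Sequence


def weighted_f1(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """F1 score per class, averaged with weights equal to class support."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("need two non-empty label lists of equal length")
    pairs = list(zip(y_true, y_pred))
    weighted_sum = 0.0
    for label, support in Counter(y_true).items():
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / support  # support > 0 for every label in y_true
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        weighted_sum += f1 * support
    return weighted_sum / len(y_true)


# 1 = "at risk", 0 = "not at risk"; one at-risk student was missed.
print(round(weighted_f1([1, 1, 0, 0], [1, 0, 0, 0]), 4))  # → 0.7333
```

Weighting by support means that a model which only performs well on the majority class still receives a noticeably lower score than a model that also identifies the minority class.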

''We recommend that all machine-learning models be evaluated before being enabled on a production site.''

To force the model evaluation process to run from the command line:

$ php admin/tool/analytics/cli/evaluate_model.php

Use the --help option to list the available parameters.

If you are connecting to your Moodle server remotely, you will probably want to run the process in the background and detach it, so it will continue to run if you log out of your session (or your connection times out). Here is an example on Linux using bash:

# php evaluate_model.php --modelid=4 --analysisinterval='\core\analytics\time_splitting\deciles_accum' > eval.log &

# jobs

[1]+ Running php evaluate_model.php --modelid=4 --analysisinterval='\core\analytics\time_splitting\deciles_accum' > eval.log &

# disown -h %1

This will allow the process to continue to run after the remote shell disconnects. See the documentation for your OS and shell for more details.

On small sites, you can uncheck "onlycli" in the Analytics settings page, and you can then evaluate models from the Analytics models page. However, this is not feasible with production sites.

=== Review evaluation results ===


[[es:Uso de analítica]]

[[de:Analytics nutzen]]

Latest revision as of 14:36, 4 June 2021

Overview

The Moodle Learning Analytics API is an open system that can become the basis for a very wide variety of models. Models can contain indicators (a.k.a. predictors), targets (the outcome we are trying to predict), insights (the predictions themselves), notifications (messages sent as a result of insights), and actions (offered to recipients of messages, which can become indicators in turn).

Most learning analytics models are not enabled by default. Enabling models for use should be done after considering the institutional goals the models are meant to support. When selecting or creating an analytics model, the following questions are important:

- What outcome do we want to predict? Or what process do we want to detect? (Positive or negative)

- How will we detect that outcome/process?

- What clues do we think might help us predict that outcome/process?

- What should we do if the outcome/process is very likely? Very unlikely?

- Who should be notified? What kind of notification should be sent?

- What opportunities for action should be provided on notification?

Moodle can support multiple prediction models at once, even within the same course. This can be used for A/B testing to compare the performance and accuracy of multiple models.

Moodle learning analytics supports two types of models.

- Machine-learning based models, including predictive models, make use of AI models trained using site history to detect or predict hidden aspects of the learning process.

- "Static" models use a simpler, rule-based system of detecting circumstances on the Moodle site and notifying selected users.

Moodle core ships with three models. Additional prediction models can be created by using the Analytics API or by using the new web UI. Each model is based on the prediction of a single, specific "target," or outcome (whether desirable or undesirable), based on a number of selected indicators.

You can view and manage your system models from Site Administration > Analytics > Analytics models.

Existing models

Moodle core ships with three models: Students at risk of dropping out, and the static models Upcoming activities due and No teaching. Other models can be added to your system by installing plugins or by using the web UI (see below). Existing models can be examined and altered from the "Analytics models" page in Site administration:

These are some of the actions you can perform on an existing model:

- Get predictions Train machine learning algorithms with the new data available on the system and get predictions for ongoing courses. Predictions are not limited to ongoing courses; this depends on the model.

- View Insights Once you have trained a machine learning algorithm with the data available on the system, you will see insights (predictions) here for each "analysable." In the included model Students at risk of dropping out, insights may be selected per course. Predictions are not limited to ongoing courses; this depends on the model.

- Evaluate This is a resource-intensive process, and will not be visible when sites have the "onlycli" setting checked (default). See the Evaluating models section of Using analytics for more information.

- Log View previous evaluation logs, including the model accuracy as well as other technical information generated by the machine learning backends, such as ROC curves, learning curve graphs, the TensorBoard log directory or the model's Matthews correlation coefficient. The information available will depend on the machine learning backend in use.

- Edit You can edit the models by modifying the list of indicators or the time-splitting method. All previous predictions will be deleted when a model is modified. Models based on assumptions (static models) cannot be edited.

- Enable / Disable This allows the model to run training and prediction processes. The scheduled task that trains machine learning algorithms with the new data available on the system and gets predictions for ongoing courses skips disabled models. Previous predictions generated by disabled models are not available until the model is enabled again.

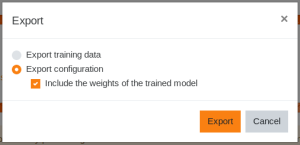

- Export Export your site training data to share it with your partner institutions or to use it on a new site. The Export action for models allows you to generate a CSV file containing model data about indicators and weights, without exposing any of your site-specific data. We will be asking for submissions of these model files to help evaluate the value of models on different kinds of sites.

- Invalid site elements Reports on what elements in your site cannot be analysed by this model.

- Clear predictions Clears all the model predictions and training data.
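For a binary target, the Matthews correlation coefficient shown by the Log action is calculated from the four cells of the confusion matrix. The Python sketch below uses the standard formula; the labels (1 = positive, 0 = negative) and the choice to return 0.0 when a row or column of the matrix is empty are assumptions, and the function is illustrative rather than code from a Moodle backend.

```python
import math
from typing import Sequence


def matthews_corrcoef(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Matthews correlation coefficient for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    if denominator == 0:
        return 0.0  # convention when any marginal total is zero
    return (tp * tn - fp * fn) / denominator


# Two students at risk (1), two not at risk (0); one at-risk student missed.
print(round(matthews_corrcoef([1, 1, 0, 0], [1, 0, 0, 0]), 4))  # → 0.5774
```

The coefficient ranges from -1 (total disagreement) through 0 (no better than chance) to 1 (perfect prediction), which makes it informative even when one class, such as students at risk, is much rarer than the other.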

Core models

Students at risk of dropping out

The model Students at risk of dropping out predicts students who are at risk of non-completion (dropping out) of a Moodle course, based on low student engagement. In this model, the definition of "dropping out" is "no student activity in the final quarter of the course." The prediction model uses the Community of Inquiry model of student engagement, consisting of three parts:

- Cognitive presence

- Social presence

- Teaching presence

This prediction model is able to analyse and draw conclusions from a wide variety of courses, and apply those conclusions to make predictions about new courses. The model is not limited to making predictions about student success in exact duplicates of courses offered in the past. However, there are some limitations:

- This model requires a certain amount of in-Moodle data with which to make predictions. At the present time, only core Moodle activities are included in the indicator set (see below). Courses which do not include several core Moodle activities per “time slice” (depending on the time splitting method) will have poor predictive support in this model. This prediction model will be most effective with fully online, “hybrid” or “blended” courses with substantial online components.

- This prediction model assumes that courses have fixed start and end dates, and is not designed to be used with rolling enrollment courses. Models that support a wider range of course types will be included in future versions of Moodle. Because of this model design assumption, it is very important to set the start and end dates of each course properly to use this model. If the start and end dates of past and ongoing courses are not set properly, predictions cannot be accurate. Because the course end date field was only introduced in Moodle 3.2 and some courses may not have set a course start date in the past, we include a command line interface script:

$ php admin/tool/analytics/cli/guess_course_start_and_end.php

This script attempts to estimate past course start and end dates by looking at student enrolments and students' activity logs. After running this script, please check that the estimated start and end dates are reasonably correct.
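To see why correct course dates matter, here is a minimal Python sketch of the "dropping out" definition used by this model (no student activity in the final quarter of the course). It assumes a student's activity is available as a list of timestamps; <code>dropped_out</code> is a hypothetical helper, not the target class shipped with Moodle.

```python
from typing import Iterable


def dropped_out(activity_times: Iterable[int], course_start: int,
                course_end: int) -> bool:
    """Return True if a student has no activity in the course's final quarter.

    Hypothetical helper illustrating the definition above. If the course
    dates are wrong, the "final quarter" covers the wrong period and the
    label becomes meaningless.
    """
    if course_end <= course_start:
        raise ValueError("course end date must be after its start date")
    final_quarter_start = course_start + 3 * (course_end - course_start) / 4
    return not any(final_quarter_start <= t <= course_end
                   for t in activity_times)


# Course from day 0 to day 100 (days instead of timestamps),
# so the final quarter is days 75 to 100.
print(dropped_out([10, 50, 80], 0, 100))  # → False (active on day 80)
print(dropped_out([10, 50, 70], 0, 100))  # → True (no activity after day 75)
```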

Upcoming activities due

The static “upcoming activities due” model checks for activities with upcoming due dates and outputs to the user’s calendar page.

No teaching

This model's insights will inform site managers of which courses with an upcoming start date will not have teaching activity. This is a simple "static" model and it does not use a machine learning backend to return predictions. It bases the predictions on assumptions, e.g. there is no teaching if there are no students.
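Because "No teaching" is a static model, its logic is a rule rather than a trained algorithm. The Python sketch below shows the general shape of such a rule; the <code>Course</code> record, the function name and the exact conditions (no students or no teachers, a start date within the next week, one insight per course) are assumptions drawn from the descriptions on this page, not Moodle's actual target class.

```python
from dataclasses import dataclass
from typing import List, Set

WEEK = 7 * 24 * 60 * 60  # one week in seconds


@dataclass
class Course:
    """Hypothetical course summary, not Moodle's database schema."""
    id: int
    start: int     # course start date as a Unix timestamp
    students: int  # number of enrolled students
    teachers: int  # number of enrolled teachers


def no_teaching_insights(courses: List[Course], now: int,
                         already_notified: Set[int]) -> List[int]:
    """Return the ids of courses that should get a "No teaching" insight.

    Rule-based sketch: only courses starting within the next week are
    checked, a course with no students or no teachers is flagged, and each
    course is flagged at most once (already_notified is updated in place).
    """
    flagged = []
    for course in courses:
        starts_soon = now <= course.start <= now + WEEK
        empty = course.students == 0 or course.teachers == 0
        if starts_soon and empty and course.id not in already_notified:
            flagged.append(course.id)
            already_notified.add(course.id)
    return flagged


now = 1_700_000_000
courses = [
    Course(id=1, start=now + 3600, students=0, teachers=1),
    Course(id=2, start=now + 3600, students=25, teachers=2),
    Course(id=3, start=now + 30 * 24 * 3600, students=0, teachers=0),
]
notified: Set[int] = set()
print(no_teaching_insights(courses, now, notified))  # → [1]
print(no_teaching_insights(courses, now, notified))  # → [] (already notified)
```

Course 3 is also empty, but it is not flagged yet because it starts more than a week from now.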

Creating and editing models

New machine learning models can be created by using the Analytics API, by importing an exported model from another site, or by using the new web UI.

If you delete a "default" model (shipped with Moodle core) you can restore it from the Create menu. (Note: "static" models cannot be created using the web UI at this time.)

There are four components of a model that can be defined through the web UI:

Target

Targets represent a “known good”: something about which we have very strong evidence of value. Targets must be designed carefully to align with the curriculum priorities of the institution. Each model has a single target. The “Analyser” (context in which targets will be evaluated) is automatically controlled by the Target selection. See Learning analytics targets for more information.

Indicators

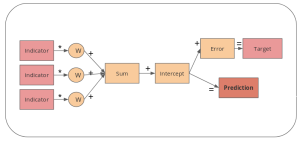

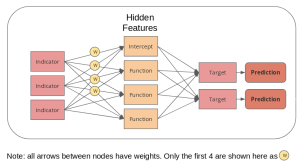

Indicators are data points that may help to predict targets. We are free to add many indicators to a model to find out if they predict a target; the only limit is that the data must be available within Moodle and must have a connection to the context of the model (e.g. the user, the course, etc.). The machine learning “training” process will determine how much weight to give to each indicator in the model.

We do want to make sure any indicators we include in a production model have a clear purpose and can be interpreted by participants, especially if they are used to make prescriptive or diagnostic decisions.

Indicators are constructed from data, but the data points need to be processed to make consistent, reusable indicators. In many cases, events are counted or combined in some way, though other ways of defining indicators are possible and will be discussed later. How the data points are processed involves important assumptions that affect the indicators. In particular, indicators can be absolute, meaning that the value of the indicator stays the same no matter what other samples are in the context, or relative, meaning that the indicator compares the sample to others in the context.
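The difference between absolute and relative indicators can be seen in a small Python sketch. Forum post counts and the "fraction of the most active student" scaling are assumptions chosen for illustration; the functions are hypothetical and do not reflect how Moodle's core indicators are calculated.

```python
from typing import Dict


def absolute_indicator(posts: Dict[str, int], student: str) -> int:
    """Absolute: depends only on the student's own activity."""
    return posts[student]


def relative_indicator(posts: Dict[str, int], student: str) -> float:
    """Relative: the student's activity compared with others in the course,
    here as a fraction of the most active student's count (0.0 if nobody
    has posted)."""
    top = max(posts.values())
    return posts[student] / top if top else 0.0


forum_posts = {"ana": 4, "ben": 8}
print(absolute_indicator(forum_posts, "ana"), relative_indicator(forum_posts, "ana"))
# → 4 0.5
forum_posts["cai"] = 16  # a very active student joins the same course
print(absolute_indicator(forum_posts, "ana"), relative_indicator(forum_posts, "ana"))
# → 4 0.25
```

Ana's own activity has not changed, so the absolute value stays at 4, while the relative value halves because the context now contains a more active student.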

See Learning analytics indicators for more information.

Analysis intervals

Analysis intervals control how often the model will run to generate insights, and how much information will be included in each generation cycle. The two analysis intervals enabled by default for selection are “Quarters” and “Quarters accumulative.” Both options will cause models to execute four times: after the first, second, third and fourth quarters of the course (the final execution is used to evaluate the accuracy of the predictions against the actual outcome). The difference lies in how much information will be included. “Quarters” will only include information from the most recent quarter of the course in its predictions. “Quarters accumulative” will include the most recent quarter and all previous quarters, and tends to generate more accurate predictions (though it can take more time and memory to execute). Moodle Learning Analytics also includes “Tenths” and “Tenths accumulative” options in core, which you can enable from the Analytics settings panel. These generate predictions more frequently.

- Single range indicates that predictions will be made once, but will take into account a range of time, e.g. one prediction at the end of a course. The prediction is made at the end of the range.

- Upcoming... indicates that the model generates an insight based on a snapshot of data at a given moment, e.g. the "no teaching" model looks to see if there are currently any teachers or students assigned to a course one week before the start of the term, and issues one insight warning the site administrator that no teaching is likely to occur in that empty course.

- All previous... (formerly "accumulative") and Last... methods differ in how much data is included in the prediction. Both "All previous quarters" and "Last quarter" predictions are made at the end of each quarter of a time span (e.g. a course), but in "Last quarter," only the information from the most recent quarter is included in the prediction, whereas in "All previous quarters" all information up to the present is included in the prediction.
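The difference between "Last quarter" and "All previous quarters" can be sketched as follows. This is an illustrative Python sketch, not Moodle's time-splitting code; `split_ranges` and `data_window` are hypothetical names, and the course is assumed to run for exactly 100 days.

```python
from datetime import datetime, timedelta


def split_ranges(start: datetime, end: datetime, parts: int = 4):
    """Split [start, end) into `parts` equal ranges ("quarters" when parts=4,
    "tenths" when parts=10). A prediction is made at the end of each range."""
    step = (end - start) / parts
    return [(start + i * step, start + (i + 1) * step) for i in range(parts)]


def data_window(ranges, index: int, all_previous: bool):
    """Time window of data used for the prediction made at the end of ranges[index]."""
    range_start, range_end = ranges[index]
    if all_previous:  # e.g. "All previous quarters" (formerly "accumulative")
        return ranges[0][0], range_end
    return range_start, range_end  # e.g. "Last quarter"


course_start = datetime(2024, 1, 1)
course_end = course_start + timedelta(days=100)
quarters = split_ranges(course_start, course_end)
for i in range(len(quarters)):
    print(i + 1, data_window(quarters, i, False), data_window(quarters, i, True))
```

For this course, the prediction made at the end of the second quarter uses data from 26 January to 20 February 2024 with "Last quarter", but from 1 January to 20 February with "All previous quarters". Passing `parts=10` gives the equivalent "tenths" ranges.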

'Single range' and 'No splitting' analysis intervals do not have time constraints. They run during the next scheduled task execution, although models apply their own restrictions (e.g. a course must have finished before it can be used for training, and must contain some data and enrolled students before it can be used to get predictions). 'Single range' and 'No splitting' are not appropriate for models such as Students at risk of dropping out, which need repeated predictions as a course progresses. They are intended for models like 'No teaching' or 'Spammer user' that are designed to make only one prediction per eligible sample. For example, the 'No teaching' model uses the 'Single range' analysis interval, and its target class (the main PHP class of a model) only accepts courses that will start during the next week. Once a 'No teaching' insight has been provided for a course, no further 'No teaching' insights are provided for that course.

The difference between 'Single range' and 'No splitting' is that models analysed using 'Single range' are limited to the start and end dates of the analysable element (e.g. the course, in the Students at risk model), while 'No splitting' has no time constraints: all data available in the system is used to calculate the indicators.
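A rough sketch of the two behaviours described above, assuming events are represented only by their timestamps. The function names and the in-memory `already_predicted` set are hypothetical stand-ins for illustration; Moodle keeps track of which samples already have predictions itself.

```python
from datetime import datetime


def events_for_indicators(event_times, method, start=None, end=None):
    """Select the events used to calculate indicators for one analysable element."""
    if method == "single_range":
        # Limited to the analysable element's own start and end dates.
        return [t for t in sorted(event_times) if start <= t <= end]
    if method == "no_splitting":
        # No time constraints: all data available in the system is used.
        return sorted(event_times)
    raise ValueError(f"unknown method: {method}")


already_predicted: set[int] = set()


def predict_once(sample_id: int) -> bool:
    """True the first time a sample is seen, False afterwards (one insight per sample)."""
    if sample_id in already_predicted:
        return False
    already_predicted.add(sample_id)
    return True
```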

Note: Although the examples above refer to courses, analysis intervals can be used on any analysable element. For example, enrolments can have start and end dates, so an analysis interval could be applied to generate predictions about aspects of an enrolment. For analysable elements with no start and end dates, different analysis intervals would be needed. For example, a "weekly" analysis interval could be applied to a model intended to predict whether a user is likely to log in to the system in the future, on the basis of activity in the previous week.
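A weekly interval for the log-in example could look like this minimal Python sketch. The weekly interval and all names here are assumptions for illustration; each training pair combines one indicator (logged in this week) with the target (logged in the following week).

```python
from datetime import datetime, timedelta

WEEK = timedelta(days=7)


def weekly_windows(first: datetime, now: datetime):
    """Consecutive complete 7-day windows from `first` up to `now`."""
    windows = []
    start = first
    while start + WEEK <= now:
        windows.append((start, start + WEEK))
        start += WEEK
    return windows


def logged_in(login_times, window) -> bool:
    window_start, window_end = window
    return any(window_start <= t < window_end for t in login_times)


def training_pairs(login_times, windows):
    """(logged in this week, logged in next week) for each pair of consecutive weeks."""
    flags = [logged_in(login_times, w) for w in windows]
    return list(zip(flags, flags[1:]))
```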

Predictions processor

This setting controls which machine learning backend and algorithm will be used to estimate the model. Moodle currently supports two predictions processors:

- PHP machine learning backend - implements logistic regression using php-ml (contributed by Moodle)

- Python machine learning backend - implements a feed-forward neural network with a single hidden layer, using TensorFlow.
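To illustrate what "estimating the model" means for the logistic regression algorithm, here is a minimal logistic regression in plain Python, trained by batch gradient descent on the log-loss. It is not php-ml's implementation; the learning rate, the number of epochs and the six labelled samples (two indicator values each, label 1 meaning the student dropped out) are assumptions chosen for illustration.

```python
import math


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train(X, y, learning_rate=0.5, epochs=2000):
    """Fit weights and bias by batch gradient descent on the log-loss."""
    n = len(X)
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for xi, yi in zip(X, y):
            error = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j, xj in enumerate(xi):
                grad_w[j] += error * xj
            grad_b += error
        w = [wj - learning_rate * gj / n for wj, gj in zip(w, grad_w)]
        b -= learning_rate * grad_b / n
    return w, b


def predict(w, b, x) -> int:
    return 1 if sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) >= 0.5 else 0


# Hypothetical samples: two indicator values in [-1, 1]; label 1 = dropped out.
X = [[-1.0, -0.8], [-0.6, -1.0], [-0.9, -0.5], [0.7, 0.9], [1.0, 0.4], [0.5, 1.0]]
y = [1, 1, 1, 0, 0, 0]
weights, bias = train(X, y)
```

The learned weights play the role of the indicator weights that the training process determines: both come out negative here, so low indicator values push the prediction towards "dropped out".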

You can only choose from the predictions processors enabled on your site.

Each prediction processor may support multiple algorithms in the future.

Changing the model name

The model name is used to identify insights generated by the model and, by default, is the same as the Target name. You can edit it by clicking the "pencil" icon next to the model name in the list of models.

Training models

Machine-learning based models require a training process using previous data from the site. "Static" models make use of sets of pre-defined rules, and do not need to be trained.

There are two main categories of machine-learning based analytics models: supervised and unsupervised.

- Supervised models must be trained by using a data set with the target values already identified. For example, if the model will predict course completion, the model must be trained on a set of courses and enrolments with known completion status.

- Unsupervised models look for patterns in existing data, e.g. grouping students based on similarities in their behavior in courses.

At the present time, Moodle Learning Analytics only supports supervised models.

While we hope to include pre-trained models with the Moodle core installation in the future, at the current time we do not have large enough data sets to train a model for external use.

Training data

The model code includes criteria for "training" and "prediction" data sets. For example, only courses with enrolled students and an end date in the past can be used to train the Students at risk of dropping out model, because it is impossible to determine whether a student dropped out until a course has ended. On the other hand, for this model to make predictions, there must be a course with students enrolled that has started, but not yet ended.

The training set is defined in the PHP code for the Target. Models can only be trained if a site contains enough data matching the training criteria. Most models will require Moodle log data for the time period covering the events being analysed. For example, the Students at risk of dropping out model can only be trained if there is log data covering student activity in the courses that meet the training criteria. It is possible to train a model on an "archive" system and then use the model on a production system.
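The training and prediction criteria for the Students at risk of dropping out model can be sketched as two filters. This simplified Python sketch assumes a course is described only by its start date, end date and number of enrolled students; the real target class applies its own, more detailed checks, and the `Course` type and function names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Course:
    name: str
    start: datetime
    end: datetime
    enrolled_students: int


def usable_for_training(course: Course, now: datetime) -> bool:
    # The outcome (dropped out or not) is only known once the course has ended.
    return course.enrolled_students > 0 and course.end < now


def usable_for_prediction(course: Course, now: datetime) -> bool:
    # Predictions need a course that has started but not yet ended.
    return course.enrolled_students > 0 and course.start <= now < course.end


now = datetime(2024, 6, 1)
courses = [
    Course("Biology 2023", datetime(2023, 9, 1), datetime(2023, 12, 15), 40),
    Course("Biology 2024", datetime(2024, 2, 1), datetime(2024, 7, 1), 35),
    Course("Empty sandbox", datetime(2023, 1, 1), datetime(2023, 3, 1), 0),
]
training_set = [c.name for c in courses if usable_for_training(c, now)]
prediction_set = [c.name for c in courses if usable_for_prediction(c, now)]
```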

Evaluating models

This is a manual, resource-intensive process, and will not be visible from the Web UI when sites have the "onlycli" setting checked (default).

This process can be executed independently of enabling or training the model. It causes Moodle to assemble the training data available on the site, calculate all the indicators and the target, and pass the resulting dataset to the machine learning backend. The process then splits the dataset into training data and testing data and calculates the model's accuracy. Note that the evaluation process uses all information available on the site, even if it is very old. Because the site state changes over time, indicators are calculated most reliably immediately after the training data becomes available; as a result, the accuracy returned by the evaluation process may be lower than the real model accuracy. The metric used to describe accuracy is a weighted F1 score.
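The split-then-score step can be sketched as follows. This is a minimal Python illustration, not the backends' code: the 80/20 holdout split and fixed seed are assumptions, and `weighted_f1` uses the common definition in which each class's F1 score is weighted by that class's share of the true labels.

```python
import random
from collections import Counter


def split_dataset(rows, test_fraction=0.2, seed=0):
    """Shuffle, then hold out a fraction of the rows for testing."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_test = round(len(rows) * test_fraction)
    return rows[: len(rows) - n_test], rows[len(rows) - n_test :]


def weighted_f1(y_true, y_pred) -> float:
    """F1 per class, averaged with each class weighted by its share of y_true."""
    support = Counter(y_true)
    total = 0.0
    for label, count in support.items():
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
        fn = count - tp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        total += f1 * count / len(y_true)
    return total


# Hypothetical test set: 1 = dropped out, 0 = completed.
y_true = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 0, 0, 0, 1]
score = weighted_f1(y_true, y_pred)
```

Here the model finds two of the three dropouts and raises one false alarm, so the score is 0.3 × 2/3 + 0.7 × 6/7 = 0.8.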

We recommend that all machine-learning models be evaluated before being enabled on a production site.

To force the model evaluation process to run from the command line, run the following from the Moodle root directory:

```shell
$ php admin/tool/analytics/cli/evaluate_model.php
```

Use the --help option to see parameters.

If you are connecting to your Moodle server remotely, you will probably want to run the process in the background and detach it, so it will continue to run if you log out of your session (or your connection times out). Here is an example on Linux using bash:

```shell
$ php admin/tool/analytics/cli/evaluate_model.php --modelid=4 --analysisinterval='\core\analytics\time_splitting\deciles_accum' > eval.log 2>&1 &
$ jobs
[1]+  Running                 php admin/tool/analytics/cli/evaluate_model.php --modelid=4 --analysisinterval='\core\analytics\time_splitting\deciles_accum' > eval.log 2>&1 &
$ disown -h %1
```

This will allow the process to continue to run after the remote shell disconnects. See the documentation for your OS and shell for more details.

On small sites, you can uncheck "onlycli" in the Analytics settings page, and you can then evaluate models from the Analytics models page. However, this is not feasible with production sites.

Review evaluation results

You can review the results of the model evaluation process by accessing the evaluation log.

Check for warnings about evaluation completion, model accuracy, and model variability.

You can also check the invalid site elements list to verify which site elements were included or excluded in the analysis. If you see a large number of unexpected elements in this report, it may mean that you need to check your data. For example, if courses don't have appropriate start and end dates set, or enrolment data has been purged, the system may not be able to include data from those courses in the model training process.

Exporting and Importing models

Models can also be exported from one site and imported to another.

Exporting models

You can export the data used to train the model, or the model configuration and the weights of the trained model.

Note: the model weights are completely anonymous and contain no personally identifiable data. This means it is safe to share them with researchers without worrying about privacy regulations.

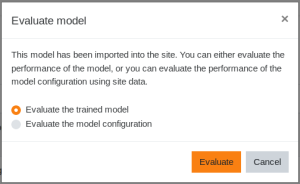

Importing models

When a model is imported with weights, the site administrator has the option to evaluate the trained model using site data, or to evaluate the model configuration by re-training it using the current site data.