Analytics: Difference between revisions

Revision as of 19:17, 24 June 2019

Overview

Beginning in version 3.4, Moodle core implements open source, transparent next-generation learning analytics using machine learning backends that go beyond simple descriptive analytics to provide predictions of learner success, and ultimately diagnosis and prescriptions (advisements) to learners and teachers.

What are learning analytics?

Learning analytics are software algorithms that are used to predict or detect unknown aspects of the learning process, based on historical data and current behavior. There are four main categories of learning analytics:

- descriptive (what happened?)

- predictive (what will happen next?)

- diagnostic (why did it happen?)

- prescriptive (do this to improve)

Most commercial solutions are descriptive only. Those that are predictive or proactive make certain assumptions about learning that don’t apply to everyone.

Analytics vs. reporting

Moodle provides a variety of built-in reports based on log data, but they are primarily descriptive in nature -- they tell participants what happened, but not why, and they don’t predict outcomes or advise participants how to improve outcomes. Log entries, while very detailed, are not in themselves descriptive of the learning process. They tell us “who,” “what,” and “when,” but not “why” or “how well.” Much more context is needed around each micro-action to develop a pattern of engagement.

Many third-party plugins also exist for Moodle that provide descriptive analytics. There are also integrations with third-party off-site reporting solutions. Again, these primarily provide descriptive analytics that rely on human judgment to interpret reports and generate predictions and prescriptions.

The system can be easily extended with new custom models, based on reusable targets, indicators, and other components. For more information, see the Analytics API developer documentation.

Often in the past, learning analytics systems have attempted to analyze past activities to predict future activities in real time. With Moodle Learning Analytics, we are more ambitious. We believe a full learning analytics solution will help us not only predict events, but change them to be more positive.

Features

- Three built-in prediction models: "Students at risk of dropping out", "Upcoming events" and "No teaching".

- A set of student engagement indicators based on the Community of Inquiry.

- Built-in tools to evaluate models against your site's data

- Proactive notifications using Events

- Instructors can easily send messages to students identified by the model, or jump to the Outline report for that student for more detail about student activity

- An API to build indicators and prediction models for third-party Moodle plugins

- Machine learning backend plugin type - supports PHP and Python, and can be extended to implement other ML backends

Note: PHP 7.x is required.

Limitations

- Models included in this release must be "trained" on a site with previous completed courses, ideally using the Moodle course completion feature. The current models cannot make predictions on a site until this is done.

- Models must be designed and selected to match the educational priorities of the institution.

Settings

You can access Analytics settings from Site administration > Analytics > Analytics settings. See Analytics settings for more detail.

Site information

Site information will be used to help learning analytics models take characteristics of the institution into account. This information is also reported as part of site data collection when you register your site. This will allow HQ to understand which areas in learning analytics are seeing the most use and prioritize development resources appropriately.

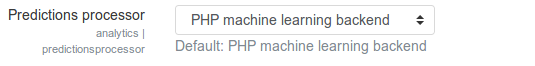

Predictions processor

Predictions processors are the machine learning backends that process the datasets generated from the calculated indicators and targets and return predictions. Moodle core includes two predictions processors:

- The PHP processor is the default. There are no other system requirements to use this processor.

- The Python processor is more powerful and generates graphs that explain the model performance. It requires setting up extra tools: Python itself (https://wiki.python.org/moin/BeginnersGuide/Download) and the moodlemlbackend Python package.

pip install moodlemlbackend

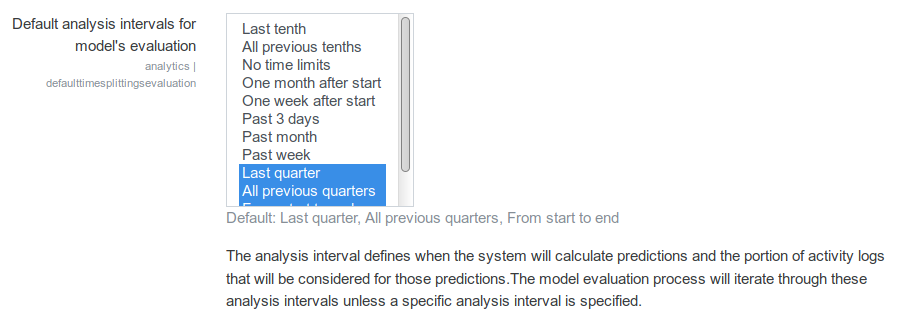

Analysis intervals

Analysis intervals determine how often insights will be generated, and how much information to use for each calculation. Using proportional analysis intervals allows courses of different lengths to be used to train a single model.

Each analysis interval divides the course duration into segments. At the end of each defined segment, the predictions engine will run and generate insights. It is recommended that you only enable the analysis intervals you are interested in using; the evaluation process will iterate through all enabled analysis intervals, so the more analysis intervals enabled, the slower the evaluation process will be.

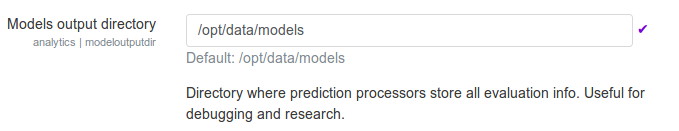

Models output directory

This setting allows you to define a directory where machine learning backends store their data. Be sure this directory exists and is writable by the web server. Moodle sites with multiple frontend nodes (a cluster) can use this setting to specify a directory shared across nodes. Machine learning backends can use this directory to store trained algorithms (their internal state, such as learned weights) so they can be reused later to get predictions. The Moodle cron lock prevents multiple simultaneous executions of the analytics tasks that train machine learning algorithms and get predictions from them.

Scheduled tasks

Most analytics API processes are executed through scheduled tasks. These processes usually read the activity log table and can require some time to finish. You can find the Train models and Predict models scheduled tasks listed in Administration > Site administration > Server > Scheduled tasks. It is recommended that you edit the task schedules so that they run nightly.

Using analytics

The Moodle Learning Analytics API is an open system that can become the basis for a very wide variety of models. Models can contain indicators (a.k.a. predictors), targets (the outcome we are trying to predict), insights (the predictions themselves), notifications (messages sent as a result of insights), and actions (offered to recipients of messages, which can become indicators in turn).

When selecting or creating an analytics model, the following questions are important:

- What outcome do we want to predict? Or what process do we want to detect? (Positive or negative)

- How will we detect that outcome/process?

- What clues do we think might help us predict that outcome/process?

- What should we do if the outcome/process is very likely? Very unlikely?

- Who should be notified? What kind of notification should be sent?

- What opportunities for action should be provided on notification?

Moodle can support multiple prediction models at once, even within the same course. This can be used for A/B testing to compare the performance and accuracy of multiple models.

Moodle core ships with three prediction models: Students at risk of dropping out, Upcoming events and No teaching. Additional prediction models can be created by using the Analytics API. Each model is based on the prediction of a single, specific "target," or outcome (whether desirable or undesirable), based on a number of selected indicators.

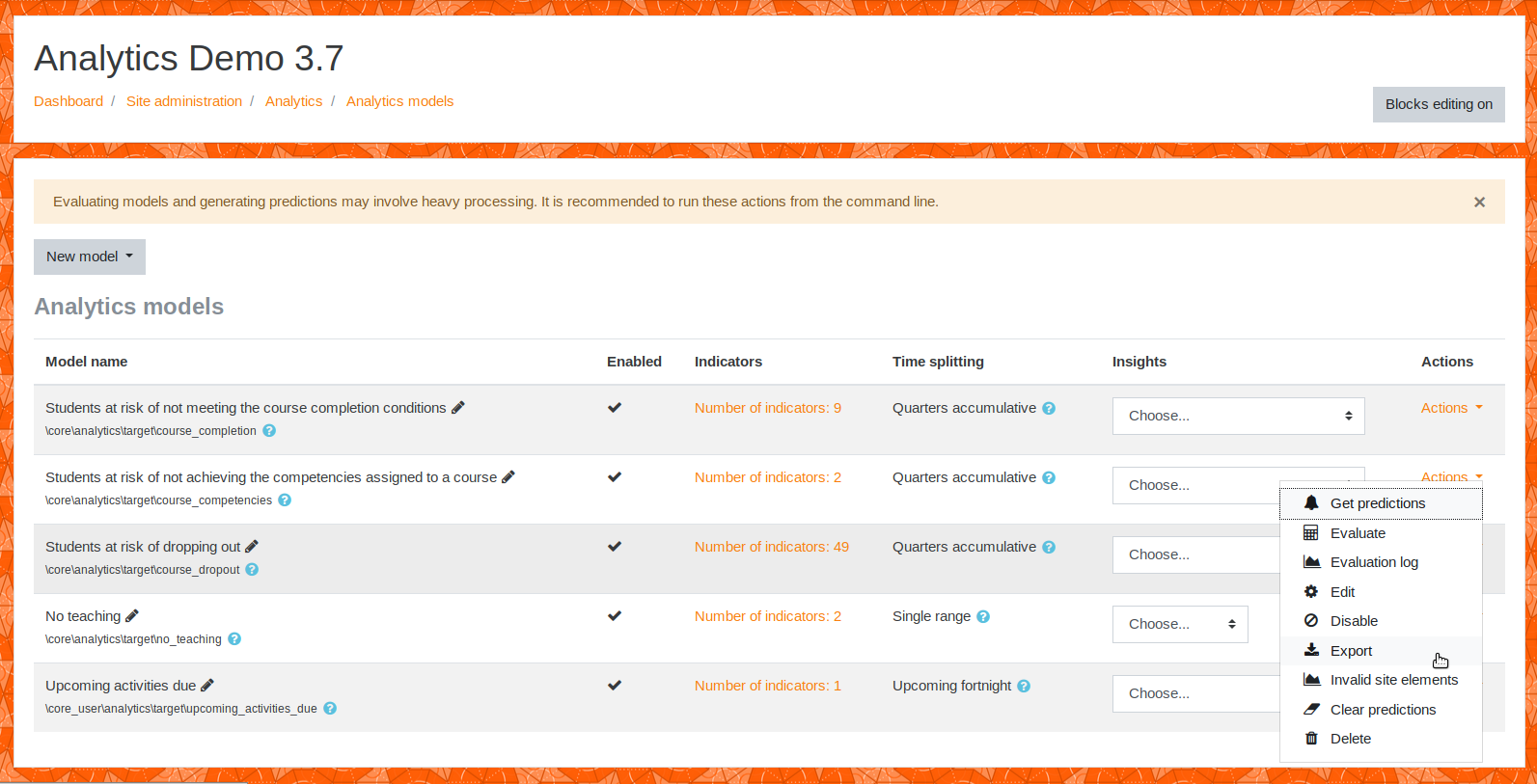

You can manage your system models from Site Administration > Analytics > Analytics models.

These are some of the actions you can perform on a model:

Get predictions

Train machine learning algorithms with the new data available on the system and get predictions for ongoing courses. Predictions are not limited to ongoing courses-- this depends on the model.

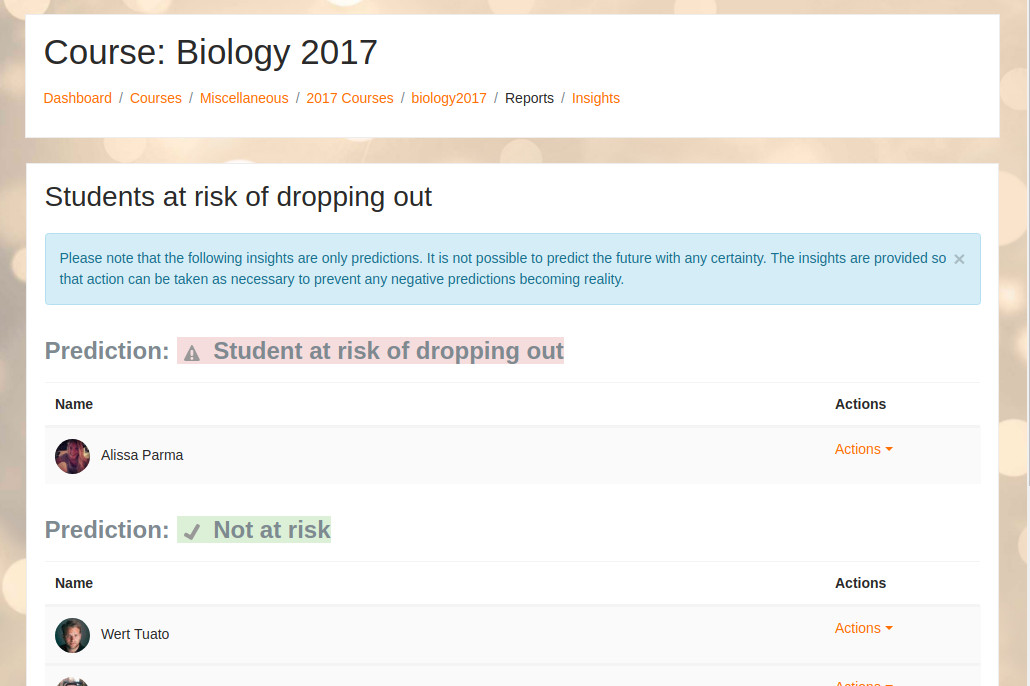

View Insights

Once you have trained a machine learning algorithm with the data available on the system, you will see insights (predictions) here for each "analysable." In the included model "Students at risk of dropping out," insights may be selected per course. Predictions are not limited to ongoing courses; this depends on the model.

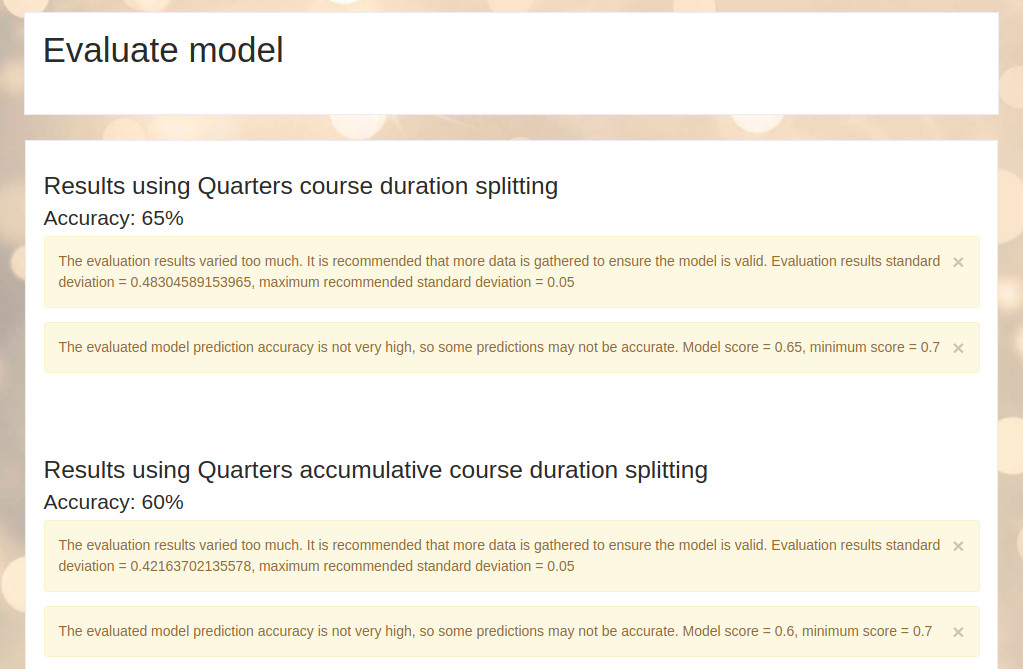

Evaluate

Evaluate the prediction model by collecting all the training data available on the site, calculating all the indicators and the target, and passing the resulting dataset to the machine learning backend. This process splits the dataset into training data and testing data and calculates the model's accuracy. Note that the evaluation process uses all information available on the site, even if it is very old. Because the site state changes over time, indicators are calculated most reliably immediately after training data becomes available, so the accuracy returned by the evaluation process may be lower than the real model accuracy. The metric used to describe accuracy is the Matthews correlation coefficient (a metric used in machine learning for evaluating binary classifications).
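As an illustration of the accuracy metric named above, the following minimal Python sketch computes the Matthews correlation coefficient from a list of actual outcomes and a list of predicted outcomes. It is not the code used by the Moodle machine learning backends; the function names and the sample data are assumptions made for this example.

```python
import math


def confusion_counts(actual, predicted):
    """Count true/false positives and negatives for binary labels (1 or 0)."""
    tp = sum(1 for a, p in zip(actual, predicted) if a == 1 and p == 1)
    tn = sum(1 for a, p in zip(actual, predicted) if a == 0 and p == 0)
    fp = sum(1 for a, p in zip(actual, predicted) if a == 0 and p == 1)
    fn = sum(1 for a, p in zip(actual, predicted) if a == 1 and p == 0)
    return tp, tn, fp, fn


def matthews_corrcoef(tp, tn, fp, fn):
    """Matthews correlation coefficient: +1 is perfect, 0 is chance, -1 is inverted."""
    denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    if denominator == 0:
        # Conventional value when a row or column of the confusion matrix is empty.
        return 0.0
    return (tp * tn - fp * fn) / denominator


# Hypothetical test split: 1 = dropped out, 0 = did not drop out.
actual = [1, 1, 0, 0]
predicted = [1, 0, 0, 0]
score = matthews_corrcoef(*confusion_counts(actual, predicted))
```

Unlike plain accuracy, this coefficient stays informative when one outcome is much more common than the other, which is typical for dropout data.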

You can force the model evaluation process to run from the command line:

$ php admin/tool/analytics/cli/evaluate_model.php

Log

View previous evaluation logs, including the model accuracy as well as other technical information generated by the machine learning backends, such as ROC curves, learning curve graphs, the TensorBoard log directory or the model's Matthews correlation coefficient. The information available will depend on the machine learning backend in use.

Edit

You can edit the models by modifying the list of indicators or the time-splitting method. All previous predictions will be deleted when a model is modified. Models based on assumptions (static models) cannot be edited.

Enable / Disable

The scheduled task that trains machine learning algorithms with the new data available on the system and gets predictions for ongoing courses skips disabled models. Previous predictions generated by disabled models are not available until the model is enabled again.

Export

Export your site training data to share it with your partner institutions or to use it on a new site. The Export action for models allows you to generate a CSV file containing model data about indicators and weights, without exposing any of your site-specific data. We will be asking for submissions of these model files to help evaluate the value of models on different kinds of sites. Please see the Learning Analytics community for more information.

Invalid site elements

Reports which elements in your site cannot be analysed by this model.

Clear predictions

Clears all the model predictions and training data.

Core models

Students at risk of dropping out

This model predicts students who are at risk of non-completion (dropping out) of a Moodle course, based on low student engagement. In this model, the definition of "dropping out" is "no student activity in the final quarter of the course." The prediction model uses the Community of Inquiry model of student engagement, consisting of three parts: cognitive presence, social presence, and teaching presence.

This prediction model is able to analyse and draw conclusions from a wide variety of courses, and apply those conclusions to make predictions about new courses. The model is not limited to making predictions about student success in exact duplicates of courses offered in the past. However, there are some limitations:

- This model requires a certain amount of in-Moodle data with which to make predictions. At the present time, only core Moodle activities are included in the indicator set (see below). Courses which do not include several core Moodle activities per “time slice” (depending on the time splitting method) will have poor predictive support in this model. This prediction model will be most effective with fully online or “hybrid” or “blended” courses with substantial online components.

- This prediction model assumes that courses have fixed start and end dates, and is not designed to be used with rolling enrollment courses. Models that support a wider range of course types will be included in future versions of Moodle. Because of this model design assumption, it is very important to properly set course start and end dates for each course to use this model. If the start and end dates of both past and ongoing courses are not properly set, predictions cannot be accurate. Because the course end date field was only introduced in Moodle 3.2 and some courses may not have set a course start date in the past, we include a command line interface script:

$ php admin/tool/analytics/cli/guess_course_start_and_end.php

This script attempts to estimate past course start and end dates by looking at student enrolments and students' activity logs. After running this script, please check that the estimated start and end dates are reasonably correct.
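The definition of "dropping out" used by this model (no student activity in the final quarter of the course) depends directly on the course start and end dates, which is why they must be set correctly. The following Python sketch shows the idea under simplifying assumptions: timestamps are plain numbers, and the function name and sample values are hypothetical rather than taken from Moodle.

```python
def dropped_out(activity_times, course_start, course_end):
    """Return True if no activity falls inside the final quarter of the course.

    All arguments are numeric timestamps (for example, Unix time in seconds).
    """
    final_quarter_start = course_start + 0.75 * (course_end - course_start)
    return not any(
        final_quarter_start <= t <= course_end for t in activity_times
    )


# Hypothetical course running from t=0 to t=100; the final quarter is 75..100.
early_only = dropped_out([10, 50], course_start=0, course_end=100)
active_late = dropped_out([10, 80], course_start=0, course_end=100)
```

With a wrong end date the final quarter would cover the wrong period, so the same activity log could be labelled differently.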

Upcoming events

The “upcoming events” model outputs to the user’s calendar page.

No teaching

This model's insights will inform site managers which courses with an upcoming start date will not have teaching activity. This is a simple model and it does not use a machine learning backend to return predictions. It bases the predictions on assumptions, e.g. there is no teaching if there are no students.

Creating and editing models

New models can be created by using the Analytics API, by importing an exported model from another site, or by using the new web UI in 3.7. There are four components of a model that can be defined through the web UI:

Target

Targets represent a “known good”-- something about which we have very strong evidence of value. Targets must be designed carefully to align with the curriculum priorities of the institution. Each model has a single target. The “Analyser” (context in which targets will be evaluated) is automatically controlled by the Target selection.

Indicators

Indicators are data points that may help to predict targets. We are free to add many indicators to a model to find out if they predict a target-- the only limit is that the data must be available within Moodle and must have a connection to the context of the model (e.g. the user, the course, etc.). The machine learning “training” process will determine how much weight to give to each indicator in the model.

We do want to make sure any indicators we include in a production model have a clear purpose and can be interpreted by participants, especially if they are used to make prescriptive or diagnostic decisions.

Indicators are constructed from data, but the data points need to be processed to make consistent, reusable indicators. In many cases, events are counted or combined in some way, though other ways of defining indicators are possible and will be discussed later. How the data points are processed involves important assumptions that affect the indicators. In particular, indicators can be absolute, meaning that the value of the indicator stays the same no matter what other samples are in the context, or relative, meaning that the indicator compares the sample to others in the context.
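The distinction between absolute and relative indicators can be sketched in a few lines of Python. This is only an illustration of the concept, assuming a hypothetical indicator built from a count of forum posts; the function names, the cap of 10 and the scaling to the range 0 to 1 are assumptions for this example, not the way Moodle's core indicators are calculated.

```python
def absolute_indicator(count, cap=10):
    """Scale a raw count against a fixed cap; other samples have no influence."""
    return min(count, cap) / cap


def relative_indicator(count, all_counts):
    """Fraction of samples in the same context whose count is <= this one."""
    return sum(1 for c in all_counts if c <= count) / len(all_counts)


# The same student (5 posts) in two hypothetical courses.
quiet_course = [1, 2, 3, 5]
busy_course = [1, 5, 9, 20]

same_everywhere = absolute_indicator(5)               # identical in both courses
top_of_quiet = relative_indicator(5, quiet_course)    # highest poster here
middle_of_busy = relative_indicator(5, busy_course)   # mid-range poster here
```

The absolute value is the same in both courses, while the relative value changes with the other samples in the context.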

Analysis intervals

Analysis intervals control how often the model will run to generate insights, and how much information will be included in each generation cycle. The two analysis intervals enabled by default for selection are “Quarters” and “Quarters accumulative.” Both options will cause models to execute four times-- after the first, second, third and fourth quarters of the course (the final execution is used to evaluate the accuracy of the predictions against the actual outcome). The difference lies in how much information will be included. “Quarters” will only include information from the most recent quarter of the course in its predictions. “Quarters accumulative” will include the most recent quarter and all previous quarters, and tends to generate more accurate predictions (though it can take more time and memory to execute). Moodle Learning Analytics also includes “Tenths” and “Tenths accumulative” options in Core, if you choose to enable them from the Analytics Settings panel. These generate predictions more frequently.

- "Single range" indicates that predictions will be made once, but will take into account a range of time, e.g. one prediction at the end of a course. The prediction is made at the end of the range.

- "No splitting" indicates that the model generates an insight based on a snapshot of data at a given moment, e.g. the "no teaching" model looks to see if there are currently any teachers or students assigned to a course at a defined point before the start of the term, and issues one insight warning the site administrator that no teaching is likely to occur in that empty course.

- "Accumulative" methods differ in how much data is included in the prediction. Both "Quarters" and "Quarters accumulative" predictions are made at the end of each quarter of a time span (e.g. a course), but in "Quarters," only the information from the most recent quarter is included in the prediction, whereas in "Quarters accumulative" all information up to the present is included in the prediction.
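The difference between "Quarters" and "Quarters accumulative" can be shown by listing the time range that each prediction would draw on. The Python sketch below is an illustration only: the function name and parameters are hypothetical, and it does not reproduce Moodle's own implementation of analysis intervals.

```python
from datetime import datetime, timedelta


def split_ranges(start, end, parts=4, accumulative=False):
    """Return one (range_start, range_end) pair per prediction point.

    Non-accumulative ranges cover only the most recent segment;
    accumulative ranges always begin at the start of the time span.
    """
    step = (end - start) / parts
    ranges = []
    for i in range(1, parts + 1):
        range_end = start + step * i
        range_start = start if accumulative else start + step * (i - 1)
        ranges.append((range_start, range_end))
    return ranges


# Hypothetical 40-day course, so each quarter lasts 10 days.
course_start = datetime(2019, 1, 1)
course_end = course_start + timedelta(days=40)

quarters = split_ranges(course_start, course_end)
quarters_accumulative = split_ranges(course_start, course_end, accumulative=True)
```

Passing parts=10 would give the equivalent of the "Tenths" options: more frequent predictions, each based on a shorter segment unless the accumulative form is used.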

Single range and no splitting methods do not have time constraints. They run during the next scheduled task execution, although models apply different restrictions (e.g. requiring that a course is finished before using it for training, or that a course contains some data and students before using it to get predictions). 'Single range' and 'No splitting' are not appropriate for students at risk of dropping out of courses. They are intended for models like 'No teaching' or 'Spammer user', where a single prediction is all you need. For example, the 'No teaching' model uses the 'Single range' analysis interval; its target class (the main PHP class of a model) only accepts courses that will start during the next week. Once a 'No teaching' insight has been provided for a course, no further 'No teaching' insights are provided for that course.

The difference between 'Single range' and 'No splitting' is that models analysed using 'Single range' will be limited to the start and end dates of the analysable elements (the course, in the students at risk model), while 'No splitting' does not have any time constraints and uses all data available in the system to calculate the indicators.

Note: Although the examples above refer to courses, analysis intervals can be used on any analysable element. For example, enrolments can have start and end dates, so an analysis interval could be applied to generate predictions about aspects of an enrollment. For analysable elements with no start and end dates, different analysis intervals would be needed. For example, a "weekly" analysis interval could be applied to a model intended to predict whether a user is likely to log in to the system in the future, on the basis of activity in the previous week.

Predictions processor

This setting controls which machine learning backend and algorithm will be used to estimate the model. Moodle currently supports two predictions processors:

- PHP machine learning backend - implements logistic regression using php-ml (contributed by Moodle)

- Python machine learning backend - implements single hidden layer feed-forward neural network using TensorFlow.

You can only choose from the predictions processors enabled on your site.
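To give a feel for what a logistic regression backend does with indicator values, here is a minimal, self-contained Python sketch trained by gradient descent. It is not the php-ml implementation used by the PHP backend; the function names, the learning rate, the number of epochs and the single hypothetical engagement indicator are all assumptions made for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(samples, labels, lr=0.5, epochs=2000):
    """Fit weights and a bias by batch gradient descent on the log loss."""
    n_features = len(samples[0])
    weights = [0.0] * n_features
    bias = 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(samples, labels):
            z = sum(w * xi for w, xi in zip(weights, x)) + bias
            error = sigmoid(z) - y
            for j, xi in enumerate(x):
                grad_w[j] += error * xi
            grad_b += error
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def predict(weights, bias, x):
    """Return 1 if the estimated probability is at least 0.5, else 0."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if sigmoid(z) >= 0.5 else 0


# Hypothetical data: one engagement indicator per student, scaled 0..1.
# Label 1 = completed the course, 0 = dropped out.
samples = [[0.0], [0.1], [0.2], [0.8], [0.9], [1.0]]
labels = [0, 0, 0, 1, 1, 1]
weights, bias = train_logistic(samples, labels)
```

The learned weights play the role described for indicators elsewhere on this page: training determines how much each indicator contributes to the prediction.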

Managing models

Predictions and Insights

Models will start generating predictions at different points in time, depending on the prediction models enabled on the site and the start and end dates of the site's courses.

Each model defines which predictions will generate insights and which predictions will be ignored. For example, the Students at risk of dropping out prediction model does not generate an insight if a student is predicted as "not at risk," since the primary interest is which students are at risk of dropping out of courses, not which students are not at risk.
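The rule that only some predictions become insights amounts to a simple filter. The Python sketch below illustrates it with hypothetical data structures; the dictionary keys, class labels and function name are assumptions for this example and do not correspond to the Analytics API.

```python
def predictions_to_insights(predictions, ignored_classes=("not at risk",)):
    """Keep only the predictions whose class should be shown as an insight."""
    return [p for p in predictions if p["prediction"] not in ignored_classes]


# Hypothetical output of a "Students at risk of dropping out" run.
predictions = [
    {"student": "A", "prediction": "at risk"},
    {"student": "B", "prediction": "not at risk"},
    {"student": "C", "prediction": "at risk"},
]

insights = predictions_to_insights(predictions)
```

Here only students A and C would produce insights; the prediction for student B is calculated but not shown.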

Users can specify how they wish to receive insights notifications, or turn them off, via their User menu > Preferences > Notification preferences.

Actions

Each insight can have one or more actions defined. Actions provide a way to act on the insight as it is read. These actions may include a way to send a message to another user, a link to a report providing information about the sample the prediction has been generated for (e.g. a report for an existing student), or a way to view the details of the model prediction.

Insights can also offer two important general actions that are applicable to all insights. First, the user can acknowledge the insight. This removes that particular prediction from the view of the user, e.g. a notification about a particular student at risk is removed from the display.

The second general action is to mark the insight as "Not useful." This also removes the insight associated with this calculation from the display, but the model is adjusted to make this prediction less likely in the future.

Monitoring model quality

Check the log reports regularly to review the accuracy of your models.

Capabilities

There are two analytics capabilities:

- Manage models - allowed for the default role of manager only

- List insights - allowed for the default roles of manager, teacher and non-editing teacher