Analytics: Difference between revisions

(→Model management: broke out model actions so we can have direct links) |

Mary Cooch (talk | contribs) (added video) |

Video: Analytics overview (https://youtu.be/MS1IqKsrXAI)

Revision as of 09:37, 15 November 2017

Overview

Beginning in version 3.4, Moodle core now implements open source, transparent next-generation learning analytics using machine learning backends that go beyond simple descriptive analytics to provide predictions of learner success, and ultimately diagnosis and prescriptions (advisements) to learners and teachers.

In Moodle 3.4, this system ships with two built-in models:

- Students at risk of dropping out

- No teaching

The system can be easily extended with new custom models, based on reusable targets, indicators, and other components. For more information, see the Analytics API developer documentation.

Features

- Two built-in prediction models: "Students at risk of dropping out" and "No teaching".

- A set of student engagement indicators based on the Community of Inquiry.

- Built-in tools to evaluate models against your site's data

- Proactive notifications for instructors using Events

- Instructors can easily send messages to students identified by the model, or jump to the Outline report for that student for more detail about student activity

- An API to build indicators and prediction models for third-party Moodle plugins

- Machine learning backend plugin type - supports PHP and Python, and can be extended to implement other ML backends

Note: PHP 7.x is required.

Limitations

This release of Moodle Learning Analytics has the following limitations:

- Models included in this release must be "trained" on a site with previous completed courses, ideally using the Moodle course completion feature. The current models cannot make predictions on a site until this is done.

- The prediction model included with this version requires that courses have fixed start and end dates, and is not designed to be used with rolling enrollment courses. Models that support a wider range of course types will be included in future versions of Moodle.

- Models and predictions are only visible to teachers and administrators at present.

We are continuing to enhance Moodle Learning Analytics, and expanded capabilities will be released going forward. To help contribute to our progress, please join the conversation at the Moodle Learning Analytics Community. In particular, we still need data sets from a wide variety of Moodle-using institutions in order to be able to ship a working prediction model that does not depend on local site data before it can be used.

Settings

You can access Analytics settings from Site administration > Analytics > Analytics settings.

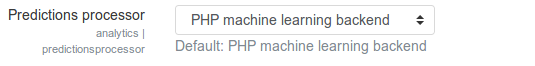

Predictions processor

Prediction processors are the machine learning backends that process the datasets generated from the calculated indicators and targets and return predictions. Moodle core includes two prediction processors:

- The PHP processor is the default. There are no other system requirements to use this processor.

- The Python processor is more powerful and generates graphs that explain the model performance. It requires extra tools: Python itself (https://wiki.python.org/moin/BeginnersGuide/Download) and the moodlemlbackend Python package, which can be installed with pip:

pip install moodlemlbackend
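After installing, it can help to confirm that the package is visible to the Python interpreter the web server will use. The following is a minimal sketch, not part of Moodle; it assumes the package installs an importable module named `moodlemlbackend`, and the helper name `module_available` is made up for this example.

```python
import importlib.util


def module_available(name):
    """Return True if a top-level module with this name can be found."""
    return importlib.util.find_spec(name) is not None


# Assumption: the pip package exposes a module called "moodlemlbackend".
if module_available("moodlemlbackend"):
    print("moodlemlbackend found")
else:
    print("moodlemlbackend not found; try: pip install moodlemlbackend")
```

Run the check with the same Python executable and user account that the web server uses, since packages installed for one interpreter or user are not necessarily visible to another.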

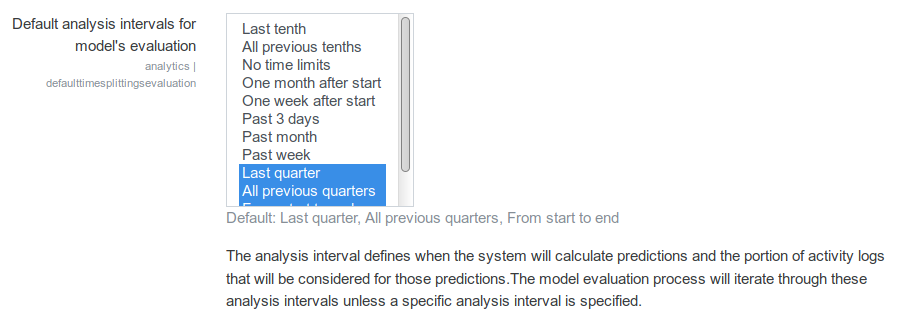

Time splitting methods

Time splitting methods allow insights generated from one course to be used on another course, even if the two courses are not exactly the same length.

Each time splitting method divides the course duration into segments. At the end of each defined segment, the predictions engine will run and generate insights. It is recommended that you only enable the time splitting methods you are interested in using. The evaluation process iterates through all enabled time splitting methods, so the more methods you enable, the slower the evaluation process will be.
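To illustrate how a time splitting method divides a course, here is a minimal sketch that computes the end of each equal-length segment between a course start and end date. It is not Moodle's implementation; the function name `split_course` and the example dates are assumptions made for this example.

```python
from datetime import datetime


def split_course(start, end, segments):
    """Return the end time of each equal-length segment between start and end."""
    if segments < 1:
        raise ValueError("segments must be at least 1")
    if end <= start:
        raise ValueError("end must be after start")
    step = (end - start) / segments
    # The last boundary is set to the course end itself to avoid rounding drift.
    return [start + step * i for i in range(1, segments)] + [end]


# Hypothetical course dates, split into quarters.
course_start = datetime(2017, 9, 4)
course_end = datetime(2017, 12, 11)
for boundary in split_course(course_start, course_end, 4):
    print(boundary)
```

Because the boundaries are expressed as fractions of the course duration, the same method can be applied to courses of different lengths, which is what lets insights from one course be used on another.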

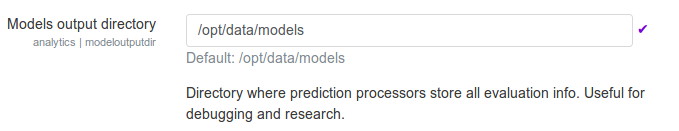

Models output directory

This setting allows you to define a directory where machine learning backend data is stored. Be sure this directory exists and is writable by the web server.
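A quick way to verify both conditions is sketched below. This is an illustrative check, not a Moodle tool; the function name `check_output_dir` and the example path are hypothetical. It only tests permissions for the user running the script, so it should be run as the web server user to be meaningful.

```python
import os


def check_output_dir(path):
    """Return a list of problems found with the directory (empty if none)."""
    problems = []
    if not os.path.isdir(path):
        problems.append("not an existing directory")
    elif not os.access(path, os.W_OK | os.X_OK):
        # Checked for the current user only, not for the web server user.
        problems.append("not writable by the current user")
    return problems


# Hypothetical path; replace it with the directory configured on your site.
print(check_output_dir("/var/moodledata/models"))
```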

Model management

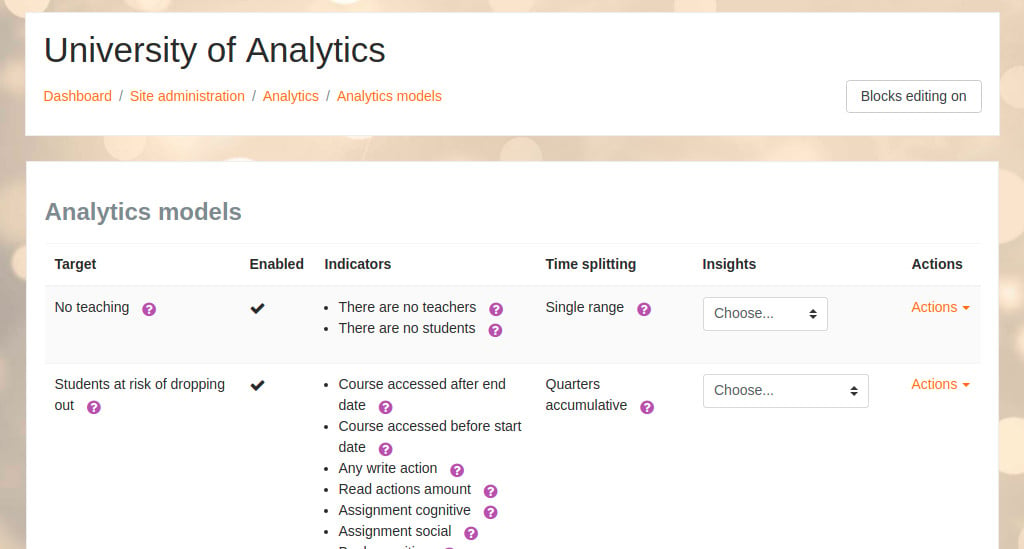

Moodle can support multiple prediction models at once, even within the same course. This can be used for A/B testing to compare the performance and accuracy of multiple models.

Moodle core ships with two prediction models, Students at risk of dropping out and No teaching. Additional prediction models can be created by using the Analytics API. Each model is based on the prediction of a single, specific "target," or outcome (whether desirable or undesirable), based on a number of selected indicators.

You can manage your system models from Site Administration > Analytics > Analytics models.

These are some of the actions you can perform on a model:

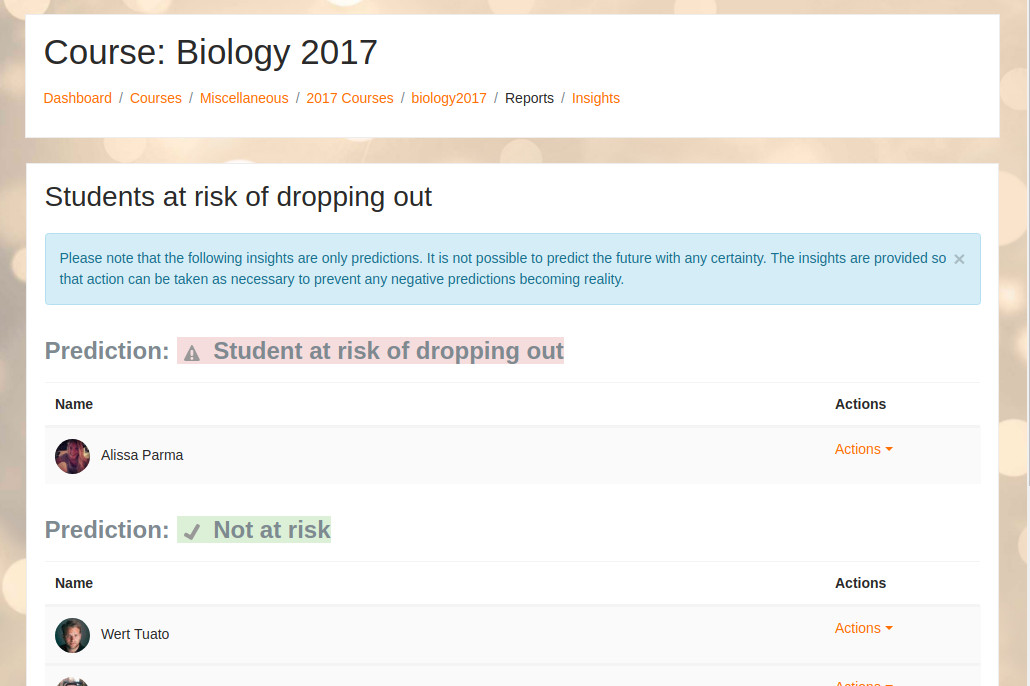

View Insights

Once you have trained a machine learning algorithm with the data available on the system, you will see insights (predictions) here for each "analysable." In the included model "Students at risk of dropping out," insights may be selected per course. Predictions are not limited to ongoing courses; this depends on the model.

Evaluate

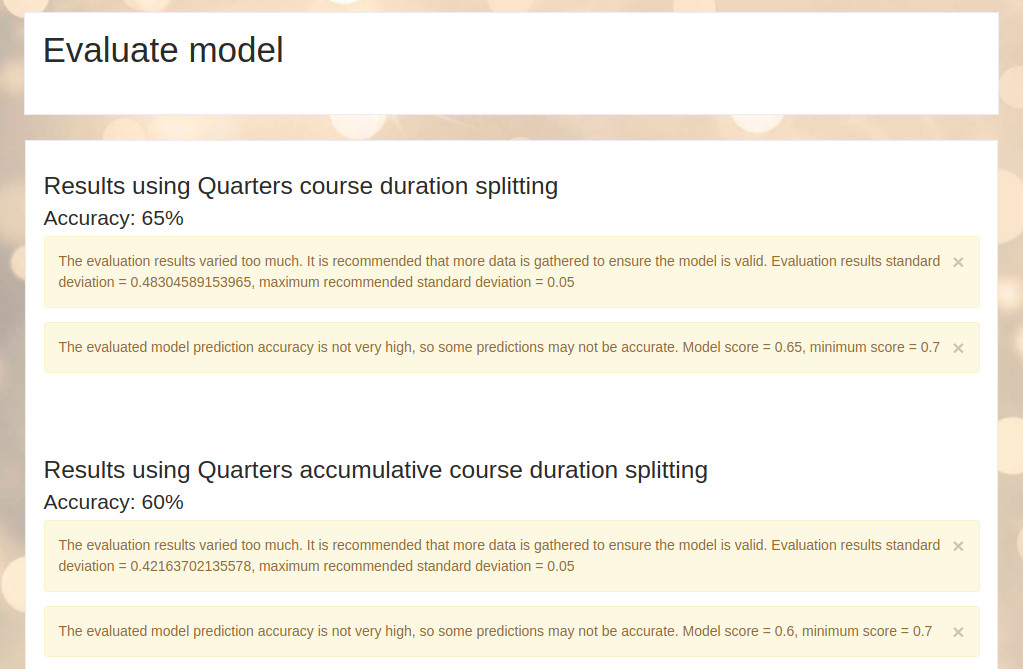

(Disabled by default.) Evaluate the prediction model by getting all the training data available on the site, calculating all the indicators and the target, and passing the resulting dataset to the machine learning backends. This process splits the dataset into training data and testing data and calculates the model's accuracy. Note that the evaluation process uses all information available on the site, even if it is very old. Because the site state changes over time, indicators are calculated more reliably soon after the training data becomes available, so the accuracy returned by the evaluation process may be lower than the real model accuracy. The metric used to describe accuracy is the Matthews correlation coefficient (a metric used in machine learning for evaluating binary classifications).
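For readers unfamiliar with the metric, the following minimal sketch computes the Matthews correlation coefficient from the four counts of a binary confusion matrix. It is a generic illustration of the standard formula, not the code used by Moodle's backends; the example counts are invented.

```python
import math


def matthews_corrcoef(tp, tn, fp, fn):
    """Matthews correlation coefficient for a binary confusion matrix.

    MCC = (tp*tn - fp*fn) / sqrt((tp+fp)(tp+fn)(tn+fp)(tn+fn))
    """
    denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    if denominator == 0:
        # By convention, return 0 when any row or column of the matrix is empty.
        return 0.0
    return (tp * tn - fp * fn) / denominator


# Invented counts: 40 true positives, 45 true negatives,
# 5 false positives, 10 false negatives.
print(round(matthews_corrcoef(40, 45, 5, 10), 3))
```

The coefficient ranges from -1 (every prediction wrong) through 0 (no better than chance) to 1 (every prediction correct), and it remains informative when one class is much rarer than the other.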

You can force the model evaluation process to run from the command line:

$ php admin/tool/analytics/cli/evaluate_model.php

Log

View previous evaluation logs, including the model accuracy as well as other technical information generated by the machine learning backends, such as ROC curves, learning curve graphs, the TensorBoard log directory, or the model's Matthews correlation coefficient. The information available will depend on the machine learning backend in use.

Edit

You can edit a model by modifying its list of indicators or its time splitting method. All previous predictions will be deleted when a model is modified. Models based on assumptions (static models) cannot be edited.

Enable / Disable

A scheduled task trains the machine learning algorithms with the new data available on the system and gets predictions for ongoing courses. This task skips disabled models. Predictions previously generated by a disabled model are not available until the model is enabled again.

Export

Export your site training data to share it with your partner institutions or to use it on a new site. The Export action for models allows you to generate a CSV file containing model data about indicators and weights, without exposing any of your site-specific data. We will be asking for submissions of these model files to help evaluate the value of models on different kinds of sites. Please see the Learning Analytics community for more information.

Invalid site elements

Reports which elements in your site cannot be analysed by this model.

Clear predictions

Clears all of the model's predictions and training data.

Core models

Students at risk of dropping out

This model predicts students who are at risk of non-completion (dropping out) of a Moodle course, based on low student engagement. In this model, the definition of "dropping out" is "no student activity in the final quarter of the course." The prediction model uses the Community of Inquiry model of student engagement, which consists of three parts: teaching presence, social presence, and cognitive presence.

This prediction model is able to analyse and draw conclusions from a wide variety of courses, and apply those conclusions to make predictions about new courses. The model is not limited to making predictions about student success in exact duplicates of courses offered in the past. However, there are some limitations:

- This model requires a certain amount of in-Moodle data with which to make predictions. At the present time, only core Moodle activities are included in the indicator set (see below). Courses which do not include several core Moodle activities per “time slice” (depending on the time splitting method) will have poor predictive support in this model. This prediction model will be most effective with fully online or “hybrid” or “blended” courses with substantial online components.

- This prediction model assumes that courses have fixed start and end dates, and is not designed to be used with rolling enrollment courses. Models that support a wider range of course types will be included in future versions of Moodle. Because of this design assumption, it is very important to set the start and end dates properly for each course that uses this model. If the start and end dates of both past and ongoing courses are not set properly, predictions cannot be accurate. Because the course end date field was only introduced in Moodle 3.2, and some courses may not have had a start date set in the past, we include a command line interface script:

$ php admin/tool/analytics/cli/guess_course_start_and_end.php

This script attempts to estimate past course start and end dates by looking at student enrolments and students' activity logs. After running this script, please check that the estimated start and end dates are reasonably correct.
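The dependence on correct dates follows from the definition of "dropping out" given earlier in this section: no student activity in the final quarter of the course. The sketch below makes that definition concrete. It is an illustration only, not Moodle's target implementation; the function name `dropped_out` and the sample timestamps are assumptions.

```python
from datetime import datetime


def dropped_out(course_start, course_end, activity_times):
    """Return True if no activity falls in the final quarter of the course."""
    final_quarter_start = course_start + (course_end - course_start) * 3 / 4
    return not any(
        final_quarter_start <= t <= course_end for t in activity_times
    )


# Hypothetical 100-day course; its final quarter starts on day 75.
start = datetime(2017, 1, 1)
end = datetime(2017, 4, 11)
early_only = [datetime(2017, 1, 10), datetime(2017, 2, 20)]
active_late = early_only + [datetime(2017, 4, 1)]
print(dropped_out(start, end, early_only))
print(dropped_out(start, end, active_late))
```

If a course's end date is wrong, the final quarter covers the wrong period, so students can be labelled incorrectly in the training data.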

No teaching

This model's insights inform site managers which courses with an upcoming start date are unlikely to have any teaching activity. This is a simple model and it does not use a machine learning backend to return predictions. It bases its predictions on assumptions, e.g. there is no teaching if there are no students.
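A static model of this kind amounts to a fixed rule rather than a trained classifier. The sketch below shows the idea; it is not Moodle's code. The "no students" rule comes from the text above, while the "no teachers" rule and the function name `no_teaching_expected` are illustrative assumptions.

```python
def no_teaching_expected(student_count, teacher_count):
    """Return True if a course is assumed to have no teaching activity."""
    # Rule from the documentation: no students means no teaching.
    if student_count == 0:
        return True
    # Illustrative assumption, not stated in the text: no teachers either.
    return teacher_count == 0


print(no_teaching_expected(student_count=0, teacher_count=2))
print(no_teaching_expected(student_count=25, teacher_count=1))
```

Because the rule is fixed, there is nothing to train or evaluate, which is why static models cannot be edited in the same way as machine learning models.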

Predictions and Insights

Models will start generating predictions at different points in time, depending on the site's prediction models and the start and end dates of the site's courses.

Each model defines which predictions will generate insights and which predictions will be ignored. For example, the Students at risk of dropping out prediction model does not generate an insight if a student is predicted as "not at risk," since the primary interest is which students are at risk of dropping out of courses, not which students are not at risk.
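The filtering step can be pictured as follows. This is a minimal sketch under assumed data shapes, not Moodle's API: the dictionary keys, the outcome labels, and the function name `insights_from` are all hypothetical.

```python
def insights_from(predictions, reported_outcomes):
    """Keep only the predictions whose outcome the model wants to report."""
    return [p for p in predictions if p["outcome"] in reported_outcomes]


# Hypothetical predictions from a dropout model.
predictions = [
    {"student": "A", "outcome": "at risk"},
    {"student": "B", "outcome": "not at risk"},
    {"student": "C", "outcome": "at risk"},
]
for insight in insights_from(predictions, {"at risk"}):
    print(insight["student"])
```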

Actions

Each insight can have one or more actions defined. Actions provide a way to act on the insight as it is read. These actions may include a way to send a message to another user, a link to a report providing information about the sample the prediction has been generated for (e.g. a report for an existing student), or a way to view the details of the model prediction.

Insights can also offer two important general actions that are applicable to all insights. First, the user can acknowledge the insight. This removes that particular prediction from the view of the user, e.g. a notification about a particular student at risk is removed from the display.

The second general action is to mark the insight as "Not useful." This also removes the insight associated with this calculation from the display, but the model is adjusted to make this prediction less likely in the future.