Analytics

More information will be added soon. This page describes the Analytics models tool, which is used to visualise, manage and evaluate prediction models.

Settings

You can access Analytics settings from Site administration > Analytics > Analytics settings.

Predictions processor

Prediction processors are the machine learning backends that process the datasets generated from the calculated indicators and targets and return predictions. This plugin ships with two prediction processors:

- The PHP processor is the default.

- The Python processor is more powerful and generates graphs of the model performance, but it requires extra setup: Python itself (https://wiki.python.org/moin/BeginnersGuide/Download) and the moodlemlbackend package, which can be installed with pip:

pip install moodlemlbackend
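After installing, it can be useful to confirm that the Python interpreter used by the site can actually find the package. The following is a minimal sketch, not part of the plugin; the helper name `is_module_available` is hypothetical, and it assumes the import name matches the pip package name.

```python
import importlib.util


def is_module_available(module_name: str) -> bool:
    """Return True if this interpreter can locate the named top-level module."""
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    # Assumption: the package is imported under the same name it is installed with.
    name = "moodlemlbackend"
    status = "available" if is_module_available(name) else "not found"
    print(f"{name}: {status}")
```

Run the script with the same Python executable that the web server is configured to use, since a package installed for one interpreter is not visible to another.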

Time splitting methods

A time splitting method divides the course duration into parts; the predictions engine runs at the end of each part. It is recommended that you enable only the time splitting methods you are interested in using, because the site contents analyser calculates all indicators using each enabled time splitting method. The more time splitting methods are enabled, the slower the evaluation process will be.
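To make the idea concrete, the sketch below divides a course into equal parts and lists the moments at which predictions would run. It is only an illustration under simple assumptions (equal-length parts, a hypothetical `split_course` helper); the actual time splitting methods are implemented inside the analytics code and may differ.

```python
from datetime import datetime, timedelta


def split_course(start: datetime, end: datetime, parts: int) -> list[datetime]:
    """Return the end time of each of `parts` equal slices of the course."""
    if parts < 1:
        raise ValueError("parts must be at least 1")
    if end <= start:
        raise ValueError("end must be after start")
    step = (end - start) / parts
    # The final boundary is set to `end` explicitly to avoid rounding drift.
    return [start + step * i for i in range(1, parts)] + [end]


if __name__ == "__main__":
    course_start = datetime(2024, 1, 1)
    course_end = course_start + timedelta(days=100)
    # Splitting into quarters gives four points at which predictions would run.
    for boundary in split_course(course_start, course_end, 4):
        print(boundary.date())
```

Every enabled method produces its own set of boundaries, and indicators are calculated for each of them, which is why enabling many methods slows evaluation down.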

Models management

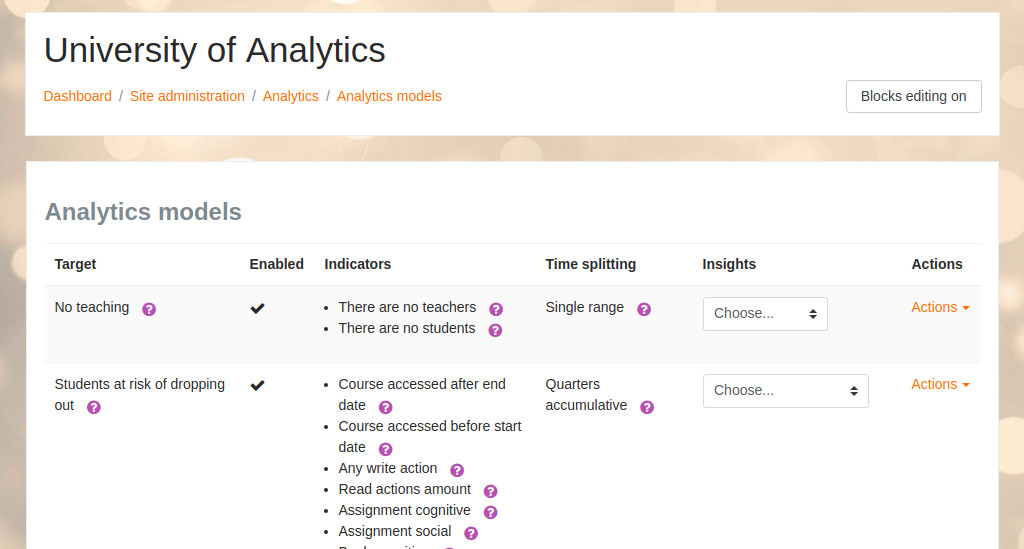

You can access the tool from Site administration > Analytics > Analytics models to see the list of prediction models.

These are some of the actions you can perform on a model:

- Edit: You can edit a model by modifying its list of indicators or its time splitting method. All previous predictions are deleted when a model is modified. Models based on assumptions (static models) cannot be edited.

- Enable / Disable: The scheduled task that trains machine learning algorithms with the new data available on the system and gets predictions for ongoing courses skips disabled models. Previous predictions generated by disabled models are not available until the model is enabled again.

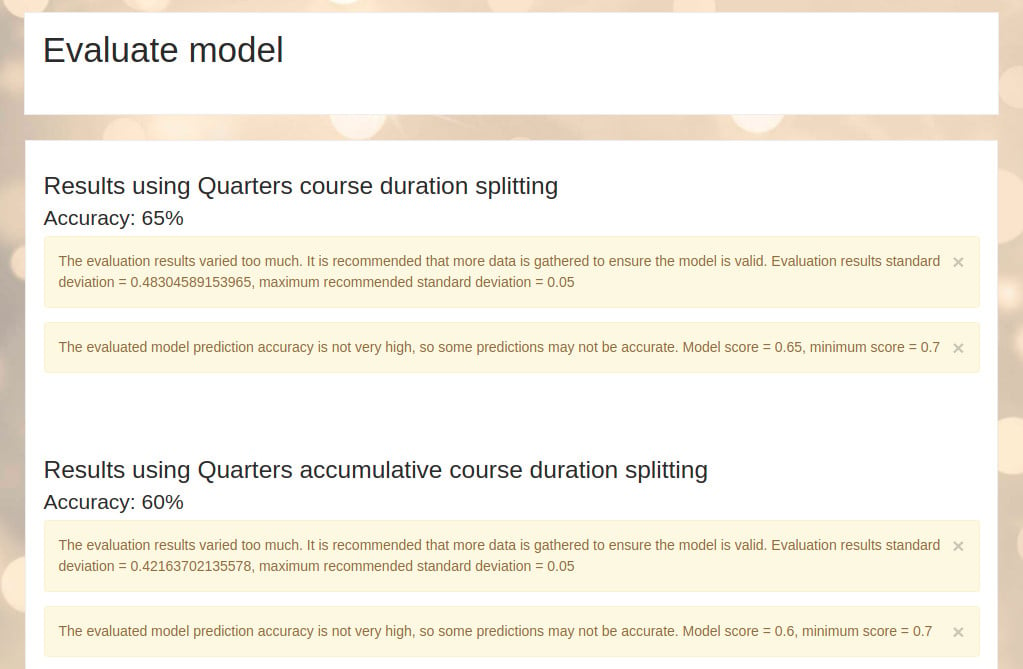

- Evaluate: Evaluate the prediction model by getting all the training data available on the site, calculating all the indicators and the target, and passing the resulting dataset to the machine learning backends. The backends split the dataset into training data and testing data and calculate the model's accuracy. Note that the evaluation process uses all the information available on the site, even if it is very old. The accuracy returned by the evaluation process will therefore generally be lower than the real model accuracy, because the site state changes over time and indicators are calculated more reliably straight after the training data becomes available. The metric used as accuracy is the Matthews correlation coefficient, a good metric for binary classification.

- Log: View the log of previous evaluations, including the model accuracy and other technical information generated by the machine learning backends, such as ROC curves, learning curve graphs, the TensorBoard log directory or the model's Matthews correlation coefficient. The information available depends on the machine learning backend in use.

- Get predictions: Train machine learning algorithms with the new data available on the system and get predictions for ongoing courses. Depending on the model, predictions may not be limited to ongoing courses.

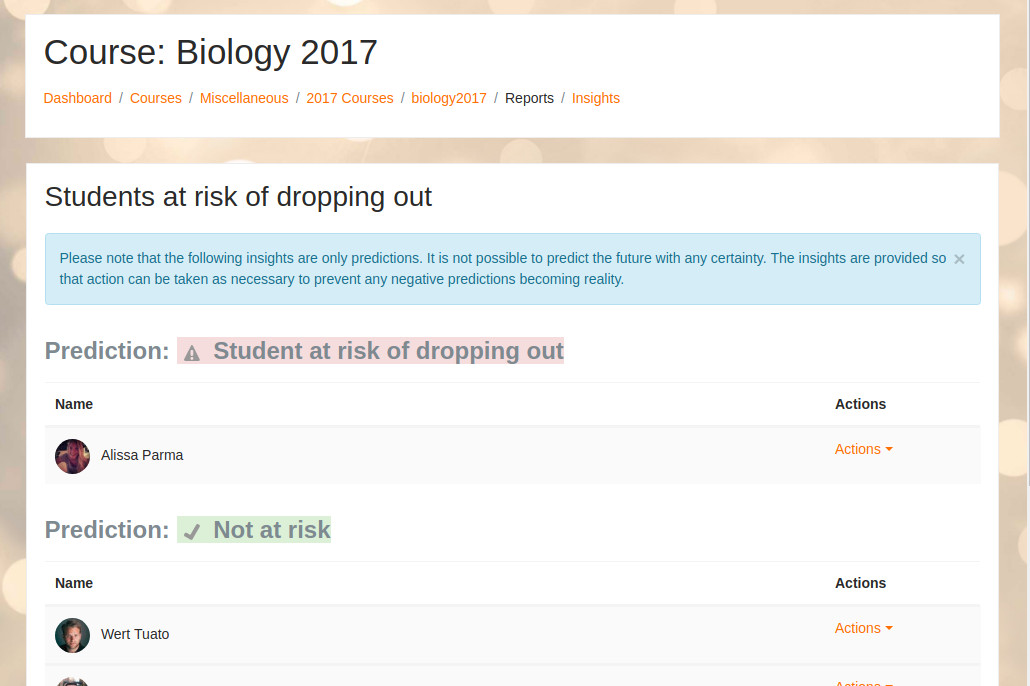
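The accuracy metric mentioned under Evaluate can be computed from the four cells of a binary confusion matrix. The sketch below uses the standard definition of the Matthews correlation coefficient; it is an illustration only and does not show how the PHP or Python backends calculate it internally. The function name and the sample counts are made up for the example.

```python
import math


def matthews_corrcoef(tp: int, tn: int, fp: int, fn: int) -> float:
    """Matthews correlation coefficient from binary confusion matrix counts.

    Ranges from -1 (total disagreement) through 0 (no better than chance)
    to +1 (perfect prediction).
    """
    numerator = tp * tn - fp * fn
    denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # By convention, return 0.0 when any marginal total is zero.
    return numerator / denominator if denominator else 0.0


if __name__ == "__main__":
    # Hypothetical test split: 6 true positives, 3 true negatives,
    # 1 false positive and 2 false negatives.
    print(matthews_corrcoef(tp=6, tn=3, fp=1, fn=2))
```

Unlike plain accuracy, this coefficient stays informative when one class is much rarer than the other, which is common when only a small share of students are at risk.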

Predictions and Insights

Models will start generating predictions at different points in time, depending on the site's prediction models and the start and end dates of the site's courses.

Each model defines which predictions generate insights and which are ignored. Take the Students at risk of dropping out prediction model as an example: if a student is predicted as not at risk, no insight is generated, because what matters is knowing which students are at risk of dropping out of courses, not which students are not.
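The filtering described above can be pictured with a small sketch. The `Prediction` class and `predictions_to_insights` function are hypothetical names used only for illustration; they are not part of the analytics API.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    student: str
    at_risk: bool  # True if the model predicts the student may drop out


def predictions_to_insights(predictions: list[Prediction]) -> list[Prediction]:
    """Keep only the predictions worth surfacing: students predicted as at risk."""
    return [p for p in predictions if p.at_risk]


if __name__ == "__main__":
    predictions = [
        Prediction("alice", at_risk=True),
        Prediction("bob", at_risk=False),
    ]
    # Only the at-risk prediction becomes an insight; the other is ignored.
    for insight in predictions_to_insights(predictions):
        print(insight.student)
```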