Pattern-match question type detailed documentation

See the most up-to-date English language documentation at the O.U. site

Overview

The Pattern-match question type is used to test if a short free-text student response matches a specified response pattern.

Pattern match is a more sophisticated alternative to the Short-Answer question type and offers:

- the ability to cater for misspellings, with and without an English dictionary

- specification of synonyms and alternative phrases

- flexible word order

- checks on the proximity of words.

For certain types of response it has been shown to provide an accuracy of marking that is on a par with, or better than, that provided by a cohort of human markers.

Pattern match works on the basis that you have a student response which you wish to match against any number of response matching patterns. Each pattern is compared in turn until a match is found and feedback and marks are assigned.

The key to using Pattern match is in asking questions that you have a reasonable hope of marking accurately. Hence writing the question stem is the most important part of writing these questions.

Components of the question

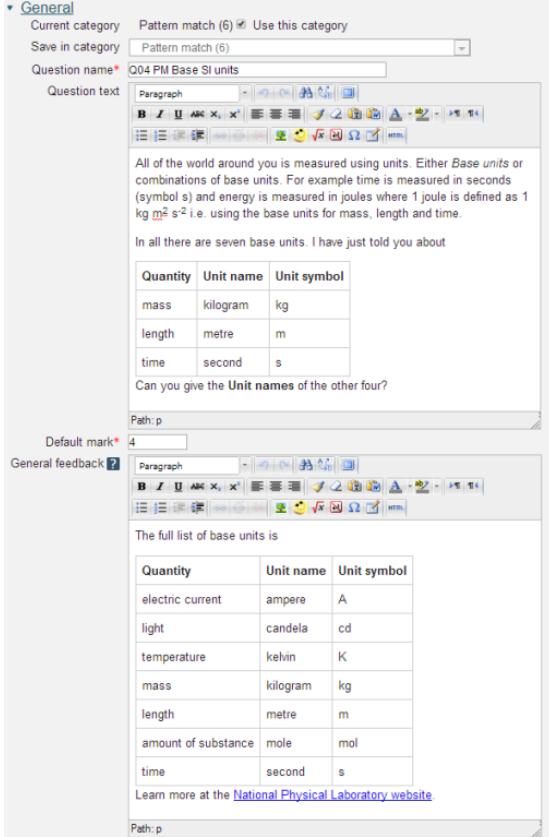

Question name: A descriptive name is sensible. This name will not be shown to students.

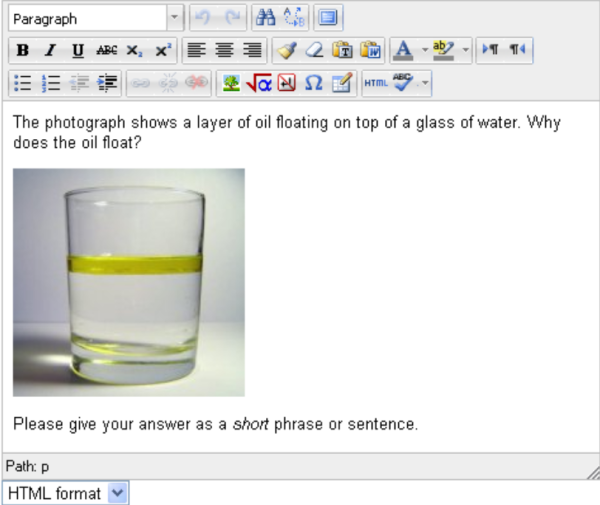

Question text: You may use the full functionality of the editor to state the question.

Placing the response box

The response box may be placed within the question rubric by including a sequence of underscores e.g. _____. At runtime the response box will replace the underscores. The minimum number of underscores required to trigger this action is 5. The size of the input box may also be specified by __XxY__ e.g. __20x1__ will produce a box 20 columns wide and 1 row high. If no underscores are present the response box will be placed after the question.
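The placement rules above can be sketched in a few lines. This is only an illustration of the rules as described, not the plugin's own code; the function name find_response_box and the two regular expressions are assumptions made for the example.

```python
import re

# Hypothetical patterns written for this illustration only.
SIZED_BOX = re.compile(r"__(\d+)x(\d+)__")   # e.g. __20x1__
PLAIN_BOX = re.compile(r"_{5,}")             # five or more underscores


def find_response_box(question_text):
    """Return (start, end, cols, rows) for the placeholder, or None.

    cols and rows are None for a plain run of underscores. None is
    returned when no placeholder is present, in which case the box
    would go after the question.
    """
    sized = SIZED_BOX.search(question_text)
    if sized:
        return sized.start(), sized.end(), int(sized.group(1)), int(sized.group(2))
    plain = PLAIN_BOX.search(question_text)
    if plain:
        return plain.start(), plain.end(), None, None
    return None
```

With this sketch, a question containing __20x1__ yields a size of 20 columns by 1 row, while a run of only four underscores is not treated as a placeholder.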

Default mark: Decide on how to score your questions and be consistent.

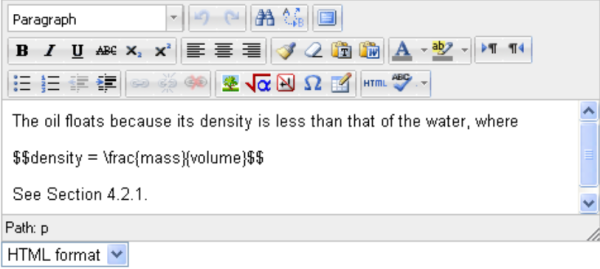

General feedback: We recommend that all questions should have this box completed with the correct answer and a fully worked explanation. The contents of this box will be shown to all students irrespective of whether their response was correct or incorrect. For the Pattern match question type you cannot rely on using the machine generated 'Right answer' (from the iCMA definition form).

As usual, the General feedback area will contain the correct answer, which also gives you a first pattern match to consider.

Note: The text enclosed between $$ delimiters is TeX and will be rendered by your Maths filter (which you must already have installed and enabled).

Options for entering answers

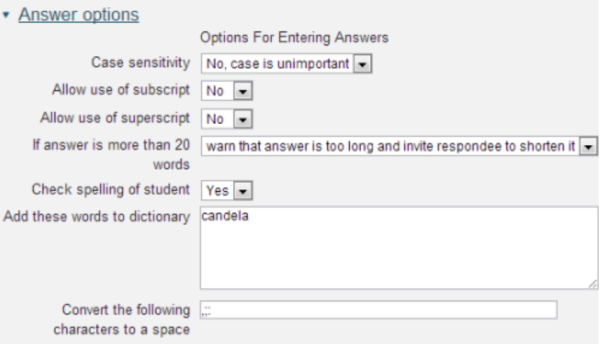

Case sensitivity: No or yes.

Allow use of subscript/superscript: No or Yes. Any subscripts or superscripts entered by the student are contained in their response between the standard tags `<sub>` and `</sub>` or `<sup>` and `</sup>`. For example:

- The formula for water is H₂O will produce

- `The formula for water is H<sub>2</sub>O`

- The speed of light is approximately 3x10⁸ m s⁻¹ will produce

- `The speed of light is approximately 3x10<sup>8</sup> m s<sup>-1</sup>`

At run time keyboard users may move between normal, subscript and superscript by using the up-arrow and down-arrow keys. You may wish to include this information within your question. If you do please note that the up-arrow and down-arrow provided in the HTML editor’s ‘insert custom characters’ list are not spoken by a screen reader and you should also include the words ‘up-arrow’ and ‘down-arrow’.

If answer is more than 20 words: We strongly recommend that you limit responses to 20 words. Allowing unconstrained responses often results in responses that are both right and wrong – which are difficult to mark consistently one way or the other.

Check spelling of student: How many ways do you know of to spell ‘temperature’? We’ve seen 14! You will improve the marking accuracy by insisting on words that are in the Moodle system dictionary.

Add these words to dictionary: When dealing with specialised scientific, technical and medical terms that are not in a standard dictionary it is most likely that you will have to add them by using this field. Enter your words leaving a space between them.

Convert the following characters to space: In Pattern match words are defined as sequences of characters between spaces. The exclamation mark and question mark are also taken to mark the end of a word. The period is a special case; as a full stop it is also a word delimiter but as the decimal point it is not. All other punctuation is considered to be part of the response but this option lets you remove it.

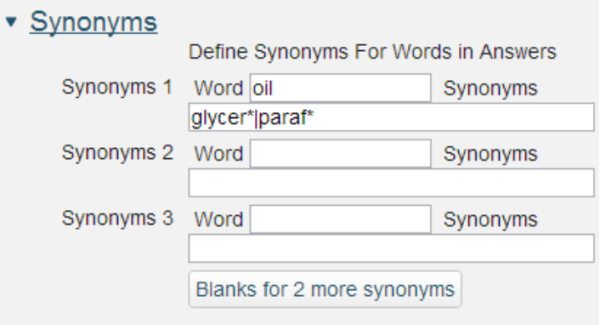

Words and synonyms: All words and synonyms are specified as they are to be applied by the response matching. They do not have to be full words but can be stems with a wildcard.

Synonyms may only be single words, i.e. alternative phrases cannot be specified in synonym lists.

From the example above any occurrence of the word oil in the response match will be replaced by oil|glycer*|paraf* before the match is carried out.
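The substitution described above can be pictured as a simple lookup applied to each word of the pattern before matching. The sketch below is an illustration only: the function name expand_synonyms and the pattern body "oil floats on water" are made up for the example, and it assumes the pattern body is a plain list of space-separated words.

```python
def expand_synonyms(pattern_body, synonyms):
    """Replace each whole pattern word that has a synonym entry."""
    return " ".join(synonyms.get(word, word) for word in pattern_body.split())


# Synonym entry taken from the text; the pattern body is hypothetical.
synonyms = {"oil": "oil|glycer*|paraf*"}
print(expand_synonyms("oil floats on water", synonyms))
# prints: oil|glycer*|paraf* floats on water
```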

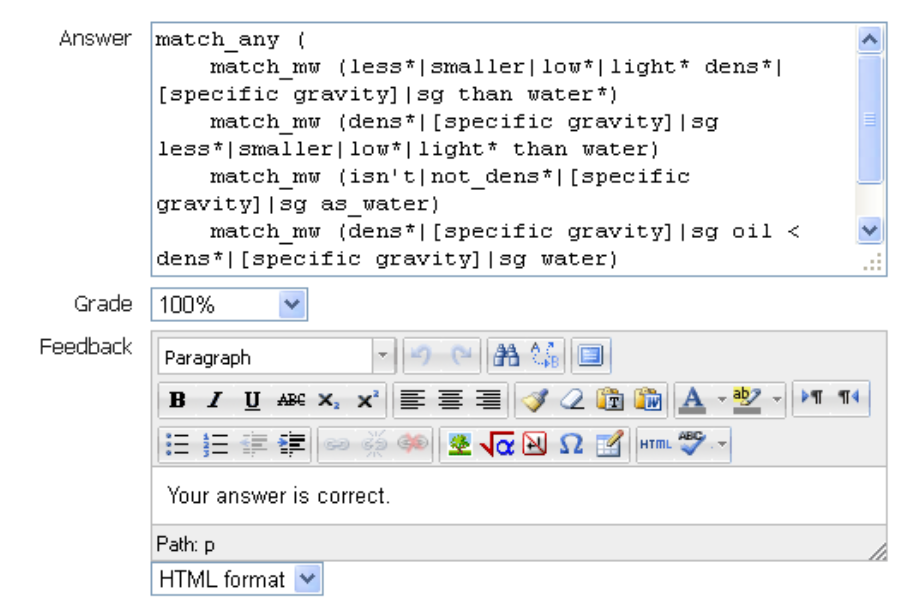

In the other example above, oil above water, there are four acceptable phrases and in each there are various alternative words.

Take the first phrase, match_mw(less*|smaller|low*|light* dens*|[specific gravity]|sg than water*). This would match “less dense than water” or “has a lower specific gravity than water”. Please see the section on Pattern match syntax for a full description.

Answer: This example shows that Pattern match will support complex response matching.

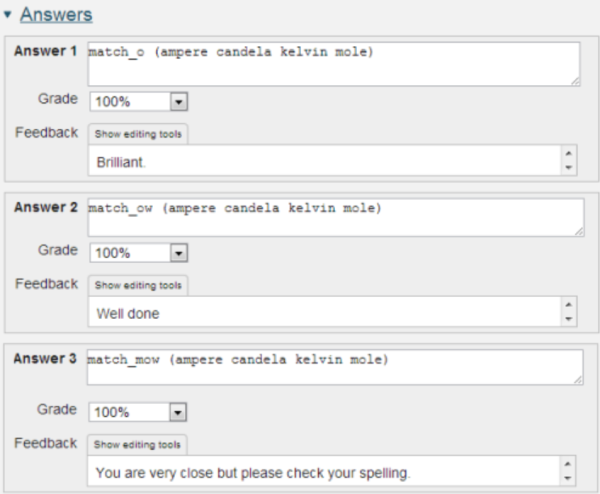

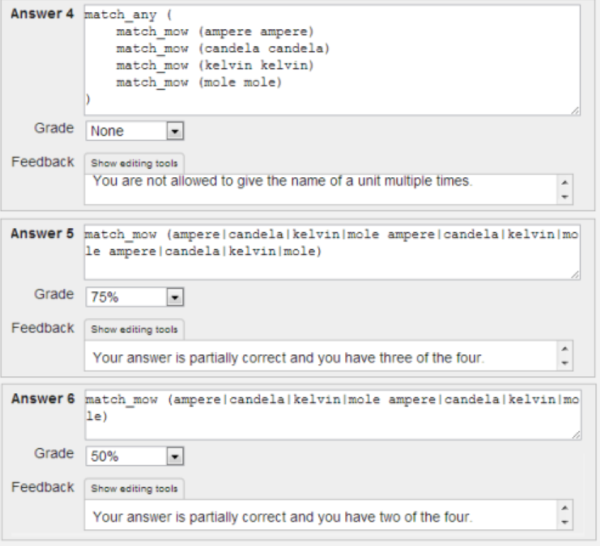

Take the first answer field: match_o(ampere candela kelvin mole) is an exact match for the four words, with the additional feature that the matching option 'o' allows the words to be given in any order.

The answer field match_ow(ampere candela kelvin mole) requires the same four words, again in any order, but also allows other words.

The third answer field match_mow(ampere candela kelvin mole) also allows for misspellings, including those that are still in the dictionary, e.g. mule instead of mole.

Please see the section on Pattern match syntax for a full description.
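To make the difference between the 'o' and 'w' options concrete, here is a much simplified sketch. It handles plain words only and ignores the 'm' (misspelling) option, wildcards and synonyms; the function simple_match is hypothetical and is not how the question type is implemented.

```python
def simple_match(options, pattern, response):
    """Simplified matcher for plain words.

    'o' in options: pattern words may appear in any order.
    'w' in options: extra words are allowed in the response.
    """
    wanted = pattern.split()
    given = response.split()
    if "w" not in options and len(given) != len(wanted):
        return False
    if "o" in options:
        # Each pattern word must be found in a distinct response word.
        remaining = list(given)
        for word in wanted:
            if word not in remaining:
                return False
            remaining.remove(word)
        return True
    # Fixed order: pattern words must occur in sequence, possibly
    # with extra words between them (only reachable with 'w' when
    # the lengths differ).
    words = iter(given)
    return all(word in words for word in wanted)


pattern = "ampere candela kelvin mole"
print(simple_match("o", pattern, "mole kelvin candela ampere"))        # True
print(simple_match("o", pattern, "the mole kelvin candela ampere"))    # False
print(simple_match("ow", pattern, "the mole kelvin candela ampere"))   # True
```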

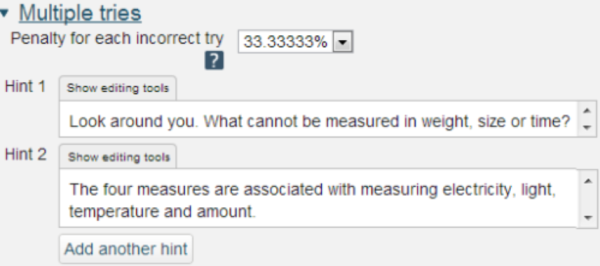

Grade: Between ‘none’ and 100%. At least one response must have a mark of 100%.

Feedback: Specific feedback that is provided to anyone whose response is matched by the response matching rule in Answer.

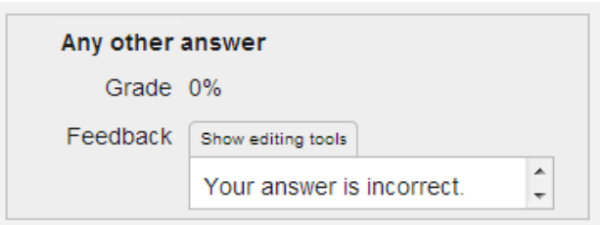

The feedback for all non-matched responses should go into the ‘Any other answer’ field.

How the response is handled

The basic unit of the student response that Pattern match operates on is the word, where a word is defined as a sequence of characters between spaces. The full stop (but not the decimal point), exclamation mark and question mark are also treated as ending a word.
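The word definition above can be approximated as follows. This is a sketch under stated assumptions, not the plugin's tokeniser: the function split_words is hypothetical, and a full stop is treated as a decimal point only when it has a digit on both sides. The convert_to_space argument stands in for the 'Convert the following characters to space' option.

```python
import re


def split_words(response, convert_to_space=""):
    """Split a response into words.

    Spaces, '?' and '!' end a word. A '.' ends a word unless it sits
    between two digits, where it is kept as a decimal point.
    Characters listed in convert_to_space are replaced by spaces first.
    """
    for ch in convert_to_space:
        response = response.replace(ch, " ")
    # Turn word terminators into spaces; keep '.' between two digits.
    response = re.sub(r"[?!]|(?<!\d)\.|\.(?!\d)", " ", response)
    return response.split()


print(split_words("It is 2.5 degrees. Hot!"))
# prints: ['It', 'is', '2.5', 'degrees', 'Hot']
print(split_words("oil, water;air", convert_to_space=",;"))
# prints: ['oil', 'water', 'air']
```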

Numbers are special instances of words and are matched by value, not by the form in which they are given. match_w(25 ms<sup>-1</sup>) will match the following correct responses: 25 ms<sup>-1</sup>, 2.5e1 ms<sup>-1</sup>, 2.5x10<sup>1</sup> ms<sup>-1</sup>

With the exception of numbers and the word terminators (space, full stop, ? and !), Pattern match matches what it is given. Whether case does or does not matter is left to the author to decide, as is the significance of punctuation such as , ; : and others.
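Matching by value rather than by form can be sketched like this. The helper names numeric_value and words_match are hypothetical, and the handling of a power of ten written with superscript tags (x10 followed by an exponent in sup tags) is an assumption about how such a response might arrive, not a statement of the plugin's behaviour.

```python
import re

# Plain or e-notation numbers, e.g. 25, 2.5, 2.5e1
PLAIN = re.compile(r"[+-]?\d+(?:\.\d+)?(?:[eE][+-]?\d+)?")
# Assumed superscript form, e.g. 2.5x10<sup>1</sup>
POWER = re.compile(r"([+-]?\d+(?:\.\d+)?)x10<sup>([+-]?\d+)</sup>")


def numeric_value(word):
    """Return the float value of a word, or None if it is not a number."""
    if PLAIN.fullmatch(word):
        return float(word)
    power = POWER.fullmatch(word)
    if power:
        return float(f"{power.group(1)}e{power.group(2)}")
    return None


def words_match(pattern_word, response_word):
    """Compare numbers by value and everything else by exact text."""
    p, r = numeric_value(pattern_word), numeric_value(response_word)
    if p is not None and r is not None:
        return p == r
    return pattern_word == response_word


print(words_match("25", "2.5e1"))               # True
print(words_match("25", "2.5x10<sup>1</sup>"))  # True
print(words_match("25", "twenty-five"))         # False
```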

The response is treated as a whole with the exception that words that are required to be in proximity must also be in the same sentence.

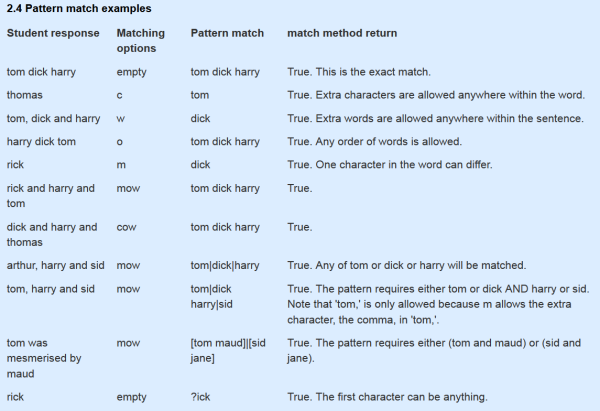

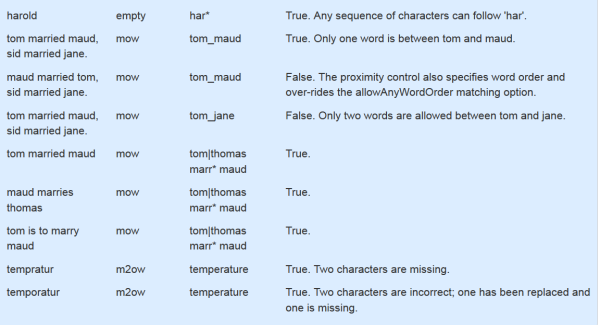

Pattern match syntax

The match syntax can be considered in three parts.

- the matching options e.g. mow

- the words to be matched e.g. tom dick harry, together with the in-word special characters

- 'and', 'or' and 'not' combinations of matches e.g. match_any()

Matching options

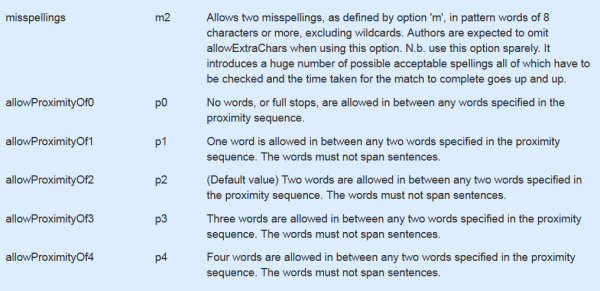

The matching options are appended to the word match with an intervening underscore and may be combined. A typical match combines the options ‘mow’ to allow for

- misspellings

- any word order

- any extra words

and is written match_mow(words to be matched).

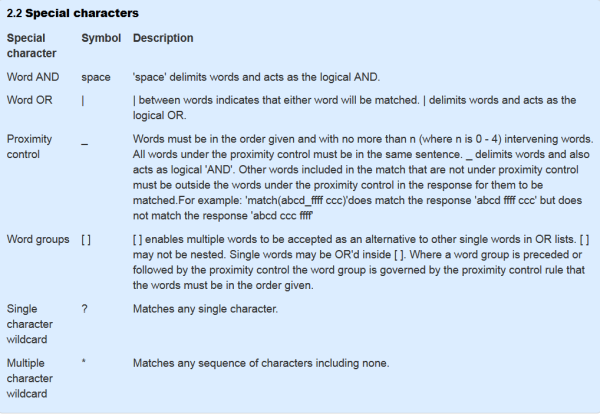

Special characters

It is possible to match some of the special characters by ‘escaping’ them with the ‘\’ character. So match(\|) will match ‘|’. Ditto for _, [, ], and *. And if you wish to match round brackets then match(\(\)) will match exactly ‘()’

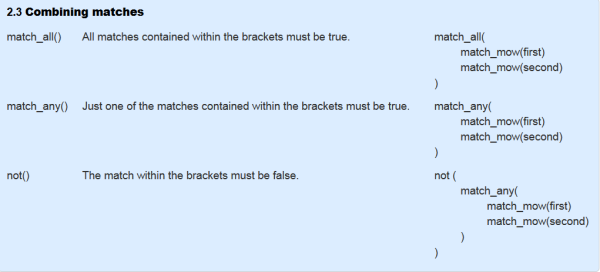

Combinations

match_all(), match_any() and not() may all be nested.
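Nesting can be pictured as composing small yes/no tests. The sketch below mirrors the names match_all, match_any and not (written not_ because not is a reserved word in Python); the contains helper is a hypothetical stand-in for a single word match and is not part of the Pattern match syntax.

```python
def contains(word):
    """Stand-in for a single match: true if the word occurs in the response."""
    return lambda response: word in response.split()


def match_all(*rules):
    """True only if every nested rule matches."""
    return lambda response: all(rule(response) for rule in rules)


def match_any(*rules):
    """True if at least one nested rule matches."""
    return lambda response: any(rule(response) for rule in rules)


def not_(rule):
    """True if the nested rule does not match."""
    return lambda response: not rule(response)


# (oil OR paraffin) AND NOT sinks
rule = match_all(
    match_any(contains("oil"), contains("paraffin")),
    not_(contains("sinks")),
)
print(rule("oil floats on water"))   # True
print(rule("oil sinks in water"))    # False
```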

Examples

Advice on creating matching rules

How can you possibly guess the multiplicity of phrases that your varied student cohort will use to answer a question? Of course you can’t, but you can record everything and over time you will build a bank of student responses on which you can base your response matching. And gradually you might be surprised at how well your response matching copes.

Before we describe the response matching it’s worth stressing:

- The starting point is asking a question that you believe you will be able to mark accurately.

- How you phrase the question can have a significant impact.

- Pattern match works best when you are asking for a single explanation that you will mark as simply right or wrong. You will find that dealing with multiple parts in a question or apportioning partial marks is a much harder task that will quickly turn into a research project.

- A bank of marked responses from real students is an essential starting point for developing matching patterns. Consider using the question in a human marked essay to get your first bank of student responses. Alternatively first use the question in a deferred feedback test where marks are allocated once all responses are received.

- This question type, possibly more than all others, demands that you monitor student responses and amend your response matching as required. There will come a point at which you believe that the response matching is ‘good enough’ and might wonder ‘how many responses might I need to check?’ Unfortunately there is no easy answer to this question. Sometimes 200 responses will be sufficient. At other times you may have to go much further than this. If you do, bear in mind that:

  - Asking students to construct their response, as opposed to choosing it from a list, asks more of the student i.e. it is worth your while to do this.

  - Human markers following a mark scheme are fallible too. Ditto.

- Stemming is the accepted method of catering for different word endings e.g. mov* will cater for ‘moved’ and ‘moves’ and ‘moving’ etc.

- Students do not type responses that are deliberately wrong. Why should they? This is in contrast to academics who try to defeat the system. Consequently you will not find students entering the right response but preceding it with ‘it is not..’ And consequently you do not have to match deliberate errors.

- The proximity control enables you to link one word to another.

- It is often the case that marking obvious wrong responses early in your matching scheme will improve your overall accuracy.

- Dealing with responses that contain both right and wrong answers requires you to take a view. You will either mark all such responses right or wrong. The computer will carry out your instructions consistently c.f. asking a group of human markers to apply your mark scheme consistently.

- You should aim for an accuracy of >95% (in our trials our human markers were in the range 92%-96%). But, of course, aim as high as is reasonable given the usual diminishing returns on continuing efforts.
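Checking a rule against a bank of human-marked responses, as advised above, can be sketched as below. Everything here is illustrative: the function marking_accuracy, the stand-in rule (which accepts any word beginning with the stem mov) and the four sample responses are invented for the example and are not real student data.

```python
def marking_accuracy(rule, marked_responses):
    """Return (accuracy, disagreements) for a rule against human marks.

    marked_responses is a list of (response, human_mark) pairs, where
    human_mark is True for right and False for wrong.
    """
    if not marked_responses:
        raise ValueError("need at least one marked response")
    disagreements = [
        (response, human_mark)
        for response, human_mark in marked_responses
        if rule(response) != human_mark
    ]
    accuracy = 1 - len(disagreements) / len(marked_responses)
    return accuracy, disagreements


def rule(response):
    # Hypothetical stand-in for a pattern using the stem mov*.
    return any(word.startswith("mov") for word in response.lower().split())


# Made-up sample bank, for illustration only.
bank = [
    ("the plates moved apart", True),
    ("it is moving", True),
    ("nothing happens", False),
    ("the plates shift", True),
]
accuracy, disagreements = marking_accuracy(rule, bank)
print(accuracy)        # 0.75
print(disagreements)   # [('the plates shift', True)]
```

The list of disagreements is the useful part: each entry is a response to inspect before amending the matching rules.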

History

The underlying structure of the response matching described here was developed in the Computer Based Learning Unit of Leeds University in the 1970s and was incorporated into Leeds Author Language. The basic unit of the word, the matching options of allowAnyChars, allowAnyWords, allowAnyOrder and the word OR feature all date back to Leeds Author Language.

In 1976 the CALCHEM project which was hosted by the Computer Based Learning Unit, the Chemistry Department at Leeds University and the Computer Centre of Sheffield Polytechnic (now Sheffield Hallam University) produced a portable version of Leeds Author Language.

A portable version for microcomputers was developed in 1982 by the Open University, the Midland Bank (as it then was; now Midland is part of HSBC) and Imperial College. The single and multiple character wildcards were added at this time.

The misspelling and proximity options and word groups in 'or' lists were added as part of the Open University COLMSCT projects looking at free-text response matching during 2006-2009.

References

Philip G. Butcher and Sally E. Jordan, A comparison of human and computer marking of short free-text student responses, Computers & Education 55 (2010) 489-499

This info was copied from http://www.open.edu/openlearnworks/mod/oucontent/view.php?id=52747&section=2.2.1 on September 17th 2014.